At last October’s Embedded Linux Conference Europe, Brent Roman, an embedded software engineer at the Monterey Bay Aquarium Research Institute (MBARI), described the two-decade-long evolution of MBARI’s Linux-controlled Environmental Sample Processor (ESP). Roman’s lessons in reducing power consumption on the remotely deployed, sensor-driven device apply to a wide range of remote Internet of Things projects. The take-home lesson: It’s not only about saving power on the device but also about the communications links.

The ESP is designed to quickly identify potential health hazards, such as toxic algae blooms. The microbiological analysis device is anchored offshore and moored at a depth of two to 30 meters, where algae are typically found. It then performs chemical and genetic assays on water samples. Results are sent over an RS-232 cable to a 2G modem mounted on a float, which transmits the data back to shore. There are 25 ESP units in existence at oceanographic labs around the world, with four currently deployed.

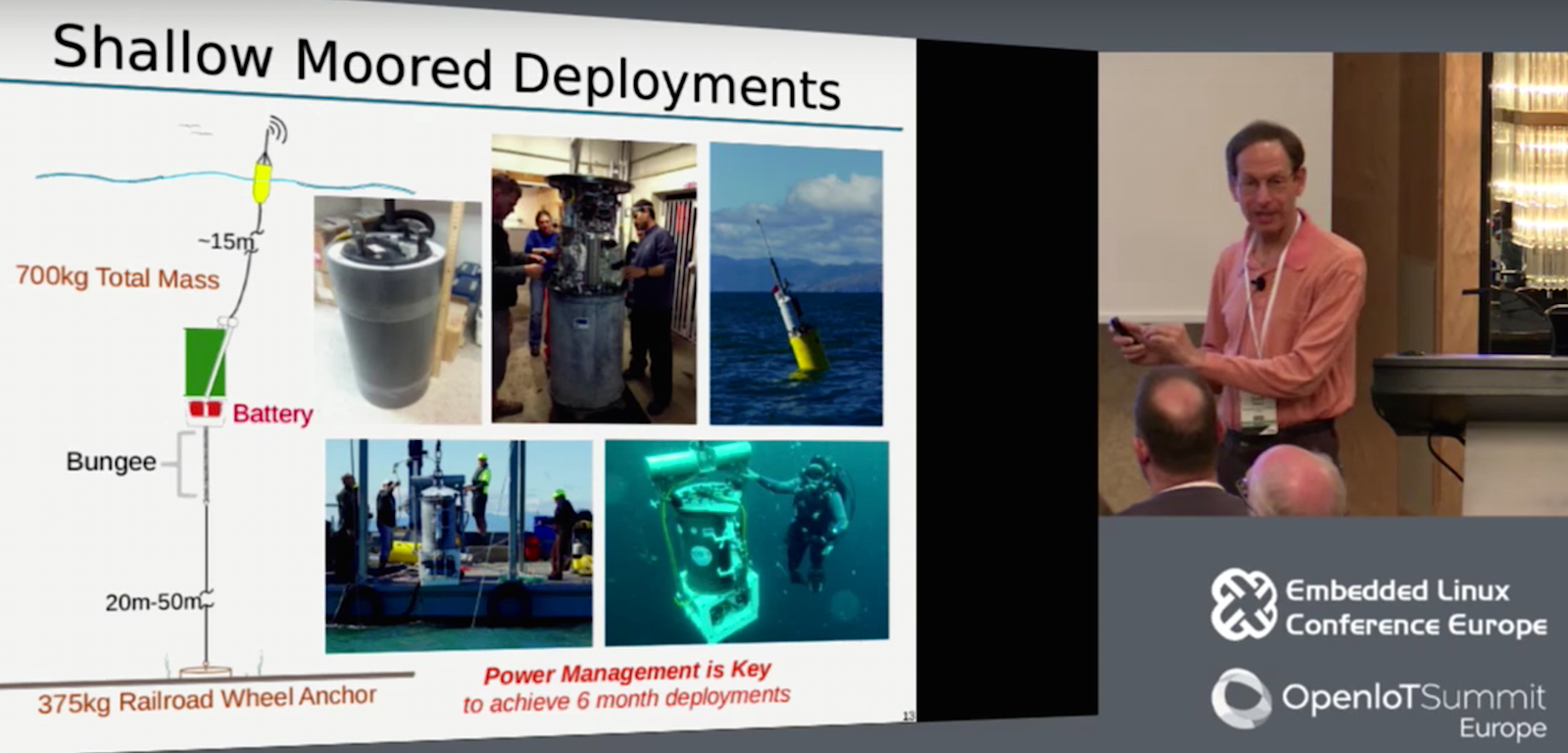

Over the years, Roman and his team have worked to reduce power consumption to extend the time between costly site visits. Initially, the batteries lasted only about a month; over time, that was extended to as much as six months.

Before the ESP, the only way to determine the quantity and types of algae in Monterey Bay — and whether they were secreting neurotoxins — was to take samples from a boat and then return to shore for study. The analysis took several days from the point of sampling, by which time the ecosystem, including humans, might already be at risk. The ESP’s wirelessly connected automated sampling system provides a much earlier warning while also enabling more extensive oceanographic research.

Development on the ESP began in 1996, with initial testing in 2002. The key innovation was a system that coordinates the handling of identically sized filter pucks stored on a carousel. “Some pucks filter raw water, some preserve samples, and others facilitate processing for genetic identification,” said Roman. “We built a robot that acts as a jukebox for shifting these pucks around.”

The data transmitted back to headquarters consists of monochrome images of the samples. “Fiducial marks allow us to correlate spots with specific algae and other species such as deep water bacteria and human pathogens,” said Roman.

The ESP unit includes 10 small servo motors to control the jukebox, plus eight rotary valves and 20 solenoid valves. It runs entirely on 360 D-cell batteries. “D cells are really energy dense, with as much energy per Watt as Li-Ion, but are much safer, cheaper, and more recyclable,” said Roman. “But the total energy budget was still 6kWh.”

The original system launched in 2002 ran Linux 2.4 on a Technologic Systems TS-7200 computer-on-module with a 200MHz ARM9 processor, 64MB RAM, and 16MB NOR flash. To extend battery life, Roman first focused on reducing the power load of a separate microcontroller that drove the servo motors.

“When we started building the system in 2002 we discovered that the microcontrollers that were designed for DC servos required buses like CAN and RS-485 with quiescent power draw in the Watt range,” said Roman. “With 10 servos, we would have a 10W quiescent load, which would quickly blow our energy budget. So we developed our own microcontroller using a multi-master I2C bus that we got down to 70mW for every two motors, or less than half a Watt for motor control.”
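The arithmetic behind that choice is easy to check. The sketch below assumes roughly 1W of quiescent draw per servo on a CAN or RS-485 controller (the figure implied by Roman's 10W total for 10 servos) and 70mW per I2C controller board driving two motors; the constant and function names are illustrative, not MBARI's.

```python
# Quiescent (idle) power of the servo-control electronics, in watts.
# Assumption: ~1 W per servo for CAN/RS-485 controllers, as implied by
# the quoted 10 W total for 10 servos.
SERVOS = 10
CAN_RS485_W_PER_SERVO = 1.0

# MBARI's I2C design as quoted: 70 mW for every two motors.
I2C_W_PER_BOARD = 0.070
MOTORS_PER_BOARD = 2


def quiescent_can_rs485(servos: int) -> float:
    """Idle draw if every servo sits on a CAN/RS-485 controller."""
    return servos * CAN_RS485_W_PER_SERVO


def quiescent_i2c(servos: int) -> float:
    """Idle draw with one I2C controller board per pair of motors."""
    # Round up: an odd servo still needs a whole controller board.
    boards = -(-servos // MOTORS_PER_BOARD)
    return boards * I2C_W_PER_BOARD


if __name__ == "__main__":
    print(f"CAN/RS-485: {quiescent_can_rs485(SERVOS):.2f} W")
    print(f"I2C:        {quiescent_i2c(SERVOS):.2f} W")
```

Five boards at 70mW each come to 0.35W, consistent with Roman's "less than half a Watt."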

Even then, the ESP’s total 3W idle power draw limited the device to 70 days between recharges instead of the team’s initial 180-day goal. At first, 70 days was plenty. “We were lucky if we lasted a few weeks before something jammed or leaked and we had to go out and repair it,” said Roman. “But after a few years it became more reliable, and we needed longer battery life.”

The core problem was that the device used considerable power just to keep the system partially awake. “We have to wait for the algae to come to the ESP, which means waiting until the sensors tell us we should take a sample,” said Roman. “Also, if the scientists spot a possible algae bloom in a satellite photo, they may want to radio the device to fire off a sample. The waiting game was killing us.”

In 2014, MBARI updated the system to run Linux 2.6 on a lower-power PC/104 carrier board designed in-house. The board integrated a more efficient Embedded Artists LPC3141 module with a 270MHz ARM9 CPU, 64MB RAM, and 256MB NAND.

The rest of the ESP design remained the same. An I2C serial bus links to the servo controllers, which are turned off during idle. Three RS-232 links connect to the sensors and also communicate with the float’s 500mW cell modem. “RS-232 uses very little power and you can run it beyond recommended limits at up to 20 meters,” said Roman.

When the team installed the more power-efficient LPC3141-based carrier board as a drop-in replacement, the computer’s idle draw fell from almost 2.5W to 0.25W. Overall, the ESP system dropped from 3W to 1W idle power, which extended battery life to 205 days, or almost seven months.
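A back-of-the-envelope model shows why idle draw dominates deployment length. This sketch treats the quoted 6kWh battery budget as fully usable and ignores the energy spent on active sampling; both are simplifying assumptions, so the result is only an upper bound. Runtime is energy divided by average power.

```python
HOURS_PER_DAY = 24.0
BATTERY_WH = 6000.0  # quoted total energy budget of the 360 D cells


def idle_only_days(idle_watts: float, battery_wh: float = BATTERY_WH) -> float:
    """Upper bound on deployment length if the battery fed only the idle load."""
    return battery_wh / idle_watts / HOURS_PER_DAY


# (label, idle draw in watts, reported deployment in days)
SYSTEMS = [
    ("2002 system", 3.0, 70),
    ("2014 LPC3141 board", 1.0, 205),
]

for label, watts, reported in SYSTEMS:
    bound = idle_only_days(watts)
    print(f"{label}: {watts:.1f} W idle -> at most {bound:.0f} days (reported {reported})")
```

The idle-only bounds (about 83 and 250 days) sit above the reported 70 and 205 days, as expected once sampling, valve and servo actuation, and battery derating are counted. The model still captures the key point: cutting idle draw by a factor of three roughly triples the achievable deployment.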

The ESP enters rougher water

Monterey Bay is sufficiently sheltered to permit mooring the ESP about 10 meters below the surface. In more exposed ocean locations, however, the device needs to sit deeper to avoid “being pummeled by the waves,” said Roman.

MBARI has collaborated with other oceanographic research institutions to modify the device accordingly. Woods Hole Oceanographic Institution (WHOI), for example, began deploying ESPs off the coast of Maine. “WHOI needed a larger mooring about 25 meters below the surface,” said Roman. “The problem was that the algae were still way up above it, so they used a stretch rubber hose to pump the water down to the ESP.”

The greater distance required a switch from RS-232 to DSL, which boosted idle power draw to more than 8W. “Even when we retrofitted these units with the lower power CPU board, they only dropped from 8W to 6W, or only 60 days duration,” said Roman.

The Scripps Institution of Oceanography in La Jolla, California, had a similar problem, as it was launching the ESP in the exposed coastal waters of the Pacific. Scripps also opted for a stretch hose, but used more power-efficient RS-422 instead of DSL. RS-422 reaches farther than RS-232, supporting both the 10-meter stretch hose and the 65-meter link to the float.

RS-422 draws more current than RS-232, however, limiting those units to 85 days. Roman considered suspending the CPU to RAM. However, since the CPU was already very power efficient, “the energy you’re using to keep the RAM image refreshed is a fairly big part of the total, so we would have only gained 15 days,” said Roman. He also considered suspending to disk, but decided against it due to flash wear issues and the fact that the Linux 2.6 ARM kernel they used did not support disk hibernation.

Ultimately, tradeoffs in functionality were required. “For Scripps, we made the whole system power on based on time rather than sensor input, so we could shut down the power until it received a radio command,” said Roman.

Because the radio still had to stay on, even this yielded only enough power for 140 days. Roman dug into the AT command set of the 2G modems and found a deep sleep standby option that essentially turns the modems into pagers. The solution reduced the modem’s power from 500mW to 100mW, or 200mW overall.

The University of Washington came up with an entirely different solution to enable a deeper ESP mooring. “Rather than using an expensive stretch hose, they tried a 40-meter Cat5 cable to the surface, enabling an Ethernet connection that was more than 100 times faster than RS-232,” said Roman. This setup required a computer at both ends, however, as well as a cellular router that added 2 to 3W.

Roman then came up with the idea to run USB signals over Cat5, avoiding the need for additional computers and routers while still enabling high-bandwidth communications. For this deployment, he used an Icron 1850 Cat5 USB extender, which he says works reliably at over 50 meters. The extender adds 400mW, plus another 150mW for the hub on the ESP.
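The two University of Washington approaches can be compared the same way. This sketch uses only the overheads quoted above: 2 to 3W for the cellular router versus 400mW for the Icron extender plus 150mW for the ESP-side USB hub. It deliberately leaves out the second computer the Ethernet design also needed, which is an assumption in the router's favor, so the real gap is likely larger.

```python
# Added communications overhead, in watts, from figures quoted in the article.
ROUTER_W_RANGE = (2.0, 3.0)  # cellular router for the Cat5 Ethernet link
USB_EXTENDER_W = 0.400       # Icron 1850 Cat5 USB extender
USB_HUB_W = 0.150            # USB hub on the ESP side


def usb_over_cat5_overhead() -> float:
    """Total added draw of the USB-over-Cat5 link."""
    return USB_EXTENDER_W + USB_HUB_W


def savings_vs_router() -> tuple[float, float]:
    """Power saved by USB-over-Cat5 relative to the router alone, as (low, high)."""
    usb = usb_over_cat5_overhead()
    low, high = ROUTER_W_RANGE
    return (low - usb, high - usb)


if __name__ == "__main__":
    print(f"USB over Cat5 adds {usb_over_cat5_overhead():.2f} W")
    lo, hi = savings_vs_router()
    print(f"Saves {lo:.2f} to {hi:.2f} W versus the router alone")
```

Even before counting the extra computer, the USB extender saves roughly 1.5 to 2.5W, more than the entire 1W idle budget of the upgraded ESP.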

Roman also described future plans to add energy harvesting to recharge the batteries. So far, putting a solar panel on the float seems to be the best solution due to the ease of maintenance. The downside to a solar panel is that the wind can more easily tip over the float. A larger float might help.

In summarizing all these projects, Roman concluded that reducing power consumption was a more complex problem than they had imagined. “We worried a lot about active consumption, but should have spent more time on passive, which we finally addressed,” said Roman. “But the real lesson was how important it was to look at the communications power consumption.”


Embedded Linux Conference + OpenIoT Summit North America will be held on February 21-23, 2017 in Portland, Oregon. Check out over 130 sessions on the Linux kernel, embedded development & systems, and the latest on the open Internet of Things.

Linux.com readers can register now with the discount code LINUXRD5 for 5% off the attendee registration price.