You must set up your Apache web server to use SSL, so that your site URL is https:// and not http://. Sure, there are exceptions, such as test servers and lone LAN servers that only you and your cat use.

But any Internet-accessible web server absolutely needs SSL; there is no downside to encrypting your server traffic, and it’s pretty easy to set up. For LAN servers it may not be as essential; think about who uses it, and how easy it is to sniff LAN traffic.

We’ll learn the easy way to enable SSL on Apache, and then the slightly harder and more authoritative way. Please refer to part 1 of this series, Apache on Ubuntu Linux For Beginners, as this builds on the examples shown there.

The Easy Way

Apache installs with a default self-signed certificate and its private key: /etc/ssl/certs/ssl-cert-snakeoil.pem and /etc/ssl/private/ssl-cert-snakeoil.key. The following virtual host example modifies our example from part 1. SSLEngine on explicitly switches on SSL for this virtual host.

    <VirtualHost *:443>
        ServerAdmin carla@localhost
        DocumentRoot /var/www/test.com
        SSLCertificateFile /etc/ssl/certs/ssl-cert-snakeoil.pem
        SSLCertificateKeyFile /etc/ssl/private/ssl-cert-snakeoil.key
        SSLEngine on
        ServerName test.com
        ServerAlias www.test.com
        ErrorLog ${APACHE_LOG_DIR}/error.log
        CustomLog ${APACHE_LOG_DIR}/access.log combined
    </VirtualHost>

Then enable the Apache SSL module and restart the server:

    $ sudo a2enmod ssl
    $ sudo service apache2 restart

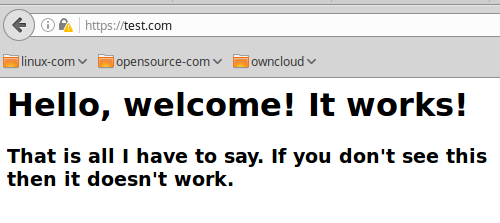

Point your web browser to https://test.com. The first time you do this you’ll get browser paranoia, and warnings about how this site is dangerous and will do terrible things to you. Click through all the steps to make a permanent exception for the site. When, at last, you are allowed to actually visit the site, you will see something like Figure 1.

Hurrah! Success! It should also work for https://www.test.com, and you’ll have to create an exception for that too. Just for fun click on the little padlock in your browser to read about how your SSL is no good because you’re using a self-signed certificate. Your self-signed certificate is fine, and we’ll discuss this more presently.

Troubleshooting

a2enmod is short for “Apache 2 enable module”. Apache always performs a configuration test at start and restart. If it finds any errors it helpfully tells you how to see what they are:

    Job for apache2.service failed because the control process
    exited with error code. See "systemctl status apache2.service"
    and "journalctl -xe" for details.

So what are you waiting for? Run the two commands to see what’s wrong. This snippet tells me that I forgot to enable the SSL module:

    Syntax error on line 4 of /etc/apache2/sites-enabled/test.com.conf:
    Invalid command 'SSLCertificateFile', perhaps misspelled or defined
    by a module
    Action 'configtest' failed.
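
You don’t have to wait for a failed restart to find these mistakes. Here is a minimal sketch of a wrapper that runs the configuration test first and restarts only when it passes. The function name safe_restart and the APACHECTL and RESTART_CMD variables are made up for illustration and are not part of Apache; adjust the commands to suit your system.

```shell
#!/bin/sh
# Hypothetical helper: restart Apache only if the config test passes.
# Both commands can be overridden, e.g. for a dry run.
APACHECTL="${APACHECTL:-sudo apache2ctl}"
RESTART_CMD="${RESTART_CMD:-sudo service apache2 restart}"

safe_restart() {
    # apache2ctl configtest exits non-zero when it finds a syntax error
    if $APACHECTL configtest; then
        $RESTART_CMD
    else
        echo "configtest failed; Apache was not restarted" >&2
        return 1
    fi
}

# Usage: call the function after editing a virtual host file
#   safe_restart
```

The point of the design is that a typo leaves the running server alone, rather than taking it down with a failed restart.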

Another way to test SSL is with openssl s_client, a fabulous tool for testing SSL on servers. It spits out a lot of output, and prints the public encryption certificate. Look for these items at the beginning and the end to indicate a correct setup:

    $ openssl s_client -connect test.com:443
    CONNECTED(00000003)
    depth=0 CN = xubuntu
    verify return:1
    ---
    Certificate chain
     0 s:/CN=xubuntu
       i:/CN=xubuntu
    [...]
    Start Time: 1476393579
    Timeout : 300 (sec)
    Verify return code: 0 (ok)

This is what you’ll see when SSL is not enabled:

    $ openssl s_client -connect test.com:443
    connect: Connection refused
    connect:errno=111
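
If all that output is more than you want to read, you can pipe the certificate into openssl x509 and print just the highlights. This is a small sketch, not the only way to do it; cert_summary is a hypothetical helper name, and test.com is the example host from this article.

```shell
#!/bin/sh
# Hypothetical helper: summarize a PEM certificate read from stdin.
# Prints who the certificate was issued to, who issued it,
# and the dates it is valid between.
cert_summary() {
    openssl x509 -noout -subject -issuer -dates
}

# Usage: fetch the server certificate and summarize it.
# </dev/null makes s_client exit instead of waiting for input.
#   openssl s_client -connect test.com:443 -servername test.com \
#       </dev/null 2>/dev/null | cert_summary
```

With a self-signed certificate, the subject and issuer lines should be the same.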

Another way to check is with netstat. When SSL is correctly configured and you have a virtual host up, it will listen on port 443:

    $ sudo netstat -untap
    [...]
    tcp 0 0 0.0.0.0:443
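
Newer Ubuntu releases may not install netstat by default, and ss from the iproute2 package does the same job. The sketch below filters the listing down to port 443. It assumes the local address is in the fourth column, which holds for both ss -tln and netstat -tln; https_listeners is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: keep only the sockets whose local address
# (fourth column in ss and netstat output) ends in :443.
https_listeners() {
    awk '$4 ~ /:443$/'
}

# Usage: list listening TCP sockets with their owning process,
# then keep only port 443.
#   sudo ss -tlnp | https_listeners
```

No output means nothing is listening on port 443.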

Apache’s apachectl -S is a great tool for examining your server configuration and finding any errors. It lists your document root, HTTP user and group, configuration file locations, and active virtual hosts.

Forward Port 80 Connections

When you get your nice SSL and HTTPS setup working, you should automatically redirect HTTP traffic to your HTTPS address. Otherwise, site visitors who try HTTP will see an error message, and then go away and never visit you again. The best way to do this is by editing your virtual host configuration. For our test.com, add this to the existing virtual host file. Note the trailing slash on the target URL; without it, a request for /page is redirected to https://test.compage.

    <VirtualHost *:80>
        ServerName test.com
        ServerAlias www.test.com
        DocumentRoot /var/www/test.com
        Redirect / https://test.com/
    </VirtualHost>

Restart Apache, and try both https://test.com and http://test.com. Both should land on https://. Refresh your browser to make sure. The Redirect directive defaults to a 302 temporary redirect. Always use this until you have thoroughly tested your configuration, and then you can change it to Redirect permanent, which sends a 301 that browsers cache.
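
Browsers cache redirects, so it can be easier to check from the command line. Here is a rough sketch that boils the response headers down to the status code and the redirect target. The show_redirect name is made up for illustration, and it assumes curl is installed.

```shell
#!/bin/sh
# Hypothetical helper: read HTTP response headers on stdin and print
# the status code from the first line plus the Location header, if any.
show_redirect() {
    # HTTP headers end in CRLF; strip the carriage returns first
    tr -d '\r' | awk '
        NR == 1                    { print "status: " $2 }
        tolower($1) == "location:" { print "location: " $2 }
    '
}

# Usage: -s silences the progress meter, -I asks for headers only.
#   curl -sI http://test.com/ | show_redirect
```

A working redirect should report a 302 status (301 after you switch to Redirect permanent) and a location that starts with https://.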

Using Third-Party SSL Certificates

Managing your own SSL certificate authority and public key infrastructure (PKI) is a royal pain. If you know how to do it, and how to roll out your certificate authorities to your users so they don’t have to battle frightened web browsers, then you are an über guru and I bow to you.

An easier way is to use a trusted third-party certificate authority. Their certificates work without freaking out your web browsers because the authority’s root certificates are already accepted and bundled on your system. Your vendor will have instructions on setting up. See Quieting Scary Web Browser SSL Alerts to learn some ways to tame your SSL madness.
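
Vendors typically start by asking you for a certificate signing request (CSR). As a rough sketch of that first step, assuming your vendor accepts a 2048-bit RSA key and needs nothing in the request beyond the domain name: make_csr is a hypothetical helper name, and your vendor’s own instructions win wherever they differ.

```shell
#!/bin/sh
# Hypothetical helper: make_csr DOMAIN [OUTDIR]
# Creates a new unencrypted 2048-bit RSA private key (DOMAIN.key)
# and a certificate signing request for it (DOMAIN.csr).
make_csr() {
    domain="$1"
    outdir="${2:-.}"
    openssl req -new -newkey rsa:2048 -nodes \
        -keyout "$outdir/$domain.key" \
        -out "$outdir/$domain.csr" \
        -subj "/CN=$domain"
}

# Usage:
#   make_csr test.com
# Check what the request contains before sending it off:
#   openssl req -in test.com.csr -noout -subject
```

You send the .csr file to the vendor and keep the .key file private. The key is what SSLCertificateKeyFile points to once the signed certificate comes back.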

.htaccess

I know, I said I was going to show you how to tame the beastly .htaccess. And I will. Just not today. Soon, I promise you! Until then, this article might be helpful to you: How to Use htaccess to Run Multiple Drupal 7 Websites on Your Cheapo Hosting Account. Sure, it’s about Drupal, but it’s also a good detailed introduction to .htaccess.
