The three cutting-edge frameworks showcased in these talks from MesosCon North America demonstrate the amazing power and flexibility of Apache Mesos for solving large-scale problems.

If you have been following our Apache Mesos series, you have probably noticed how important frameworks are. Mesos frameworks are the essential glue that makes everything work in a Mesos cluster: the layer between Mesos and your applications. They perform a multitude of tasks, including launching and scaling applications, monitoring and health checks, configuration management, and scheduling. In these talks, you'll learn how:

- Netflix uses Mesos to power its recommendation engines.
- Huawei Technologies used Mesos to build a distributed Redis framework.
- Crate.IO runs its distributed, scalable, shared-nothing SQL database as a Mesos framework.

Building a Machine-Learning Orchestration Framework on Apache Mesos

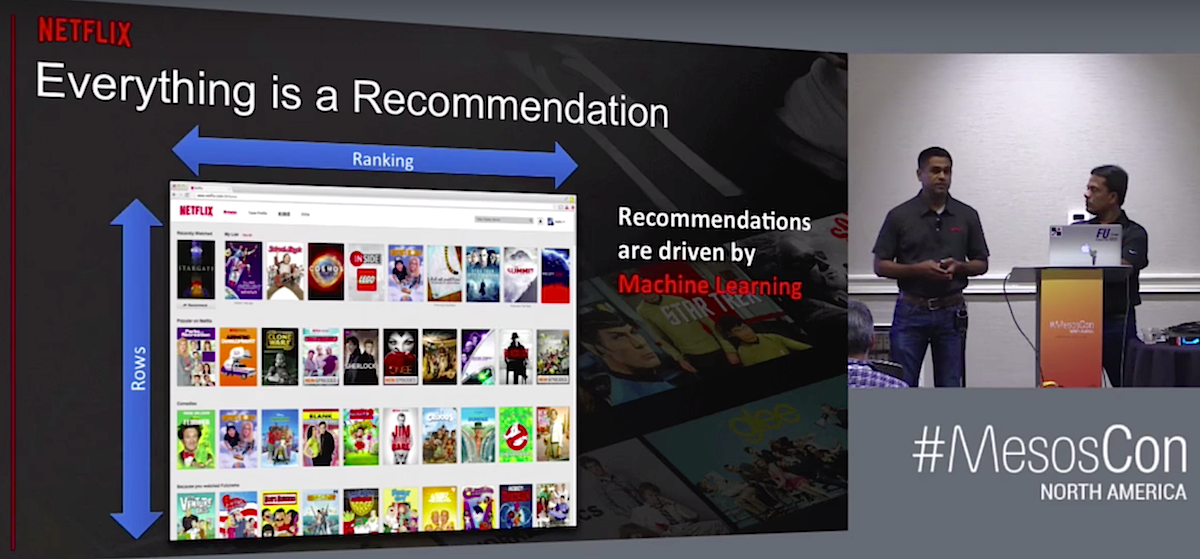

Antony Arokiasamy and Kedar Sadekar, Netflix

Have you ever wondered what powers recommendations on Netflix? It isn't hordes of employees studying your viewing habits, and it isn't PigeonRank. Rather, it is a machine-learning orchestration framework called Meson, which is built on Apache Mesos.

Antony Arokiasamy and Kedar Sadekar lead the personalization infrastructure team at Netflix. They build the infrastructure used by the algorithm teams, who develop the machine-learning algorithms that power Netflix recommendations.

“We want to delight the customer every time you interact with Netflix. We have over 81 million subscribers, and delighting a subscriber or a member means any time you turn on Netflix we want to put forth content to you that we feel you will be really happy to watch…Everything you see once you turn on Netflix is a recommendation. For example, on this page every row is sorted in a particular order which is personalized for that particular user,” Sadekar says.

Netflix is a global business, so it uses both global and regional recommendation models, with different tools for different models. "Let's say certain kinds of movies, certain action movies or martial arts movies carry well in all markets. So let's train those sets of users using a global model. So for this we want to use Spark. Let's say in India, you like Bollywood and something else, you like another set of movies, another set of genres. In this one, the technology of choice is R. At the end of it we have a Scala-based thing that's actually doing the model validation, or choosing the best model that needed to be fit," explains Sadekar.

Meson is flexible and complex, incorporating Hadoop, Docker, Spark, R, Python, and Scala. Watch Arokiasamy and Sadekar's talk to learn the details of how it all fits together.

Redis on Apache Mesos, a New Framework

Dhilip Kumar S, Huawei Technologies

Redis is a popular in-memory key-value store, widely used for caching, but running it in a distributed environment is a complex endeavor, and hosting Redis-as-a-service is especially difficult. Dhilip Kumar S of Huawei Technologies shares how he built a thin, high-performance Redis framework on Mesos that preserves Redis's speed while simplifying how it runs in a cluster.

“One of the most popularly requested middleware is Redis,” says Dhilip, “because it’s absolutely lightweight and it’s easy to create, and almost all Web applications require Redis. We think that the biggest problem down the lane two years as a public cloud provider would be to actually maintain this huge number of Redis instances.”

Dhilip's team identified three major Redis deployment scenarios to support: customers who need a simple setup with a single Redis binary; high availability, with a Redis master and slaves on the same host or on different hosts; and Redis 3.0 clusters with multiple masters. They also had to solve the problems of creating, administering, monitoring, clustering, and metering thousands of Redis instances.

Watch the complete presentation to learn how they did it, and to see a live demo of creating multiple Redis instances using Mesos.

Managing Large SQL Database Clusters with the Apache Mesos Crate Framework

Aslan Bakirov and Christian Lutz, Crate.IO

The good news is that Mesos makes it possible to perform amazing, creative large-scale computing feats, as we have seen previously in this blog series. The bad news is that these technologies are still young and present new challenges, such as scaling databases horizontally. Our old, reliable warhorses, MySQL, MariaDB, and PostgreSQL, were not designed to scale out that way. Christian Lutz, CEO of Crate.IO, built Crate, a distributed, highly scalable, highly available, shared-nothing SQL database, to meet these modern challenges.

Aslan Bakirov describes the features that make Crate the best SQL database for Mesos. “Crate has a shared-nothing architecture, which means that nodes in the Crate cluster do not share any states, so that if any node in your cluster fails, the other nodes will not be affected from that. The second main feature is all nodes are equal. Every node can be treated as a master node whenever it’s needed, so if you lose any of your master nodes, one of your slave node can become a master node and expose the cluster state to other nodes in the Crate cluster. The third main feature of the shared-nothing architecture is each node in a Crate cluster can perform any type of a query on every node in the Crate cluster.”

The Crate Mesos Framework integrates the data storage layer with Mesos.

Watch the full presentation to learn more, and to see a live demo.

Mesos Large-Scale Solutions

Please enjoy the previous articles in this series to see more of the ingenious ways people have put Mesos to work on large-scale tasks.

- 4 Unique Ways Uber, Twitter, PayPal, and Hubspot Use Apache Mesos
- How Verizon Labs Built a 600 Node Bare Metal Mesos Cluster in Two Weeks
- Running Distributed Applications at Scale on Mesos from Twitter and CloudBees
- Apache Spark Creator Matei Zaharia Describes Structured Streaming in Spark 2.0
- Open Source Is Key to the Modern Data Center, Says EMC's Joshua Bernstein
- Apache Mesos for Beginners: 3 Videos to Help You Get Started

Apache, Apache Mesos, and Mesos are either registered trademarks or trademarks of the Apache Software Foundation (ASF) in the United States and/or other countries. MesosCon is run in partnership with the ASF.