If your network is fine, read no further.

TROUBLE IN PARADISE

But it’s likely you’re still reading. Because things are not exactly fine. Because you are probably like 99.999% of us who are experiencing crazy changes in our networks. Traffic in metro networks is exploding, and those networks are characterized by many nodes, varying traffic flows, and a wide mix of services and bit rates.

To cope with all this traffic growth and these changing usage patterns, WAN and metro networks require more flexibility. They need network resources that can be dynamically and easily partitioned into logical zones, and the ability to create new services out of pools of network resources.

Virtualization has already achieved this in data centers, where compute resources have long been virtualized using virtual machines (VMs) and even the NICs that provide network connectivity to VMs have been virtualized. In metro and WAN networks, however, network resources are still rigidly and physically assigned.

You, the devoted architects and tireless operators of these high-capacity networks, are confronted with complex networking structures that don’t lend themselves to any form of dynamic change. A further dilemma is that you need both to manage existing connections and to build new platforms that excel at delivering on-demand services and subscriber-level networking.

VAULTING TO THE FRONT

IXPs and ISPs that architect their networks with dynamic, programmatic control will achieve a winning service velocity.

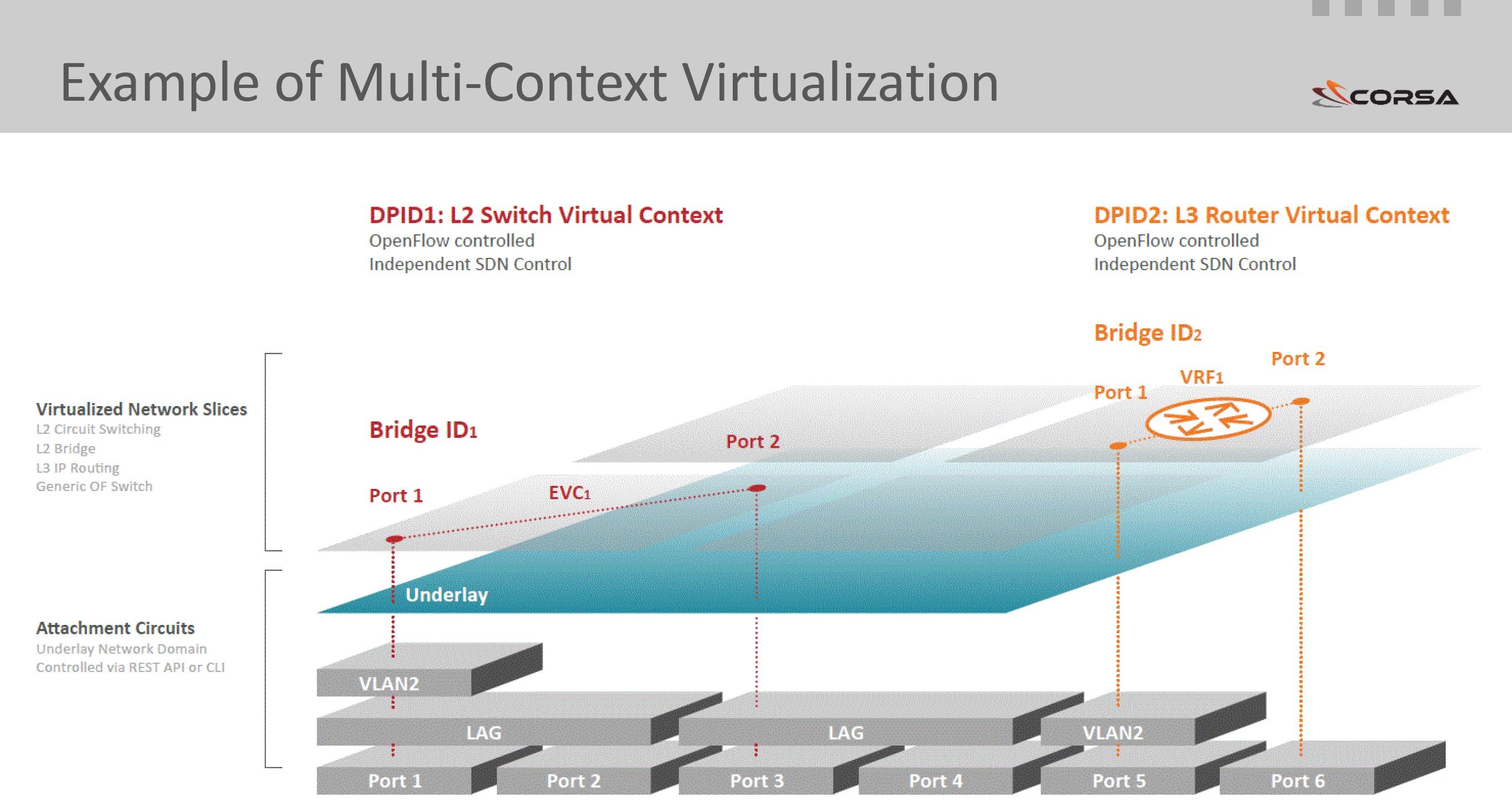

Enter true network hardware virtualization, which creates virtual forwarding contexts (VFCs) at WAN scale. For ISPs, Internet Exchanges (IXs), and large campus networks, WAN-scale multi-context virtualization allows logical forwarding contexts to be created dynamically within a physical switch, making programmable allocation of network resources possible.

VIRTUALIZATION IN NETWORK HARDWARE FOR WAN AND METRO NETWORKS

Corsa’s SDN data planes allow hardware resources to be exposed as independent logical SDN Virtual Forwarding Contexts (VFCs) running at 10G and 100G physical network speeds. VFCs are created in the logical overlay under SDN application control. Three context types are fully optimized for production network applications: L2 Bridge, L3 IP Routing, and L2 Circuit Switching. A fourth, the Generic OpenFlow Switch context type, is provided for advanced networking applications in which the user wants to define arbitrary forwarding logic with OpenFlow.

Each packet entering the hardware is processed with full awareness of which VFC it belongs to.

Each VFC is assigned its own dedicated hardware resources, which are independent of other VFCs and cannot be scavenged by them. Each VFC can also be controlled by its own separate SDN application.
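To make the isolation model concrete, here is a minimal Python sketch, assuming a single physical pool of flow-table entries that is split into fixed per-VFC quotas when each VFC is created. It models only table entries, and the names `Switch`, `Vfc`, `create_vfc`, `add_flow`, and `QuotaExceeded` are hypothetical illustrations, not Corsa's API.

```python
from dataclasses import dataclass, field
from enum import Enum


class ContextType(Enum):
    # The four VFC context types described in the text.
    L2_BRIDGE = "l2-bridge"
    L3_IP_ROUTING = "l3-ip-routing"
    L2_CIRCUIT_SWITCHING = "l2-circuit-switching"
    GENERIC_OPENFLOW = "generic-openflow"


class QuotaExceeded(Exception):
    """Raised when a VFC tries to use more than its own reservation."""


@dataclass
class Vfc:
    name: str
    ctype: ContextType
    controller: str   # hypothetical: identifier of this VFC's own SDN application
    table_quota: int  # flow-table entries reserved for this VFC alone
    entries: dict = field(default_factory=dict)

    def add_flow(self, match, action):
        # A VFC draws only on its own reservation; when it is full the
        # request fails instead of taking entries from another VFC.
        if match not in self.entries and len(self.entries) >= self.table_quota:
            raise QuotaExceeded(f"{self.name}: {self.table_quota} entries in use")
        self.entries[match] = action


class Switch:
    """Hypothetical physical switch with a fixed pool of flow-table entries."""

    def __init__(self, total_entries):
        self.free_entries = total_entries
        self.vfcs = {}

    def create_vfc(self, name, ctype, controller, table_quota):
        # Capacity is carved out of the physical pool when the VFC is created,
        # so the sum of all quotas can never exceed the hardware.
        if name in self.vfcs:
            raise ValueError(f"VFC {name!r} already exists")
        if table_quota > self.free_entries:
            raise ValueError(f"only {self.free_entries} entries left")
        self.free_entries -= table_quota
        self.vfcs[name] = Vfc(name, ctype, controller, table_quota)
        return self.vfcs[name]
```

With a 1,000-entry switch split 600/400 between two tenants, the first tenant filling all 600 of its entries makes its own next `add_flow` fail, while the second tenant can still install flows. The design choice being illustrated is reservation at creation time rather than first-come sharing of a common pool.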

The physical ports of the underlay are abstracted from the logical interfaces of the overlay. Each logical interface defined for a VFC corresponds to either a physical port or an encapsulated tunnel in the underlay, such as a VLAN, MPLS pseudowire, GRE tunnel, or VXLAN tunnel. Each logical interface of a VFC can be shaped to the bandwidth it requires.
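The port-and-tunnel abstraction, together with the per-packet VFC awareness described earlier, amounts to a lookup from an underlay identifier to an overlay interface. The following is a minimal Python sketch, assuming exact-match classification on (physical port, encapsulation type, tag), where the tag stands for a VLAN ID, MPLS label, GRE key, or VXLAN VNI. `UnderlayKey`, `LogicalInterface`, and `InterfaceMap` are hypothetical names, not a vendor API, and a hardware data plane would perform this lookup in its own tables rather than in software.

```python
from dataclasses import dataclass
from typing import Optional

# Encapsulations mentioned in the text; "none" means the bare physical port.
ENCAPS = {"none", "vlan", "mpls", "gre", "vxlan"}


@dataclass(frozen=True)
class UnderlayKey:
    port: int                  # physical port number
    encap: str = "none"        # one of ENCAPS
    tag: Optional[int] = None  # VLAN ID, MPLS label, GRE key or VXLAN VNI

    def __post_init__(self):
        if self.encap not in ENCAPS:
            raise ValueError(f"unsupported encapsulation {self.encap!r}")


@dataclass(frozen=True)
class LogicalInterface:
    vfc: str          # owning VFC
    name: str         # interface name inside that VFC
    shaped_mbps: int  # bandwidth this interface is shaped to


class InterfaceMap:
    """Hypothetical underlay-to-overlay binding table."""

    def __init__(self):
        self._bindings = {}

    def bind(self, key, lif):
        # Each underlay identifier may belong to exactly one logical interface.
        if key in self._bindings:
            raise ValueError(f"{key} is already bound")
        self._bindings[key] = lif

    def classify(self, port, encap="none", tag=None):
        # Returns the logical interface (and thus the VFC) for an arriving
        # packet, or None if the packet matches no binding.
        return self._bindings.get(UnderlayKey(port, encap, tag))
```

Because two VLANs on the same physical port can be bound to logical interfaces in different VFCs, one port can carry traffic for several tenants while each VFC sees only its own logical interfaces.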

USE SDN VIRTUALIZATION TO AUGMENT YOUR NETWORK WHERE IT’S NEEDED

This level of hardware virtualization, coupled with advanced traffic engineering and management, allows traditional Layer 2 and Layer 3 services to be built with innovative new SDN-enabled capabilities. For example, an SDN-enabled Layer 2 VPLS service can offer features such as Bandwidth on Demand or application-controlled forwarding rules, while still using the existing infrastructure of the underlay (physical) network to provide connectivity for the new service. To differentiate further, service providers may even let customers bring their own SDN controllers to control their services, while the provider retains full control over the underlay network.
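Bandwidth on Demand can be illustrated with a token bucket, a standard rate-limiting technique, whose rate is changed at runtime. This is a software model under stated assumptions, not how Corsa's hardware shapes traffic: `TokenBucketShaper`, `set_rate`, and `conforms` are hypothetical names, and time is passed in explicitly (in seconds) so the behaviour is deterministic.

```python
class TokenBucketShaper:
    """Token bucket for one logical interface (illustrative only)."""

    def __init__(self, rate_bps, burst_bytes):
        self.rate_bps = rate_bps          # current shaped rate, bits per second
        self.burst_bytes = burst_bytes    # bucket depth
        self.tokens = float(burst_bytes)  # bytes that may be sent right now
        self.last = 0.0                   # time of the last refill, seconds

    def _refill(self, now):
        # Add tokens for the time elapsed since the last update, capped at the burst size.
        elapsed = now - self.last
        self.tokens = min(self.burst_bytes, self.tokens + elapsed * self.rate_bps / 8)
        self.last = now

    def set_rate(self, rate_bps, now):
        # Bandwidth on Demand: credit tokens at the old rate up to `now`,
        # then apply the new rate from this instant onward.
        self._refill(now)
        self.rate_bps = rate_bps

    def conforms(self, packet_bytes, now):
        # True if the packet may leave at `now` (its tokens are consumed).
        # A shaper keeps a non-conforming packet queued and retries later;
        # a policer would drop it instead.
        self._refill(now)
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False
```

For example, at 8,000 bit/s (1,000 bytes/s) an emptied 1,500-byte bucket needs 1.5 s to refill; after `set_rate(80_000, now)` the same refill takes 0.15 s. Only the rate parameter of the customer's interface changes, which is why a controller can adjust it on demand without touching the underlay.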

STOP READING AND START DOING!

With Corsa’s true network hardware virtualization, virtual switching and routing can be achieved at scale, enabling programmable, on-demand services for operators and their customers.

WEBINAR

Join us for the live webinar at 10am PDT on June 22 to see how networks can be built using true network hardware virtualization and to learn the specific use cases it benefits. The webinar will outline the attributes of open, programmable SDN switching and routing platforms that are needed, especially at scale, and in the process dispel the notion of ‘the controller’ by discussing how open SDN applications can be used to control the virtualized instances.