The technology landscape is changing fast. The devices we carry in our pockets are more powerful than the PCs we had some 20 years ago, and together they continuously churn out huge amounts of data that travels between data centers (aka the cloud) and our mobile devices. The cloud as we know it today is destined to change, too, and that evolution is reshaping market dynamics.

As Peter Levine, general partner at Andreessen Horowitz, said during the Open Networking Summit (ONS), cloud computing is not going to be here forever. It will change and morph; he believes the industry moves in cycles between centralized and distributed computing. He said that the cloud computing model would disaggregate in the not-too-distant future, taking us back to a world of distributed computing.

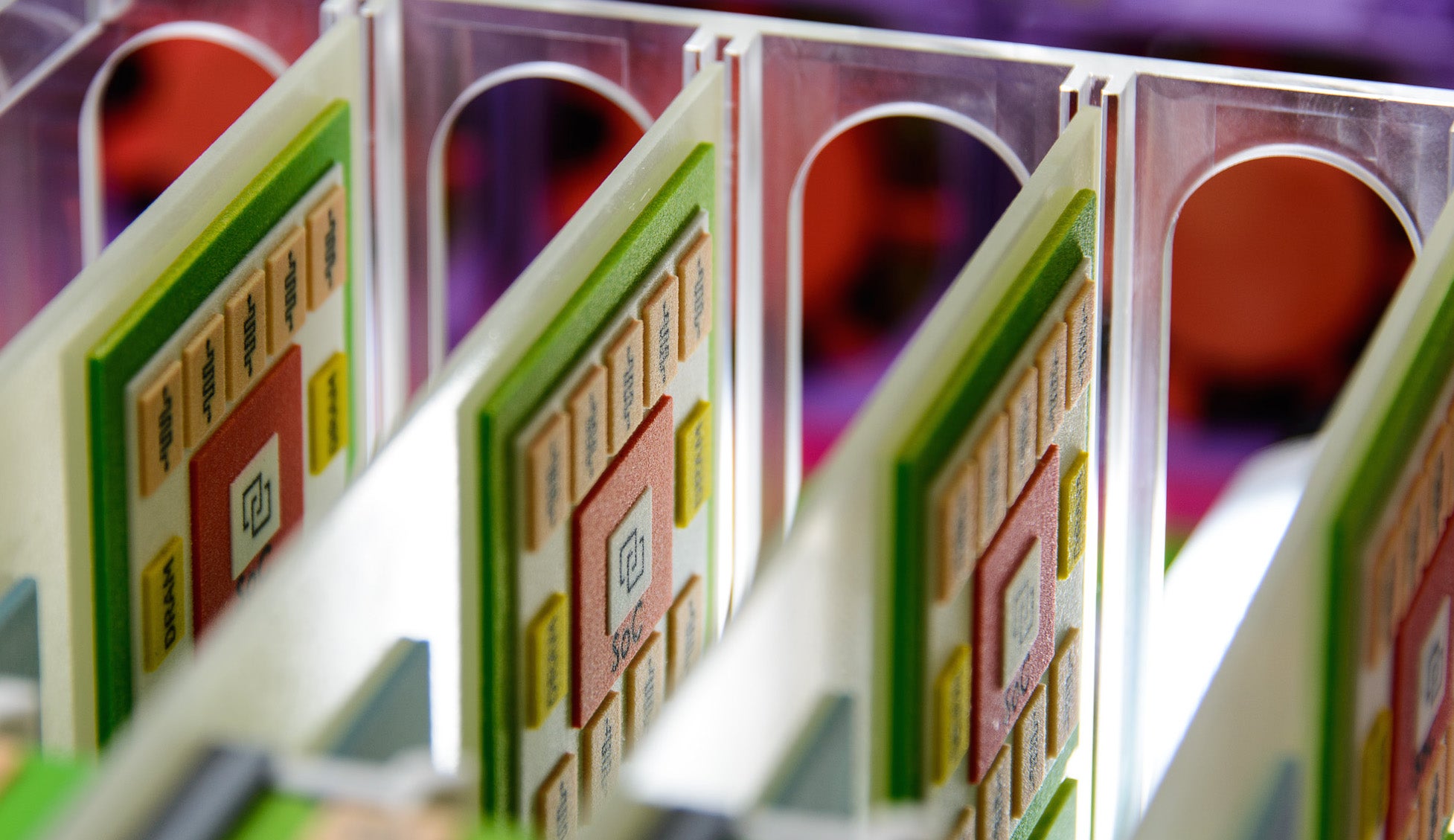

That metamorphosis means we will need a new class of computing devices, and perhaps a new approach to computers altogether. That is something Hewlett Packard Enterprise (HPE) has been working on. Back in 2014, HP (now HPE) introduced the concept of The Machine, which took a unique approach built around memristors.

HPE’s page for The Machine explains: “The Machine puts the data first. Instead of processors, we put memory at the core of what we call ‘Memory-Driven Computing.’ Memory-Driven Computing collapses the memory and storage into one vast pool of memory called universal memory. To connect the memory and processing power, we’re using advanced photonic fabric. Using light instead of electricity is key to rapidly accessing any part of the massive memory pool while using much less energy.”

Open Source at the Core

Yesterday, HPE announced that it’s bringing The Machine to the open source world. The company is inviting the open source community to collaborate on its largest and most notable research project, which aims to reinvent the computer architecture on which all computers have been built for the past 60 years.

Bdale Garbee, a Linux veteran, HPE Fellow in the company’s Office of the CTO, and member of The Linux Foundation advisory board, told me in an interview that what’s truly remarkable about the announcement is that this is the first time a company has gone fully open source with genuinely revolutionary technologies that have the potential to change the world.

“As someone who has been an open source guy for a really long time, I am immensely excited. It represents a really different way of thinking about engagement with the open source world much earlier in the life cycle of a corporate research and development initiative than anything I have ever been near in the past,” said Garbee.

The Machine is a major shift from computing as we know it, which also demands a very different approach to software. Such transformations are not new, though; we have witnessed many of them, most notably the shift from spinning hard drives to solid-state storage.

“What The Machine does with the intersection of very large low-cost, low-powered, fast non-volatile memory and chip level photonics is it allows us to be thinking in terms of the storage in a very memory driven computing model,” said Garbee.

HPE is doing a lot of research internally, but it also wants the broader open source community involved at a very early stage to help find solutions to these new problems.

Garbee said that opening up at an early stage lets people work out what is different in a Memory-Driven Computing model, where a large fabric-attached storage array talks to potentially heterogeneous processing elements over a photonically interconnected fabric.

HPE plans to release more code as open source over time to encourage community engagement. In conjunction with this announcement, HPE has made a few developer tools available on GitHub. “So one of the things that we are releasing is the Fabric Attached Memory Emulation toolkit that allows users to explore the new architectural paradigm. There are some tools for emulating the performance of systems that are built around this kind of fabric attached memory,” said Garbee.

The other three tools include:

- Fast optimistic engine for data unification services: A completely new database engine that speeds up applications by taking advantage of a large number of CPU cores and non-volatile memory (NVM).
- Fault-tolerant programming model for non-volatile memory: Adapts existing multi-threaded code to store and use data directly in persistent memory. Provides simple, efficient fault-tolerance in the event of power failures or program crashes.
- Performance emulation for non-volatile memory bandwidth: A DRAM-based performance emulation platform that leverages features available in commodity hardware to emulate different latency and bandwidth characteristics of future byte-addressable NVM technologies.

HPE said in a statement that these tools let existing communities take advantage of Memory-Driven Computing, which the company says is leading to breakthroughs in machine learning, graph analytics, event processing, and correlation.

Linux First

Garbee told me that “Linux is the primary operating system that we are targeting with The Machine.”

Garbee recalled that HPE CTO Martin Fink made a fundamental decision a couple of years ago to open up the research agenda and to talk very publicly about what HP was trying to accomplish with the various research initiatives that would come together to form The Machine. The company’s announcement solidifies that commitment to open source.

Fink’s approach to opening up should not surprise anyone. Fink was the first vice president of Linux and open source at HP, at the same time that Garbee served as HP’s open source and Linux chief technologist. Garbee said that Fink understands very well how software from the open source world is collaboratively developed and maintained. “He and I have a long history of engagement, and we ended up influencing each other’s strategic thinking,” said Garbee.

HPE has an internal team working hard to enable the various technology elements of The Machine. The team is also actively engaging with the Linux kernel community on key areas of development needed to support The Machine. “We need better support for large non-volatile memories that look more like memory and less like storage devices. There will be a number of things coming out as we move forward in the process of enabling The Machine hardware,” said Garbee.

Beyond Linux

Another interesting area for The Machine, beyond Linux, is databases. The Machine represents a transition from things that look like storage devices (a file system on rotating media) to something that looks like directly accessible memory. That memory can be mapped into a process in blocks as large as the process and the instruction set architecture allow. This transition calls for a new way of thinking about how data is accessed, and about where the bottlenecks now lie.
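To make the storage-versus-memory distinction concrete, here is a minimal sketch on ordinary hardware, not on The Machine. It reads the same record from a file once through the conventional file API (seek, then copy bytes into a buffer) and once through a memory mapping, where the bytes are simply indexed like an array, and then updates a record in place through the mapping. It uses only Python’s standard `mmap` module; the function names and the record layout are hypothetical, and a page-cache-backed `mmap` is only an analogy for fabric-attached non-volatile memory, not an HPE API.

```python
import mmap
import os
import tempfile


def read_record_storage_style(path: str, offset: int, length: int) -> bytes:
    """Storage-style access: seek, then copy bytes through the file API."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(length)


def read_record_memory_style(path: str, offset: int, length: int) -> bytes:
    """Memory-style access: map the file and index it like a byte array."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file (the file must be non-empty).
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mem:
            return mem[offset:offset + length]


def write_record_memory_style(path: str, offset: int, data: bytes) -> None:
    """Update bytes in place through the mapping, with no write() call."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_WRITE) as mem:
            # Slice assignment must keep the same length as the slice.
            mem[offset:offset + len(data)] = data
            # Ask the OS to push the dirty pages back to the backing file.
            mem.flush()


if __name__ == "__main__":
    # Hypothetical fixed-width records separated by '|'.
    path = os.path.join(tempfile.mkdtemp(), "records.bin")
    with open(path, "wb") as f:
        f.write(b"record-A|record-B|record-C")

    print(read_record_storage_style(path, 9, 8))  # b'record-B'
    write_record_memory_style(path, 9, b"RECORD-B")
    print(read_record_memory_style(path, 9, 8))   # b'RECORD-B'
```

The memory-style functions never call `read()` or `write()` on the data itself: once the region is mapped, records are addressed with ordinary slicing, which is the shift in access pattern described above. On today’s systems the mapping is still backed by a file and the page cache, so the explicit `flush()` is needed for durability.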

“One of our research initiatives has been around developing a faster optimized engine for data unification that ends up looking like a particular kind of database, and we are very interested in having instant feedback from the open source community,” said Garbee. As we start to bring these new capabilities to the marketplace with The Machine, there is an opportunity to again rethink exactly how this stuff should work, he said.

HPE teams have been working with existing database and big data communities. “However, there are some specific chunks of code that have come from something closer to pure research, and we will be releasing that work to have a look and work out what the next steps are going to be,” said Garbee.

To date, The Machine is Hewlett Packard Labs’ biggest project, but even bigger is HPE’s decision to make it open and powered by Linux. “It’s a change in behavior from anything that I can recall happening in this company before,” said Garbee.