Last week, you may recall, we looked at calculating network addresses with ipcalc. Now, dear friends, it is my pleasure to introduce you to ipv6calc, the excellent IPv6 address manipulator and query tool by Dr. Peter Bieringer. ipv6calc is a little thing; on Ubuntu /usr/bin/ipv6calc is about 2MB, yet it packs in a ton of functionality.

Here are some of ipv6calc’s features:

- IPv4 assignment databases (ARIN, IANA, APNIC, etc.)

- IPv6 assignment databases

- Address and logfile anonymization

- Compression and expansion of addresses

- Query addresses for geolocation, registrar, address type

- Multiple input and output formats

The ipv6calc package includes several commands. We’re looking at the ipv6calc command in this article; the package also provides ipv6calcweb and mod_ipv6calc for websites, the ipv6logconv log converter, and the ipv6logstats log statistics generator.

If your Linux distribution’s package isn’t compiled with all options, it’s easy to build ipv6calc yourself by following the instructions on The ipv6calc Homepage.

One useful feature it does not include is a subnet calculator. We’ll cover subnet calculation in a future article.

Run ipv6calc -vv to see a complete features listing. Refer to man ipv6calc and The ipv6calc Homepage to learn all the command options.

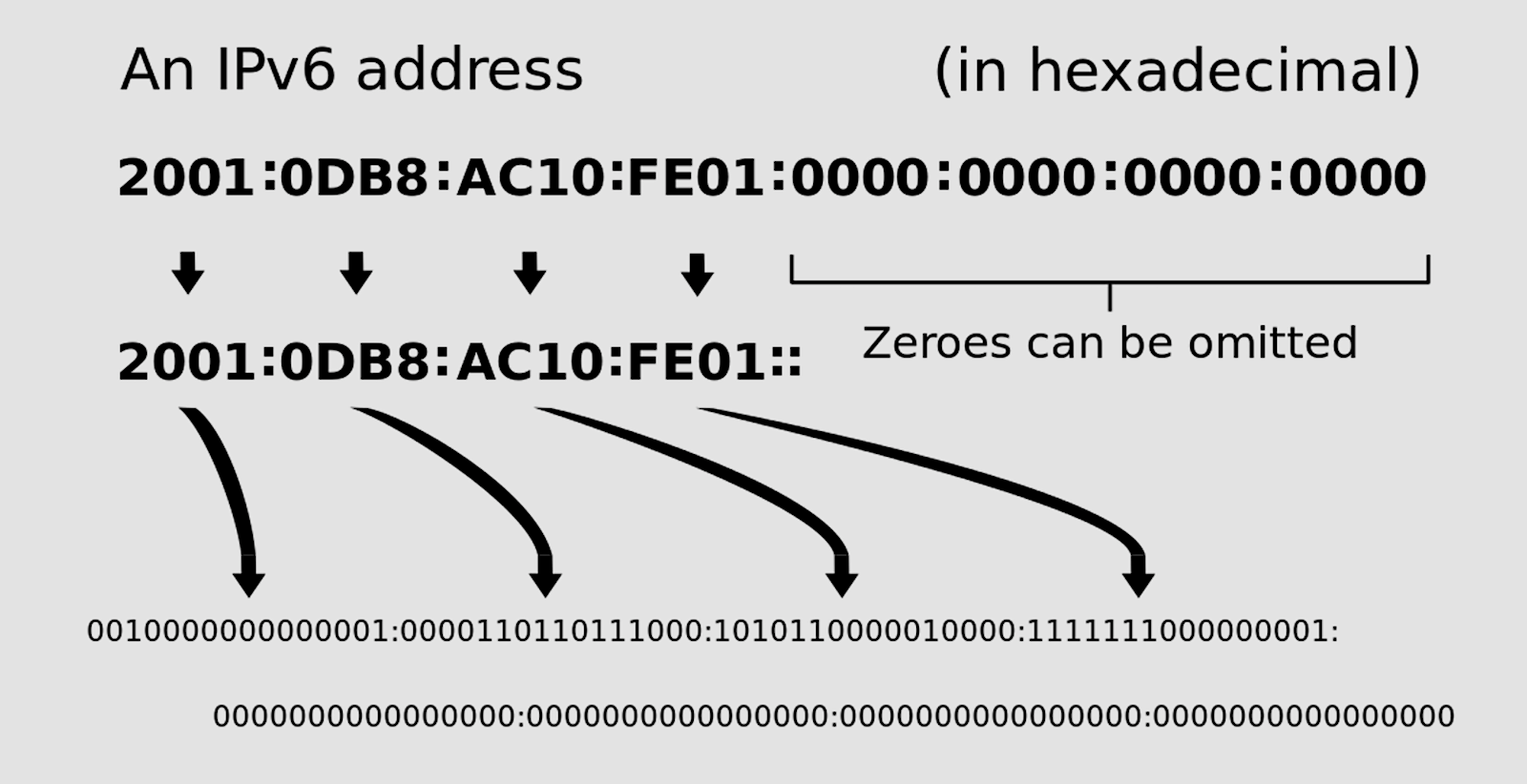

Compression and Decompression

Remember how we can compress those long IPv6 addresses by condensing the zeroes? ipv6calc does this for you:

$ ipv6calc --addr2compaddr 2001:0db8:0000:0000:0000:0000:0000:0001

2001:db8::1

You might recall from Practical Networking for Linux Admins: Real IPv6 that the 2001:0DB8::/32 block is reserved for documentation (RFC 3849). You can also uncompress IPv6 addresses:

$ ipv6calc --addr2uncompaddr 2001:db8::1

2001:db8:0:0:0:0:0:1

Uncompress it completely with the --addr2fulluncompaddr option:

$ ipv6calc --addr2fulluncompaddr 2001:db8::1

2001:0db8:0000:0000:0000:0000:0000:0001
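If you want to cross-check these conversions in a script, Python’s standard ipaddress module offers comparable views of an address. This is a minimal sketch and not part of ipv6calc; the variable names are illustrative. The module has no attribute for the eight-groups-without-leading-zeroes form that --addr2uncompaddr prints, so the sketch builds that one by hand.

```python
import ipaddress

addr = ipaddress.IPv6Address("2001:0db8:0000:0000:0000:0000:0000:0001")

# Shortest form, comparable to --addr2compaddr
compressed = addr.compressed

# All eight groups with leading zeroes, comparable to --addr2fulluncompaddr
full = addr.exploded

# Eight groups without leading zeroes, comparable to --addr2uncompaddr
# (built by hand; ipaddress has no attribute for this form)
uncompressed = ":".join(format(int(group, 16), "x") for group in full.split(":"))

print(compressed)
print(uncompressed)
print(full)
```

The three printed lines should correspond to the three ipv6calc outputs shown above.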

Anonymizing Addresses

Anonymize any address this way:

$ ipv6calc --action anonymize 2001:db8::1

No input type specified, try autodetection...found type: ipv6addr

No output type specified, try autodetection...found type: ipv6addr

2001:db8::9:a929:4291:c02d:5d15

If you get tired of “no input type” messages, you can specify the input and output types:

$ ipv6calc --in ipv6addr --out ipv6addr --action anonymize 2001:db8::1

2001:db8::9:a929:4291:c02d:5d15

Or use the “quiet” option to suppress the messages:

$ ipv6calc -q --action anonymize 2001:db8::1

2001:db8::9:a929:4291:c02d:5d15

Getting Information

What with all the different address types and the sheer size of IPv6 addresses, it’s nice to have ipv6calc tell you all about a particular address:

$ ipv6calc -qi 2001:db8::1

Address type: unicast, global-unicast, productive, iid, iid-local

Registry for address: reserved(RFC3849#4)

Address type has SLA: 0000

Interface identifier: 0000:0000:0000:0001

Interface identifier is probably manual set

$ ipv6calc -qi fe80::b07:5c7e:2e69:9d41

Address type: unicast, link-local, iid, iid-global, iid-eui64

Registry for address: reserved(RFC4291#2.5.6)

Interface identifier: 0b07:5c7e:2e69:9d41

EUI-64 identifier: 09:07:5c:7e:2e:69:9d:41

EUI-64 identifier is a global unique one

One of these days, I must write up a glossary of all of these crazy terms, like EUI-64 identifier. EUI stands for Extended Unique Identifier, and an EUI-64 is a 64-bit identifier; for IPv6 it is usually built from an interface’s 48-bit MAC address. A modified form of it serves as the interface identifier, the low 64 bits of an address, in stateless address autoconfiguration. The format was described in RFC 2373 and is now specified in RFC 4291, Appendix A. Note how ipv6calc helpfully provides the relevant RFCs.
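The relationship between the interface identifier and the EUI-64 identifier in the fe80:: output above can be reproduced in a few lines. As a sketch, assuming the modified EUI-64 rule from RFC 4291 Appendix A (the universal/local bit, 0x02 in the first octet, is inverted), the following Python takes the low 64 bits of the address and flips that bit. The function name iid_to_eui64 is hypothetical, and this is not necessarily how ipv6calc does it internally.

```python
import ipaddress

def iid_to_eui64(address: str) -> str:
    """Return the EUI-64 that corresponds to an address's interface identifier."""
    # The interface identifier is the low 64 bits of the address.
    iid = int(ipaddress.IPv6Address(address)) & ((1 << 64) - 1)
    octets = bytearray(iid.to_bytes(8, "big"))
    # Invert the universal/local bit (0x02 in the first octet).
    octets[0] ^= 0x02
    return ":".join(f"{octet:02x}" for octet in octets)

eui64 = iid_to_eui64("fe80::b07:5c7e:2e69:9d41")
print(eui64)
```

The first octet changes from 0b to 09, which is the only difference between the two identifiers in the ipv6calc output.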

This example queries Google’s public DNS IPv6 address, showing information from the ARIN registry:

$ ipv6calc -qi 2001:4860:4860::8844

Address type: unicast, global-unicast, productive, iid, iid-local

Country Code: US

Registry for address: ARIN

Address type has SLA: 0000

Interface identifier: 0000:0000:0000:8844

Interface identifier is probably manual set

GeoIP country name and code: United States (US)

GeoIP database: GEO-106FREE 20160408 Bu

Built-In database: IPv6-REG:AFRINIC/20150904 APNIC/20150904 ARIN/20150904 IANA/20150810 LACNIC/20150904 RIPENCC/20150904
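For a rough cross-check of the address types reported above without any registry or GeoIP data, Python’s standard ipaddress module exposes a few boolean properties. This sketch is only loosely comparable to ipv6calc -i: it knows nothing about registries, countries, or interface identifiers, and the helper name classify is hypothetical.

```python
import ipaddress

def classify(address: str) -> dict:
    """Summarize a few address properties from the standard library."""
    addr = ipaddress.IPv6Address(address)
    return {
        "link_local": addr.is_link_local,
        "global": addr.is_global,
        "multicast": addr.is_multicast,
    }

for sample in ("2001:db8::1", "fe80::b07:5c7e:2e69:9d41", "2001:4860:4860::8844"):
    print(sample, classify(sample))
```

The two tools label things differently: Python treats the 2001:db8::/32 documentation prefix as non-global, whereas ipv6calc reports it as global-unicast and notes the reservation on the registry line.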

You can filter these queries in various ways:

$ ipv6calc -qi --mrmt GEOIP 2001:4860:4860::8844

GEOIP_COUNTRY_SHORT=US

GEOIP_COUNTRY_LONG=United States

GEOIP_DATABASE_INFO=GEO-106FREE 20160408 Bu

$ ipv6calc -qi --mrmt IPV6_COUNTRYCODE 2001:4860:4860::8844

IPV6_COUNTRYCODE=US

Run ipv6calc -vh to see a list of feature tokens and which ones are installed.

DNS PTR Records

Now we’ll use Red Hat in our examples. To find the IPv6 address of a site, you can use good old dig to query the AAAA records:

$ dig AAAA www.redhat.com

[...]

;; ANSWER SECTION:

e3396.dscx.akamaiedge.net. 20 IN AAAA 2600:1409:a:3a2::d44

e3396.dscx.akamaiedge.net. 20 IN AAAA 2600:1409:a:397::d44

And now you can run a reverse lookup:

$ dig -x 2600:1409:a:3a2::d44 +short

g2600-1409-r-4.4.d.0.0.0.0.0.0.0.0.0.0.0.0.0.2.a.3.0.a.0.0.0.deploy.static.akamaitechnologies.com.

g2600-1409-000a-r-4.4.d.0.0.0.0.0.0.0.0.0.0.0.0.0.2.a.3.0.deploy.static.akamaitechnologies.com.

As you can see, DNS is quite complex these days thanks to cloud technologies, load balancing, and all those newfangled tools that datacenters use.

There are many ways to create those crazy long PTR strings for your own DNS records. ipv6calc will do it for you:

$ ipv6calc -q --out revnibbles.arpa 2600:1409:a:3a2::d44

4.4.d.0.0.0.0.0.0.0.0.0.0.0.0.0.2.a.3.0.a.0.0.0.9.0.4.1.0.0.6.2.ip6.arpa.
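The reverse-nibble name is mechanical to derive: expand the address to all 32 hex digits, reverse them, separate them with dots, and append ip6.arpa. As a cross-check outside ipv6calc, Python’s standard ipaddress module provides this through the reverse_pointer attribute. This is a minimal sketch; the variable names are illustrative.

```python
import ipaddress

addr = ipaddress.IPv6Address("2600:1409:a:3a2::d44")

# reverse_pointer omits the trailing dot that marks a fully qualified name,
# so it is appended here to match zone-file style.
ptr_name = addr.reverse_pointer + "."
print(ptr_name)
```

The printed name should match the ipv6calc output above.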

If you want to dig deeper into IPv6, try reading the RFCs. Yes, they can be dry, but they are authoritative. I recommend starting with RFC 8200, Internet Protocol, Version 6 (IPv6) Specification.
