When last we met, we reviewed essential TCP/IP basics for Linux admins in Practical Networking for Linux Admins: TCP/IP. Here, we will review network and host addressing and find out whatever happened to IPv6.

IPv4 Ran Out Already

Once upon a time, alarms were sounding everywhere: We are running out of IPv4 addresses! Run in circles, scream and shout! So, what happened? We ran out. IPv4 Address Status at ARIN says “ARIN’s free pool of IPv4 address space was depleted on 24 September 2015. As a result, we no longer can fulfill requests for IPv4 addresses unless you meet certain policy requirements…” Most of us get our IPv4 addresses from our Internet service providers (ISPs), so our ISPs are duking it out for new address blocks.

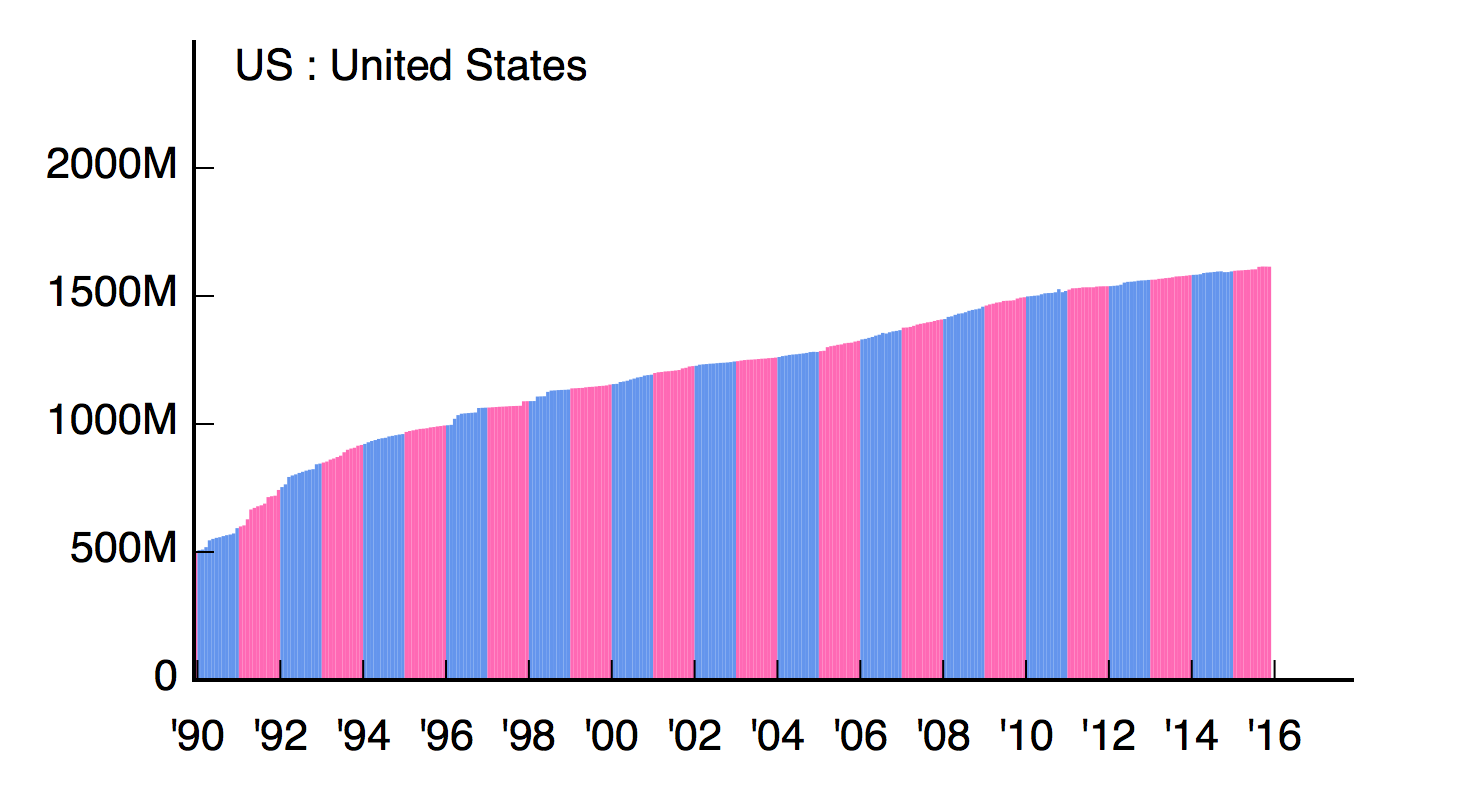

What do we do about it? Start with bitter laughter, because service providers and device manufacturers are still not well-prepared, and IPv6 support is incomplete even though they have had more than a decade to implement it. This is not surprising, given how many businesses think computing is like office furniture: buy it once and use it forever (except, of course, for the executive team, who get all the shiny new doodads while we worker bees get stuck with leftovers). Google, who sees all and mines all, has some interesting graphs on IPv6 adoption. Overall adoption is about 18 percent, with the United States at 34 percent and Belgium leading at 48 percent.

What can we Linux nerds do about this? Linux, of course, has had IPv6 support for ages. The first stop is your ISP; visit Test IPv6 to learn their level of IPv6 support. If they are IPv6-ready, they will assign you a block of addresses, and then you can spend many fun hours roaming the Internet in search of sites that can be reached over IPv6.

IPv6 Addressing

IPv6 addresses are 128-bit, which means we have a pool of 2^128 addresses to use. That is 340,282,366,920,938,463,463,374,607,431,768,211,456, or 340 undecillion, 282 decillion, 366 nonillion, 920 octillion, 938 septillion, 463 sextillion, 463 quintillion, 374 quadrillion, 607 trillion, 431 billion, 768 million, 211 thousand and 456 addresses. Which should be just about enough for the Internet of Insecure Intrusive Gratuitously Connected Things.

In contrast, 32-bit IPv4 supplies 2^32 addresses, or just under 4.3 billion. Network address translation (NAT) is the only thing that has kept IPv4 alive this long. NAT is why most homes and small businesses get by with one public IPv4 address serving large private LANs. NAT rewrites and forwards your LAN addresses so that one lonely public address can serve multitudes of hosts in private address spaces. It’s a clever hack, but it adds complexity to firewall rules and services, and in my not-quite-humble opinion that ingenuity would have been better invested in moving forward instead of clinging to inadequate legacies. Of course, that’s a social problem rather than a technical problem, and social problems are the most challenging.
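For a sense of scale, both pool sizes fall straight out of the address width. This is a quick Python sketch using nothing but integer arithmetic; the variable names are mine, purely for illustration.

```python
# Address pool sizes follow directly from the address width in bits.
ipv4_total = 2 ** 32
ipv6_total = 2 ** 128

print(f"IPv4: {ipv4_total:,}")
print(f"IPv6: {ipv6_total:,}")

# How many entire IPv4 address spaces fit inside the IPv6 pool (2^96).
ipv4_internets = ipv6_total // ipv4_total
print(f"IPv4-sized pools inside IPv6: {ipv4_internets:,}")
```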

IPv6 addresses are long: eight groups of four hexadecimal digits, 16 bits per group. This is the IPv6 equivalent of the IPv4 loopback address, 127.0.0.1:

0000:0000:0000:0000:0000:0000:0000:0001

Fortunately, there are shortcuts. Leading zeroes in a group can be dropped, so any quad of zeroes condenses into a single zero, like this:

0:0:0:0:0:0:0:1

You can shorten this even further, as one unbroken run of all-zero groups can be replaced with a pair of colons (only once per address, or the result would be ambiguous), so the loopback address becomes:

::1

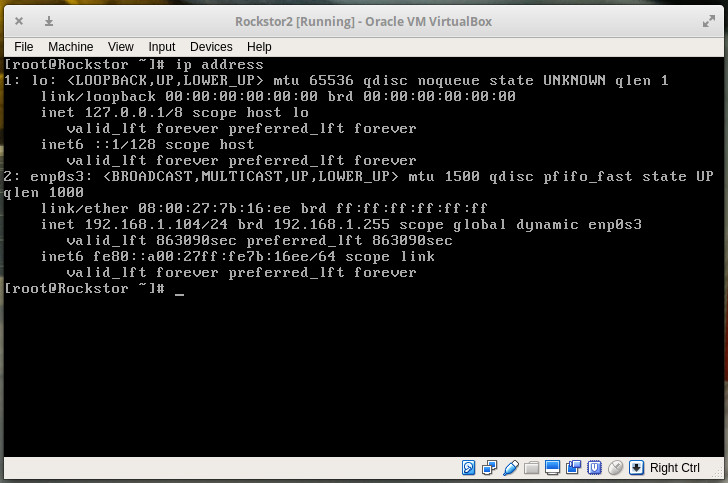

Which you can see on your faithful Linux system with ifconfig:

    $ ifconfig lo
    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host

I know, we’re supposed to use the ip command because ifconfig is deprecated. When ip formats its output as readably as ifconfig then I will consider it.
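If you want to check the zero-shortening rules without squinting, Python's standard `ipaddress` module will compress and expand addresses for you. This is a small sketch, not the only way to do it; the example addresses are the loopback address from above and one from the documentation range.

```python
import ipaddress

# The fully written-out loopback address.
loopback = ipaddress.IPv6Address("0000:0000:0000:0000:0000:0000:0000:0001")
print(loopback.compressed)  # shortest legal form
print(loopback.exploded)    # all eight groups, zero-padded

# A documentation-range address with a run of zero groups in the middle.
addr = ipaddress.IPv6Address("2001:0db8:0000:0000:0000:0000:0000:0001")
print(addr.compressed)

# The double colon may appear only once; a second one is rejected.
double_colon_rejected = False
try:
    ipaddress.IPv6Address("2001:db8::1::2")
except ipaddress.AddressValueError as err:
    double_colon_rejected = True
    print("rejected:", err)
```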

Be Quiet and Drink Your CIDR

Classless Inter-Domain Routing (CIDR) defines how many addresses are in a network block. For the loopback address, ::1/128, that is a single address because it uses all 128 bits. CIDR notation is described as a prefix, which is confusing because it looks like a suffix. But it really is a prefix, because it tells you the bit length of a common prefix of bits, which defines a single block of addresses. Then you have a subnet, and finally the host portion of the address. 2001:0db8::/64 expands to this:

    2001:0db8:0000:0000:0000:0000:0000:0000
    ______________|____|___________________
    network ID     subnet interface address

When your ISP gives you a block of addresses, they control the network ID and you control the rest. With the split shown here (a 48-bit network ID, 16 bits of subnet, and 64 bits of interface address), you get 65,536 subnets of 18,446,744,073,709,551,616 individual addresses each. MediaWiki has a great page with charts that explains all of this, and how allocations are managed, at Range blocks/IPv6.
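The subnet and address counts can be verified with Python's `ipaddress` module. This sketch assumes your ISP hands you a /48 (the 48-bit network ID in the diagram); the `2001:db8::/48` block is a hypothetical allocation borrowed from the documentation range, and real allocations vary by provider.

```python
import ipaddress
from itertools import islice

# Hypothetical /48 allocation (an assumption for illustration).
allocation = ipaddress.IPv6Network("2001:db8::/48")

# The bits between the /48 network ID and the /64 boundary number the subnets.
subnet_count = 2 ** (64 - allocation.prefixlen)

# A single /64 subnet leaves 64 bits for interface addresses.
first_subnet = ipaddress.IPv6Network("2001:db8::/64")
addresses_per_subnet = first_subnet.num_addresses

print(subnet_count)
print(addresses_per_subnet)

# subnets() is a generator, so take only the first few /64 blocks.
first_four = [str(net) for net in islice(allocation.subnets(new_prefix=64), 4)]
print(first_four)
```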

2000::/3 is the global unicast range, or publicly routable addresses. Do not use these for experimentation without blocking them from leaving your LAN. Better yet, don’t use them and move on to the next paragraph.

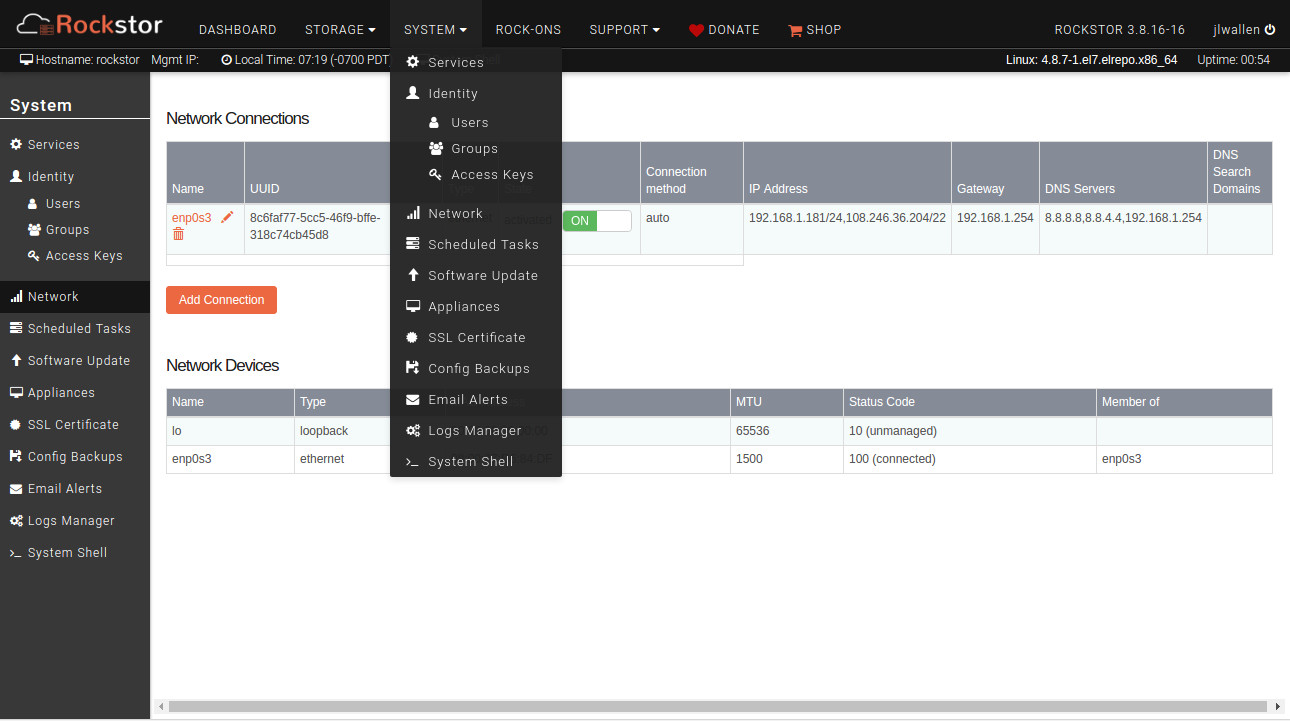

The 2001:0db8::/32 block is reserved for documentation and examples, so use these for testing. This example assigns the first available address to interface enp0s25, which is what Ubuntu calls my eth0 interface:

    # ip -6 addr add 2001:0db8::1/64 dev enp0s25
    $ ifconfig enp0s25
    enp0s25   Link encap:Ethernet  HWaddr d0:50:99:82:e7:2b
              inet6 addr: 2001:db8::1/64 Scope:Global
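Before assigning a test address, it is worth confirming that it really falls in the documentation block and not somewhere else in global unicast space. The sketch below uses Python's `ipaddress` module; `is_documentation` is a helper name I made up, and `2001:db9::1` is just an arbitrary address one step outside the block.

```python
import ipaddress

global_unicast = ipaddress.IPv6Network("2000::/3")
documentation = ipaddress.IPv6Network("2001:db8::/32")

def is_documentation(address: str) -> bool:
    """Return True if the address lies inside 2001:db8::/32."""
    return ipaddress.IPv6Address(address) in documentation

# Numerically, the documentation block sits inside the global unicast range.
doc_inside_global = documentation.subnet_of(global_unicast)
print(doc_inside_global)

print(is_documentation("2001:db8::1"))   # safe for testing
print(is_documentation("2001:db9::1"))   # outside the reserved block
```

Note that `subnet_of` needs Python 3.7 or later.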

Increment up from :1 in hexadecimal: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, a, b, c, d, e, f, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 1a, 1b, and so on.
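If counting in hexadecimal is not your idea of fun, Python can do it for you. In this sketch an integer offset is added to an `IPv6Address` object to get each following address; the starting address is the one assigned above.

```python
import ipaddress

base = ipaddress.IPv6Address("2001:db8::1")

# Adding an integer to an IPv6Address yields the address that many steps on.
# Offsets 0..26 cover ::1 through ::1b.
addresses = [str(base + offset) for offset in range(0x1b)]

for address in addresses:
    print(address)
```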

You can add as many addresses as you like to a single interface. You can ping them from the host they’re on, but other hosts on your LAN won’t reach them until they have a route to that prefix, which normally means you need a router. Next week, we’ll set up routing.

IPcalc

All of these fine hexadecimal addresses are converted from binary. Where does the binary come from? The breath of angels. Or maybe the tears of unicorns, I forget. At any rate, you’re welcome to work these out the hard way, or install ipcalc on your Linux machine, or use any of the nice web-based IP calculators. Don’t be too proud to use these because they’re lifesavers, especially for routing, as we’ll see next week.
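For the curious, here is roughly what the hard way looks like. This Python sketch converts an address to its 128 bits and splits them into the same eight 16-bit groups as the hexadecimal notation; `to_binary_groups` is my own helper name, not part of ipcalc or any library.

```python
import ipaddress

def to_binary_groups(address: str) -> str:
    """Render an IPv6 address as eight colon-separated 16-bit binary groups."""
    value = int(ipaddress.IPv6Address(address))
    bits = format(value, "0128b")  # zero-padded to the full 128 bits
    return ":".join(bits[i:i + 16] for i in range(0, 128, 16))

binary = to_binary_groups("2001:db8::1")
print(binary)
```

Each group of four hex digits maps to sixteen binary digits, so 2001 becomes 0010000000000001 and so on down the line.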
