NoSQL databases are one of those fun topics where people get all excited because they’re cool and new. But they’re not really new; they’re different from SQL databases, and they have different use cases. NoSQL is not going to make world peace or give you your own private holodeck but, aside from those deficiencies, it is pretty cool.

What is NoSQL?

NoSQL is a fine multi-purpose term with multiple meanings, thanks to the incurable nerd habit of modifying everything and never being finished. NoSQL means non-SQL, non-relational, distributed, scalable, and not-only-SQL, because many support SQL-type queries. NoSQL-type databases have been around since the olden Unix days, although naturally some people act like they’re a brand-new awesome sauce Web 2.0 thingy. I don’t even know what Web 2.0 is, although I like it for not having blink tags. At any rate, NoSQL and SQL databases have some things in common, and some differences. NoSQL is not a replacement for SQL, and it is not better or worse, but is made for different tasks. The distinction between the two is blurring as they both evolve, and perhaps someday will not be very meaningful.

Traditional RDBMS

Some examples of traditional relational database management systems are MySQL, MariaDB, and PostgreSQL. We know and love these old-time RDBMS because they’re solid and reliable, are proven for ensuring data integrity, and we’re used to them.

But our beloved old-timers don’t fit well into our modern world of fabulously complex and high-speed datacenters. They have formal schemas defining tables and field types, and may also have indexes, primary keys, triggers, and stored procedures. You have to design your schema before you can start using your DB. Because of this rigid structure, adding a new data type is a significant operation. They don’t scale, cluster, or replicate very easily. You can still use them in your lovely cloud or distributed datacenter, but many NoSQL DBs are designed from the start for the fast-paced anarchic world of cloud and distributed computing.

NoSQL DBs also scale down nicely. When you don’t want all the complexity and overhead of MariaDB or PostgreSQL, or want an embedded DB, try Couchbase Lite, TinyDB, or good old Berkeley DB.

NoSQL Types

NoSQL DBs have flexible schema, which is both a lovely feature and a pitfall. It is easier than wrangling inflexible tables, although it doesn’t mean you don’t have to care about schema, because you do. Sensible design is always better than chaos.
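Caring about schema in a schemaless DB usually means checking documents in your own code. Here is a minimal sketch in plain Python, assuming a made-up rule that every record needs a title (a string) and an author (a list). The names REQUIRED_FIELDS and validate_book are hypothetical, not part of any database API.

```python
# Hypothetical application-side schema check; field names are illustrative only.
REQUIRED_FIELDS = {"title": str, "author": list}

def validate_book(doc):
    """Return a list of problems; an empty list means the document passes."""
    problems = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in doc:
            problems.append(f"missing field: {field}")
        elif not isinstance(doc[field], expected_type):
            problems.append(f"{field} should be {expected_type.__name__}")
    return problems

# Extra fields such as "pages" are allowed; only the required ones are checked.
good = {"title": "Example Book", "author": ["A. Writer"], "pages": 216}
bad = {"title": "Untitled", "author": "someone"}

print(validate_book(good))
print(validate_book(bad))
```

The database will happily store both records; it is the application that decides the second one is a mistake.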

There are over 200 NoSQL-type databases, and these fall into some general categories according to their data models.

Key:value stores use a hash table of key/value pairs.

Document-based stores use actual text documents, typically JSON or something like it.

Column-based stores organize your data in columns, and each storage block contains data from only one column.

Graph-based DBs store data as nodes and the relationships between them, and are very fast at querying and retrieving diverse, interconnected data.
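To make the four models a little more concrete, here is a rough sketch of similar data shaped each way, using nothing but plain Python structures. All keys, names, and values are invented for illustration, and real databases add storage engines, indexes, and query languages on top of these shapes.

```python
# Key:value: an opaque value looked up by its key in a hash table.
key_value = {"book:1:title": "Example Book", "book:1:pages": "216"}

# Document: a self-describing record that can nest lists and sub-documents.
document = {"_id": 1, "title": "Example Book", "pages": 216, "tags": ["db", "nosql"]}

# Column: each column's values are kept together; position i belongs to record i.
columns = {"title": ["Example Book", "Another Book"], "pages": [216, 300]}

# Graph: nodes, plus labelled edges that connect them.
nodes = {"book:1", "author:7"}
edges = [("author:7", "WROTE", "book:1")]

print(key_value["book:1:title"])
print(document["tags"][0])
print(sum(columns["pages"]))
print([dst for src, rel, dst in edges if src == "author:7" and rel == "WROTE"])
```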

Document store DBs include CouchDB, MongoDB, and SequoiaDB. Instead of tables, these use JSON-like field-value pair documents, actual text documents that you type and look at, and documents can be organized into collections. These examples show a little bit about how MongoDB organizes data.

    {
      title: "MongoDB: The Definitive Guide",
      author: [ "Kristina Chodorow", "Mike Dirolf" ],
      published_date: ISODate("2010-09-24"),
      pages: 216,
      language: "English",
      publisher: {
        name: "O'Reilly Media",
        founded: 1980,
        location: "CA"
      }
    }

There is not a rigid number of fields; you could omit any of the fields in the example, or add more. The publisher information is an embedded sub-document. If the publisher information is going to be re-used a lot, use a reference to make it available to multiple entries.

    {
      _id: "oreilly",
      name: "O'Reilly Media",
      founded: 1980,
      location: "CA"
    }

    {
      _id: 123456789,
      title: "MongoDB: The Definitive Guide",
      author: [ "Kristina Chodorow", "Mike Dirolf" ],
      published_date: ISODate("2010-09-24"),
      pages: 216,
      language: "English",
      publisher_id: "oreilly"
    }
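With a reference instead of an embedded sub-document, something has to look up the publisher when you want the full record. The sketch below shows that lookup in plain Python, with dicts standing in for the two collections. It is not MongoDB API code, and the helper name with_publisher is made up for this example.

```python
# In-memory stand-ins for the two collections above; not MongoDB calls.
publishers = {
    "oreilly": {"_id": "oreilly", "name": "O'Reilly Media",
                "founded": 1980, "location": "CA"},
}
books = [
    {"_id": 123456789, "title": "MongoDB: The Definitive Guide",
     "author": ["Kristina Chodorow", "Mike Dirolf"], "pages": 216,
     "language": "English", "publisher_id": "oreilly"},
]

def with_publisher(book, publishers):
    """Return a copy of book with the referenced publisher embedded."""
    resolved = dict(book)  # shallow copy, so the stored record is untouched
    publisher_id = resolved.pop("publisher_id", None)
    resolved["publisher"] = publishers.get(publisher_id)  # None if unknown
    return resolved

book = with_publisher(books[0], publishers)
print(book["title"], "is published by", book["publisher"]["name"])
```

The trade-off is the usual one: embedding keeps everything in one read, while a reference stores the publisher once and costs a second lookup.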

Key:value NoSQLs include Redis, Riak, and our old friend Berkeley DB, which has been around since forever. Berkeley DB has long served as a storage back end for the Cyrus IMAP server, Exim, the Evolution mail client, OpenLDAP, and Bogofilter.

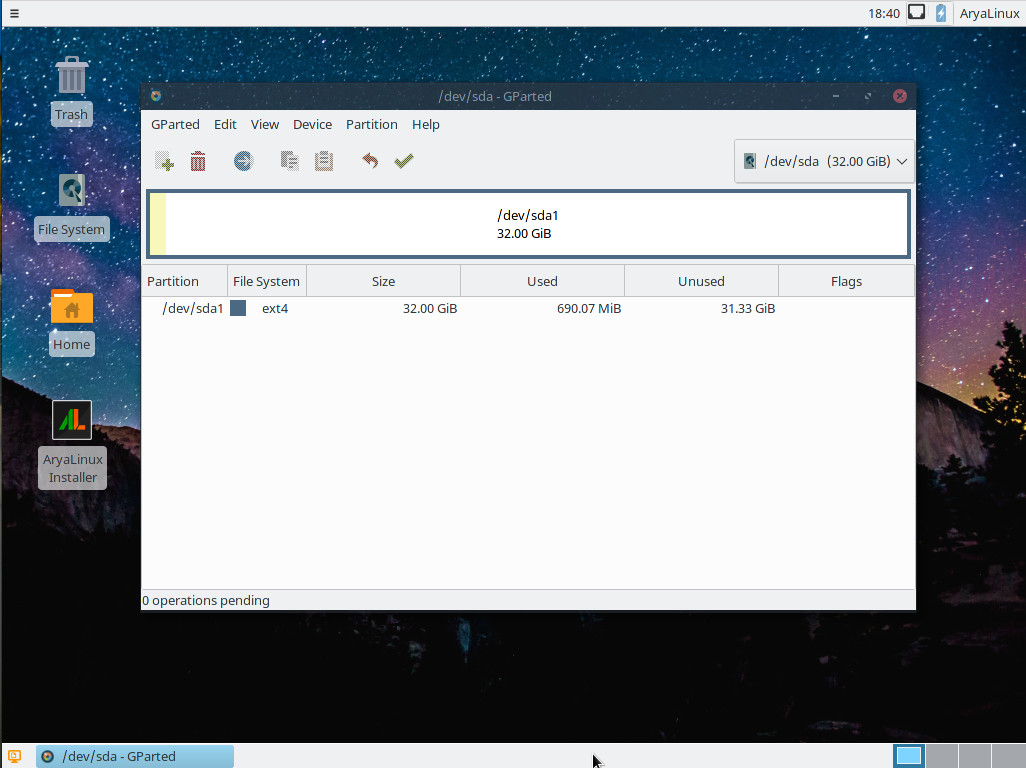

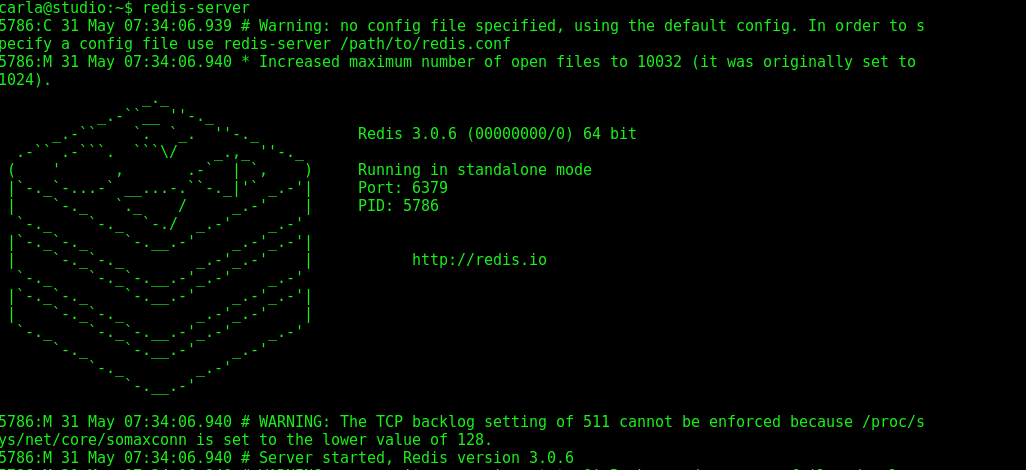

Redis also represents another type of NoSQL database, in-memory. It is very fast because it keeps its data in memory, with configurable options for writing to disk (which, of course, is much slower). Redis is replacing Memcached as a distributed in-memory object caching system on dynamic web sites. If running in memory sounds scary, consider the use case: dynamic web sites delivering all kinds of transient data. If Redis loses some of that data and the user has to click an extra time, no biggie. Start it by running redis-server; this is how it looks after installation:

    $ redis-server
    5786:C 30 May 07:34:06.939 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf
    5786:M 30 May 07:34:06.940 * Increased maximum number of open files to 10032 (it was originally set to 1024).
                    _._
               _.-``__ ''-._
          _.-``    `.  `_.  ''-._           Redis 3.0.6 (00000000/0) 64 bit
      .-`` .-```.  ```\/    _.,_ ''-._
     (    '      ,       .-`  | `,    )     Running in standalone mode
     |`-._`-...-` __...-.``-._|'` _.-'|     Port: 6379
     |    `-._   `._    /     _.-'    |     PID: 5786
      `-._    `-._  `-./  _.-'    _.-'
     |`-._`-._    `-.__.-'    _.-'_.-'|
     |    `-._`-._        _.-'_.-'    |           http://redis.io
      `-._    `-._`-.__.-'_.-'    _.-'
     |`-._`-._    `-.__.-'    _.-'_.-'|
     |    `-._`-._        _.-'_.-'    |
      `-._    `-._`-.__.-'_.-'    _.-'
          `-._    `-.__.-'    _.-'
              `-._        _.-'
                  `-.__.-'

Open a second terminal and run redis-cli. What you type follows the $ or 127.0.0.1:6379> prompt; the other lines are replies from Redis.

    $ redis-cli
    127.0.0.1:6379> SET my:name "carla schroder"
    OK
    127.0.0.1:6379> GET my:name
    "carla schroder"
    127.0.0.1:6379>

Type exit to quit. This is more like using a traditional RDBMS, because everything is done with commands and you don’t have nice text documents to look at.
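To see why losing cached data is "no biggie," here is a small sketch of the cache-aside pattern that in-memory stores are typically used for. It is a toy in-process stand-in written in plain Python, not Redis and not a Redis client. The TTLCache class, slow_lookup function, and 30-second expiry are all assumptions made for illustration.

```python
import time

class TTLCache:
    """Tiny in-memory key:value cache with per-key expiry (a stand-in, not Redis)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._data[key] = (value, self._clock() + ttl_seconds)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._data[key]  # expired: behave as if it was never stored
            return None
        return value

def slow_lookup(key):
    """Hypothetical stand-in for the authoritative, slower data source."""
    return f"value-for-{key}"

def fetch(cache, key, ttl_seconds=30):
    """Cache-aside read: try the cache first, fall back to the slow source on a miss."""
    value = cache.get(key)
    if value is None:
        value = slow_lookup(key)
        cache.set(key, value, ttl_seconds)
    return value

cache = TTLCache()
print(fetch(cache, "my:name"))  # miss: filled from slow_lookup
print(fetch(cache, "my:name"))  # hit: served from memory
```

If the cache is wiped, the next read simply takes the slow path once and repopulates it, which is why this kind of data can live in memory without much worry.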

Column store databases include Cassandra and Apache HBase. These store your data in a column format. Why does this matter, you ask? It makes queries and data aggregation easier, because a query that needs only one column reads only that column's storage blocks. With document store and in-memory DBs you have to rely on good program logic to run queries and manipulate your data. Column-store DBs simplify your program logic.
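A rough way to picture the difference is to lay the same records out both ways and add up one field. This sketch uses plain Python lists and dicts with made-up data. It only illustrates the layout idea and says nothing about how Cassandra or HBase actually store bytes on disk.

```python
# The same three records laid out two ways; the values are invented.
rows = [
    {"title": "Book A", "language": "English", "pages": 216},
    {"title": "Book B", "language": "English", "pages": 300},
    {"title": "Book C", "language": "German", "pages": 150},
]

# Column layout: one list per column, aligned by position.
columns = {name: [row[name] for row in rows] for name in rows[0]}

# Row layout: every whole record is visited just to reach its "pages" value.
total_from_rows = sum(row["pages"] for row in rows)

# Column layout: only the "pages" column is read.
total_from_columns = sum(columns["pages"])

print(total_from_rows, total_from_columns)
```

Both totals agree; the difference is how much unrelated data has to be touched to get there.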

When you see those “You may also be interested in…” links on a web site, they are most likely delivered from a graph-based DB. Graph-based DBs do not use SQL because it is too rigid for such fluid queries. There is not yet a standard query language, so each one is its own special flower. Some examples are InfiniteGraph, OrientDB, and Neo4j.
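Here is a minimal sketch of the kind of traversal behind such links, assuming a tiny made-up graph of users connected to the items they viewed. It is plain Python, not the query language of any graph DB, and the function name also_interested_in is hypothetical.

```python
from collections import Counter

# Hypothetical "viewed" edges: user -> set of items. All names are made up.
viewed = {
    "ann": {"redis-book", "mongo-book"},
    "bob": {"redis-book", "neo4j-book"},
    "cat": {"redis-book", "mongo-book", "hbase-book"},
}

def also_interested_in(item, viewed, limit=3):
    """Rank other items by how many users connect them to `item`."""
    counts = Counter()
    for items in viewed.values():
        if item in items:
            counts.update(items - {item})  # follow user -> other items
    # Sort by count descending, then by name, so ties come out in a stable order.
    ranked = sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))
    return [name for name, _ in ranked[:limit]]

print(also_interested_in("redis-book", viewed))
```

A graph DB answers this sort of "two hops away" question natively, where a relational DB would need self-joins.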

So, there you have it, another plethora of choices in an already-overloaded tech world. In a nutshell, NoSQL DBs are great for fast prototyping of new schema, raw speed, and scalability. If you want the most data integrity, such as for financial transactions, stick with RDBMS. Though, again, as the two evolve we’re going to see less-clear boundaries and perhaps someday One DB to Rule Them All.
