Written by Varad Gautam, Consultant Associate Software Engineer at Collabora.

Over the past few weeks, I have been working for Collabora on plumbing DRM format modifier support across a number of components in the graphics stack. This post documents the work and the related consequences/implications.

The need for modifiers

Until recently, the FourCC format code of a buffer provided a nearly comprehensive description of its contents. But as hardware design has advanced, placing the buffers in a non-traditional (nonlinear) fashion has become more important in order to gain performance. A given buffer layout can prove to be more suited for particular use-cases than others, such as tiled formats to improve locality of access and enable fast local buffering.

Alternatively, a buffer may hold compressed data that requires external metadata to decompress before the buffer is used. Just using the FourCC format code then falls short of being able to convey the complete information about how a buffer is placed in memory, especially when the buffers are to be shared across processes or even IP blocks. Moreover, newer hardware generation may add further usage capabilities for a layout.

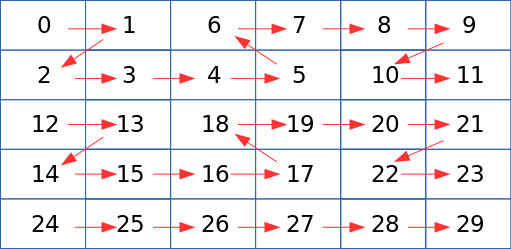

Figure 1. Intel Buffer Tiling Layouts. Memory organization per-tile for two of the Intel-hardware supported buffer tiling layouts. An XMAJOR 4KB tile is stored as an 8×32 WxH array of 16Byte data (row order) while a YMAJOR 4KB tile is laid as 32×8 WxH 16B data (column order).CC-BY-ND from Intel Graphics PRM Vol 5: Memory Views

As an example, modern Intel hardware can use multiple layout types for performance depending on the memory access patterns. The Linear layout places data in row-adjacent format, making the buffer suited for scanline-order traversal. In contrast, the Y-Tiled layout splits and packs pixels in memory such that the geometrically close-by pixels fit into the same cache line, reducing misses for x- and y- adjacent pixel data when sampled – but couldn’t be used for scan-out buffers before the Skylake generation due to hardware constraints. Skylake also allows the single-sampled buffer data to be compressed in-hardware before being passed around to cut down bus bandwidth, with an extra auxiliary buffer to contain the compression metadata. The Intel Memory Views Reference Manual describes these and more layout orders in depth.

Besides access-pattern related optimizations, a similar need for layout communication arises when propagating buffers through the multimedia stack. The msm vidc video decoder (present on some Samsung and Qualcomm SoCs) arranges decoded frames in a tiled variant of the NV12 fourcc format – the NV12_64Z32. With a modifier associated with the buffer, the GPU driver can use the information to program the texture samplers accordingly – as is the case with the a3xx, using the hardware path to avoid explicitly detiling the frame.

Figure 2. Buffer Layout for NV12 Format with Tiled Modifier. The data is laid out as 64×32 WxH tiles similar to NV12, but the tiles appear in a zigzag order instead of linear.

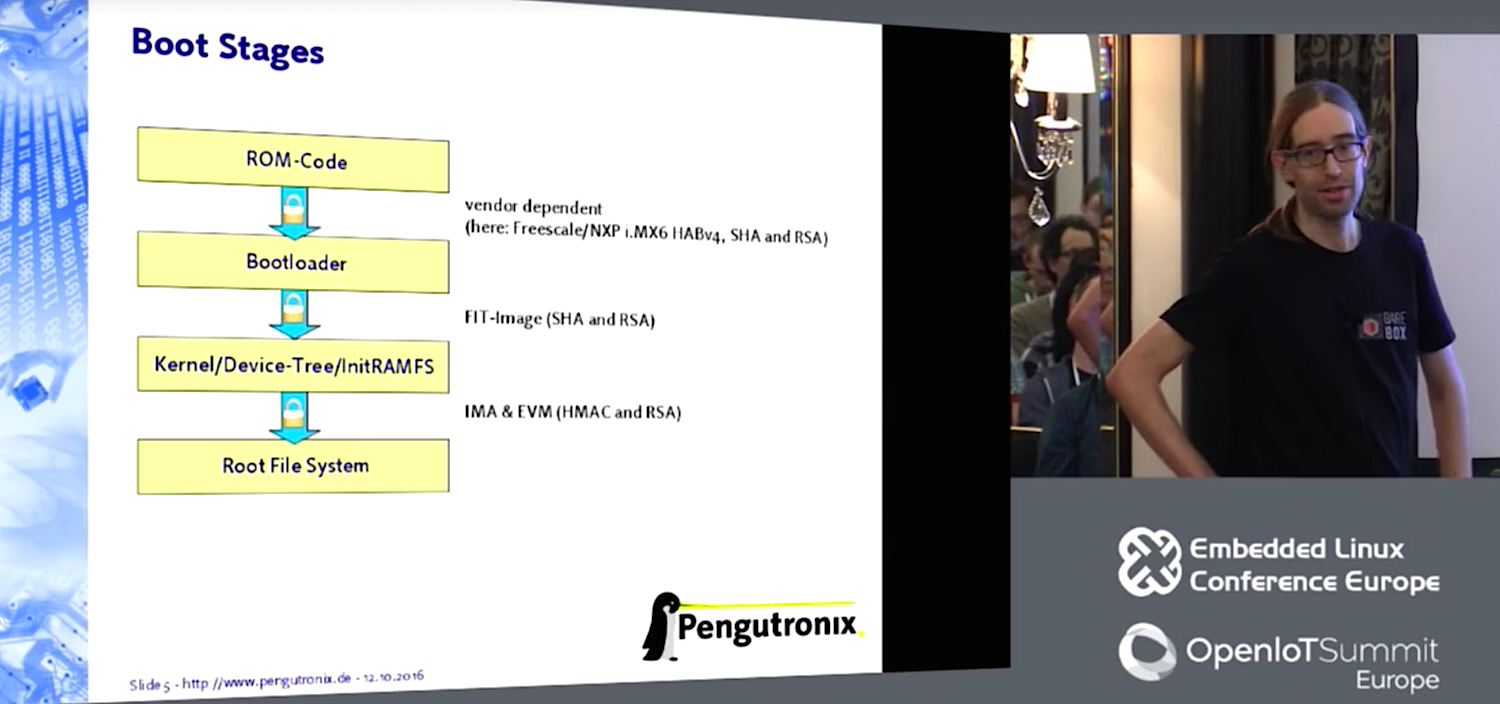

To ease this situation, an extra 64b ‘modifier’ field was added into DRM’s kernel modesetting to contain this vendor specific buffer layout information. These modifier codes are defined in the drm_fourcc.h header file.

Current state of affairs across components

With the AddFB2 ioctl, DRM lets userspace attach a modifier to buffers it imports. For userspace, libdrm support is also planned to allow probing the kernel support and scanout-ability of a given buffer layout, now representable as a fourcc+modifier combination. A modifier aware GetPlane ioctl analog, ‘GetPlane2’ is up for consideration. With recent patches from Intel, GBM becomes capable of allocating modifier-abiding surfaces too, for Wayland compositors and the X11 server to render to.

Collabora and others recently published an EGL extension, EGL_EXT_image_dma_buf_import_modifiers, which makes it possible to create EGLImages out of dmabuf buffers with a format modifier via eglCreateImageKHR, which can then be bound as external textures for the hardware to render into and sample from. It also introduces format and modifier query entrypoints that allow for easier buffer constraint negotiation with knowing the GL capabilities beforehand to avoid hit-and-trial guesswork and bailout scenarios that can arise for compositors.

Mesa provides an implementation for the extension, along with driver support on Skylake to import and sample compressed color buffers. The patchset discussions can be found at mesa-dev: ver1 ver2.

The Wayland protocol has been extended to allow the compositor to advertise platform supported format modifiers to its client applications, with Weston supporting this.

With the full end-to-end client-to-display pipeline now supporting tiled and compressed modes, users can transparently benefit from the reduced memory bandwidth requirements.

Further reads

Some GPUs find the 64b modifier to be too restrictive and require more storage to convey layout and related metadata. AMDGPU associates 256 bytes of information with each texture buffer to describe the buffer layout.

To standardize buffer allocation and cross-platform sharing, the Unix Device Memory Allocation project is being discussed.

Thanks to Google for sponsoring a large part of this work as part of ChromeOS development.