The task of securing Linux systems is so mind-bogglingly complex and involves so many layers of technology that it can easily overwhelm developers. However, there are some fairly straightforward protections you can use at the very core: the kernel. These hardening techniques help developers guard against the bugs that haven’t yet been detected.

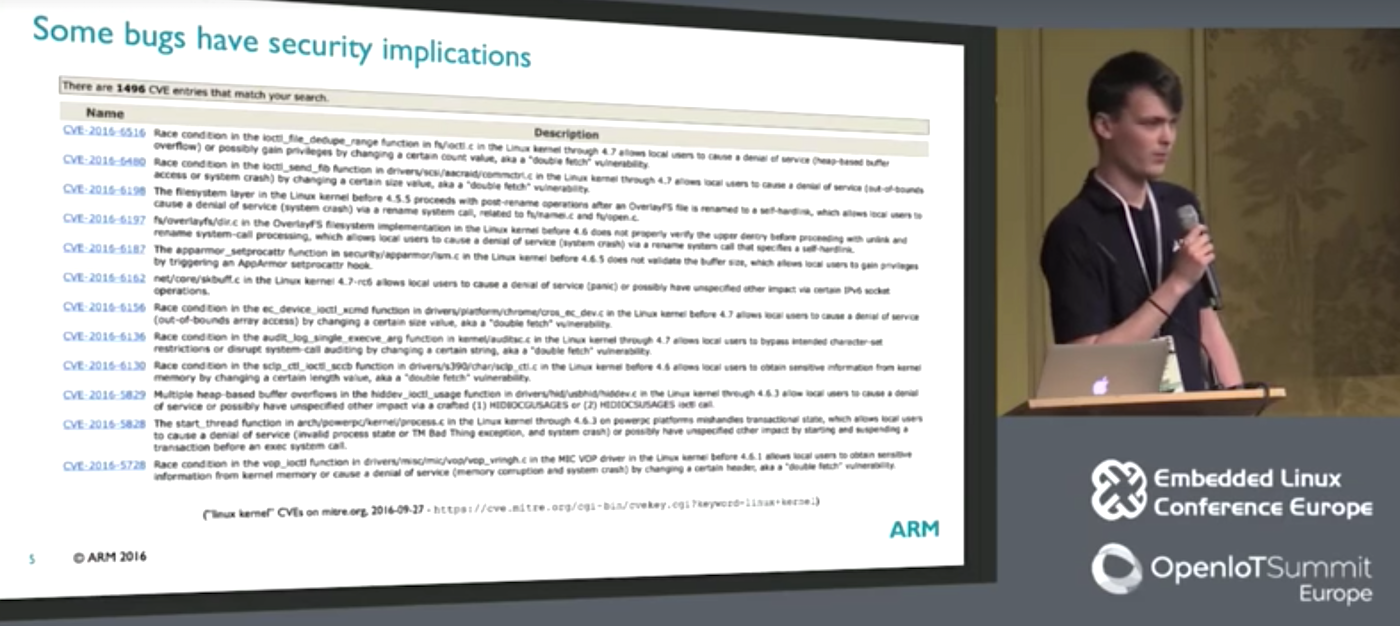

“Hardening is about making bugs more difficult to exploit,” explained Mark Rutland, a kernel developer at ARM Ltd, at the recent Embedded Linux Conference Europe 2016 in Berlin. There will always be dangerous bugs that manage to evade the notice of kernel developers, he added. “We do not yet know which particular bugs exist in the next kernel, and we probably won’t for five years,” he said, referring to Kees Cook’s recent analysis of kernel bug lifetimes.

“We see recurring classes of bugs involving things like dereferencing of null pointers or accessing memory controlled by user space, so we can assume that some of the bugs we don’t know about will fall into these buckets.”

Rutland, who earlier this year warned ELC North America attendees about the hidden dangers of unruly caches, noted that bugs are an unavoidable byproduct of programming. Most are relatively benign, but many cause problems, and some can open dangerous vulnerabilities.

“In the kernel 4.8 merge window we fixed over 500 bugs, many of which were in 4.7 or earlier,” said Rutland, noting that while varied techniques are used today to avoid bugs making it into the kernel, some will inevitably slip through and require later fix-ups. This is “slightly terrifying” given the long lifetime of bugs, which might not be discovered until affected devices are end-of-life.

Some of these bugs have significant security implications. Fortunately, kernel developers have in recent years begun to create hardening features that protect against many of the most common bugs. Rutland implored the audience to make use of these hardening features, noting that many are simple to enable and their protections are “effectively free,” yet they are not widely used.

Rutland went on to discuss several of the main classes of hardening protections that pose the least amount of overhead. Here are some edited quotes about each:

Strict kernel memory permissions – “Historically, the kernel has mapped all memory as readable, writable, and executable…which leads to…being able to modify kernel code or const data, or executing data, all of which…are very useful primitives if you’re an attacker. We can get the MMU to enforce these permissions by…mapping that code as read only or mapping constant data as read only and non-executable. If it’s done in the MMU, it’s effectively free, as the hardware is handling it for us.” (For details, study up on CONFIG_DEBUG_RODATA and CONFIG_DEBUG_SET_MODULE_RONX.)

Stack smashing protection – “Stack smashing attacks work on the principle that stacks contain a return address and other data, as well as local variables. On most architectures, the stack grows downwards, and the buffers grow upwards. If you copy some data to a buffer on the stack, and the data is too large to fit in the buffer, you end up overwriting subsequent data, which happens to include the return address. So if an attacker knows what your stack frame layout will look like, they can control where you will return to, and…branch to any code of their choosing…to launch more advanced attacks. Stack smashing protection guards against this by having the compiler insert a secret value known as a canary between the data and the flow control information.” (For details, see CONFIG_CC_STACKPROTECTOR_REGULAR and CONFIG_CC_STACKPROTECTOR_STRONG.)

User/kernel memory segregation – “Typically the kernel shares an address space with user space in hardware. A pointer can encode an address to either space…using the same load and store instructions. If you accidentally dereference an address…controlled by user space, the hardware won’t notice and will happily give you the value, so if an attacker can convince you to dereference the address…it can be used as the basis for a number of attacks. If an attacker puts a buffer of code in a user space address and then uses a stack smashing exploit to branch to that, they can do whatever they want. The MMU can help by letting us change the page table dynamically, which we use to switch processes. Enabling access temporarily and then disabling access…will catch most of these unintentional user memory accesses or branches.”

Stricter permissions – “Some hardware can…automatically prevent arbitrary code execution from a user space buffer. On ARM we have a feature called privileged execute never (PXN), which…says I never want this page to be executed with kernel privileges. x86 has a similar thing called SMEP. An attacker can still branch to another piece of kernel code, so it doesn’t prevent arbitrary execution, but it limits one case. More recently, MMUs have become able to do this with data accesses as well.” (The data-access counterparts are Privileged Access Never, or PAN, on ARM and SMAP on x86.)

Rutland cautioned that it will be “years before we have a reasonable number of protections.” He also noted that the protections are not 100 percent effective and that “we still have to find and fix bugs.”

In Rutland’s view, Linux systems would be more secure if more of these hardening features were turned on by default. He noted that this happened in kernel 4.9, which made the aforementioned DEBUG_RODATA mandatory. “Resistance is slowly going away for some of these protections,” he said. “Lots of the complaints about the features not looking like kernel code and doing things wrong are being solved quite quickly. People’s opinions about mainline are changing – there’s agreement that yes, we need to do something here.”

Rutland’s full presentation, “Thwarting Unknown Bugs: Hardening Features in the Mainline Linux Kernel,” was recorded on video.

Embedded Linux Conference + OpenIoT Summit North America will be held on February 21 – 23, 2017 in Portland, Oregon. Check out over 130 sessions on the Linux kernel, embedded development & systems, and the latest on the open Internet of Things.

Linux.com readers can register now with the discount code LINUXRD5 for 5% off the attendee registration price.