As Chair of the Architecture Group of The Linux Foundation’s CE Working Group, Tim Bird has long been the amiable public face of the Embedded Linux Conferences, which he has run for over a decade. At the recent ELC Europe event in Berlin, Bird gave a “Status of Embedded Linux” keynote in which he discussed the good news in areas like GPU support and virtually mapped kernel stacks, as well as the slow progress in boot time, system size, and other areas that might help Linux compete with RTOSes in IoT leaf nodes.

Bird also opened ELCE with welcoming remarks and closed it with a Closing Game trivia show. Did you know that Linus Torvalds was once bitten by a penguin, or that his father was a member of the European Parliament? Or that Linux has not yet made it to the surface of Mars? Now you do. (See the video below.)

Bird launched his talk by noting the increasingly consistent cadence of kernel releases, now running between 63 and 70 days. More good news: When Greg Kroah-Hartman, whom Bird interviewed in an ELCE fireside chat, announced the next LTS release in advance for the first time, developers restrained themselves from rushing to cram patches into it. Kernel v4.9 LTS is due in early December.

Indeed, Linux has matured, as befits an OS that by some counts has been injected into 1.5 billion objects. Bird thinks it may actually be more than 2 billion by now, although nobody knows for sure.

In any case, the status of embedded Linux is “great,” says Bird. That doesn’t stop him from worrying about the future. “Everyone knows IoT gateways are going to run Linux, but I worry that Linux is not going to run on those 9 billion leaf nodes they’re expecting for IoT,” he said. “I worry that Linux won’t be the first OS running Minecraft on a cereal box.”

To achieve the tuxified cereal box of his dreams, Bird estimates that costs must be reduced to $1.10. Half of that would go to the display, while 40 percent would be consumed by CPU, RAM, and flash. The rest would cover a battery and input device. “Today we’re still at $5 for CPU and memory alone,” he noted.
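The split Bird describes is easy to work through. The short sketch below is only an illustration of that arithmetic: the variable and function names are invented here, and the comparison at the end assumes that the "$5 for CPU and memory" figure can be set against the 40 percent share for CPU, RAM, and flash, which is an approximation rather than something Bird stated.

```python
# Illustrative arithmetic only; names are hypothetical, figures are from the talk.
# Work in cents to avoid floating-point rounding.
TARGET_CENTS = 110  # $1.10 total target cost

# Shares quoted in the talk; whatever is left covers battery and input device.
SHARES_PERCENT = {
    "display": 50,
    "cpu_ram_flash": 40,
}


def budget_cents(total_cents, shares_percent):
    """Split a total budget by percentage and assign the remainder."""
    budget = {
        name: total_cents * pct // 100 for name, pct in shares_percent.items()
    }
    budget["battery_and_input"] = total_cents - sum(budget.values())
    return budget


budget = budget_cents(TARGET_CENTS, SHARES_PERCENT)

# Assumption: compare today's "$5 for CPU and memory" with the 40 percent share.
CURRENT_CPU_MEMORY_CENTS = 500
gap = CURRENT_CPU_MEMORY_CENTS / budget["cpu_ram_flash"]

for name, cents in budget.items():
    print(f"{name}: ${cents / 100:.2f}")
print(f"CPU and memory today cost roughly {gap:.1f}x the target share")
```

Under these assumptions, the display would get 55 cents, the CPU, RAM, and flash 44 cents, and the battery and input device 11 cents, which puts today's CPU and memory cost at more than ten times the target.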

The point is that RTOSes are likely to get there first. Bird, who is a Senior Software Engineer at Sony, mentioned a recent Sony audio player project in which NuttX beat out Linux because “it’s easier to add stuff to NuttX than to trim down Linux.”

Even if Linux may not beat RTOSes to the cereal box market, it troubles Bird that Linux is not being more aggressively extended to capture more of the IoT endpoint market. “There’s been a ton of driver work on CPUs, GPUs, and embedded devices in this year’s kernels, which is great,” said Bird, “but not much on features like boot time, system size, or embedded filesystems.”

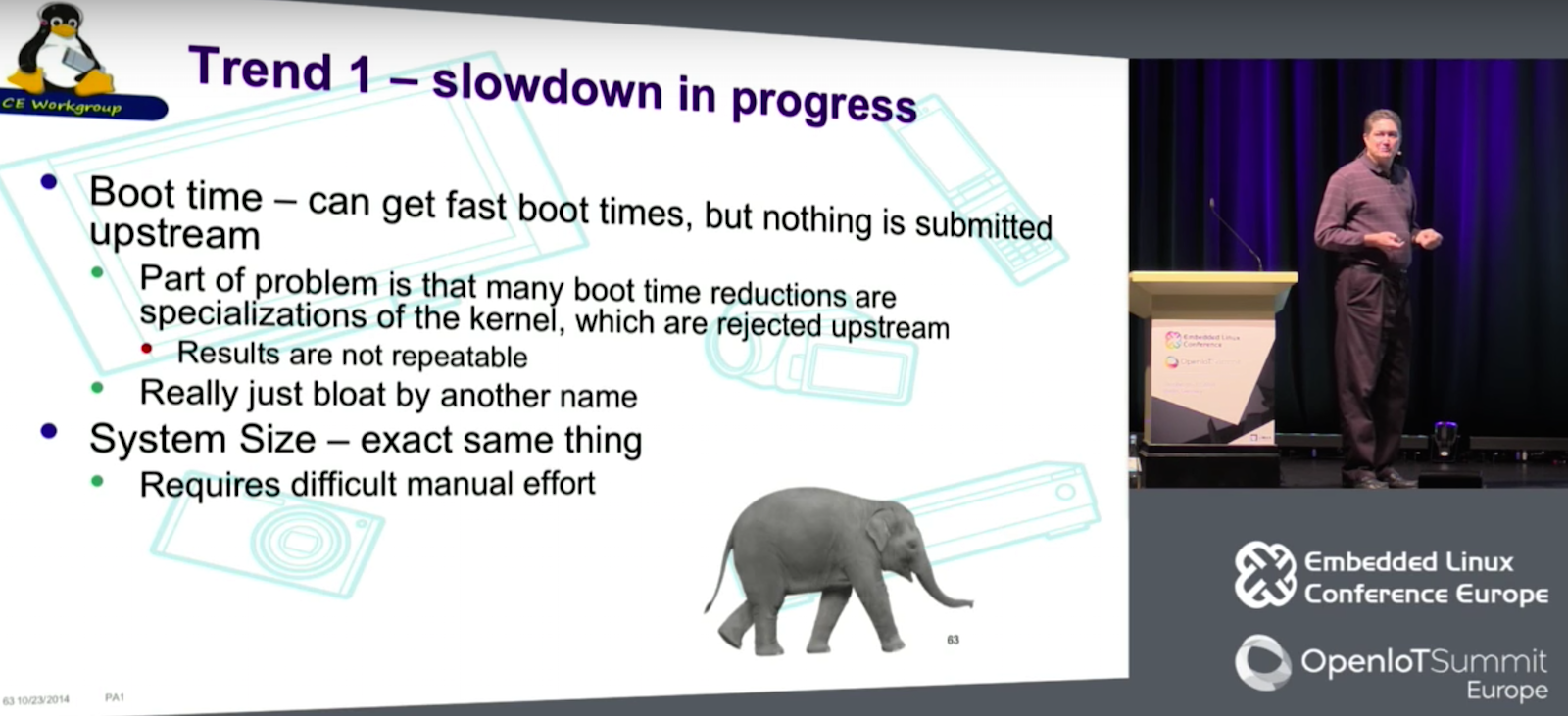

Bird noted a decrease in both kernel submissions and ELC and ELCE talks on topics that dominated the first few years of ELC: boot time, system size, file systems, power management, real-time, and security. While numerous lightweight Linux distros have emerged for IoT, there has been little progress on the kernel side to reduce footprint. Not much is going on with the Linux Kernel Tinification or Linux Tiny projects. “We haven’t seen much new since Linux 4.1 when they got rid of users and groups, saving about 25K.”

If embedded open source development continues to expand beyond Linux to RTOSes like NuttX, FreeRTOS, Mbed, and the Linux Foundation’s Zephyr, fragmentation will only increase, argued Bird. “We already have way too many embedded Linux distributions. That makes it hard to share non-kernel stuff like system-wide and feedback-directed optimizations or security enhancements. We need to find ways to share our package management and our test capabilities.” Bird is hardly anti-RTOS, however, stating: “It’s a really big deal that Linaro announced support for Zephyr.”

As IoT developers increasingly work with both Linux and RTOSes, they must not only integrate more technologies but also contend with the permissive non-GPL licenses, such as BSD, that RTOSes increasingly use. “We have too many OSes with different licenses,” said Bird, before recommending one admittedly controversial response: dual-licensing code for GPL and BSD.

Generalization vs. specialization

All these issues are played out against a struggle in embedded Linux between generalization and specialization. “In open source we want to generalize to get the network effects of a big group of collaborators,” said Bird. “The device tree is moving the kernel toward greater generalization, with drivers written to handle all possible IP block configurations across multiple CPUs. But in embedded we want to specialize and make our devices as efficient, power-light, and cost effective as possible. But then you lose that community effect.”

This tension has limited progress on faster boot times and smaller footprints. “We can do fast boot, but most techniques use kernel specializations that are rejected upstream. Boot time is unique per platform, and reductions tend not to be mainlinable.” The problem is that improvements like fast boot and Linux tinification require subtractive rather than additive engineering. “If you try to rip Linux apart, you end up with Franken-Linux. You can’t pull the pieces apart cleanly.”

Bird has recently been deeply involved in testing automation, where he is leading an LTSI project called Fuego. “Every company builds up their own test, which leads to fragmentation,” said Bird. “Testing automation could help make up for some of the loss of community involved with specialized software. We need to share not only test packages but test experience.”

Staying true to open source principles can help solve these challenges, said Bird. “Look at other projects to find commonality. Find a way to share ideas at a minimum and code if you can. And keep working on upstreaming.”

Highlights of Embedded Linux 2016

In addition to grappling with the big picture, Bird gave a detailed breakdown of embedded Linux progress in specific segments. He also summarized the embedded highlights of recent kernel releases, such as LightNVM in Linux 4.4, ARM multiplatform support in Linux 4.5, and a timer wheel update in Linux 4.8.

Linux 4.9 will bring a technology called virtually mapped kernel stacks, which helps detect stack overruns, clean up kernel code, and speed process creation. “Being able to catch stack overflows inside the kernel is a huge deal,” said Bird. “It’s a level of robustness we’ve never seen before.”

Here’s a run-through of some 2016 embedded Linux trends highlighted by Bird, both inside and outside the kernel project:

Boot-up Time – As noted, not much is shaking. Intel’s XIP (eXecute-In-Place) for x86 “was welcome,” but “asynchronous probing didn’t really go anywhere.”

Device Trees – “Overlays seem to be working as intended,” but validation is stalled. Updating the device tree spec is under discussion.

Graphics – Vulkan API v1.0 from Khronos Group provided a welcome alternative to Direct3D or OpenGL with less CPU and GPU overhead. “AMD plans to open source the driver, and Intel and Valve are already working on it. Nvidia supports it.” The bad news: Qt changed its license from LGPL 2.1 to LGPL 3.0, which is “undesirable for many consumer electronics products,” said Bird. “It has a lot of people in our industry worried.”

GPUs – “Freedreno (Adreno) and Etnaviv (Vivante) have really made progress with free drivers.” There’s also been work on the Raspberry Pi’s Broadcom VC4 GPU. The bad news: there’s nothing new from the Lima project (ARM Mali), and nothing yet on the PowerVR front.

File systems – To address the trend toward opaque “black-box” block-based storage used in eMMC, solutions have emerged like LightNVM, a framework for holding SSD parameters. LightNVM allows the kernel to “move the flash translation layer from the black-box hardware up into the software where you have visibility.” Also: “Free Electrons is doing some good work on UBIFS handling of MLC NAND.”

Networking – Bluetooth 4.2 support added better security, faster speed, and 6LoWPAN mesh networking integration. There has also been work on IoT protocols like Thread.

Real-time Linux – The latest RT-preempt was released with Linux 4.8, and “Thomas Gleixner says there are only 10K lines left. I think it will be more than that.” Also, Xenomai 3.0.1 has arrived with a new Cobalt core.

Security – Not much transpired in 2016. However: “A new kernel security hardening project is addressing classes of problems instead of individual bugfixes.”

System Size – Not much happened in 2016, although the XIP patches helped out here as well. Going forward: “Nicolas Pitre is doing some interesting work on gcc --gc-sections, and Vitaly Wool is working on stuff.”

Testing – There has been plenty of work done on Kselftest, LAVA V2, Fuego, and Kernelci.org, which Bird calls “the most successful, public, distributed Linux test system in the world.”

Toolchain – “Khem Raj is doing interesting work in Yocto Project for Clang.”

Tracing – eBPF is being used for dynamic tracing, and there’s a new tracefs filesystem, which is no longer part of debugfs. There’s also been work on Ftrace histogram triggers.

For more information, watch the complete video below.

Embedded Linux Conference + OpenIoT Summit North America will be held on February 21 – 23, 2017 in Portland, Oregon. Check out over 130 sessions on the Linux kernel, embedded development & systems, and the latest on the open Internet of Things.
