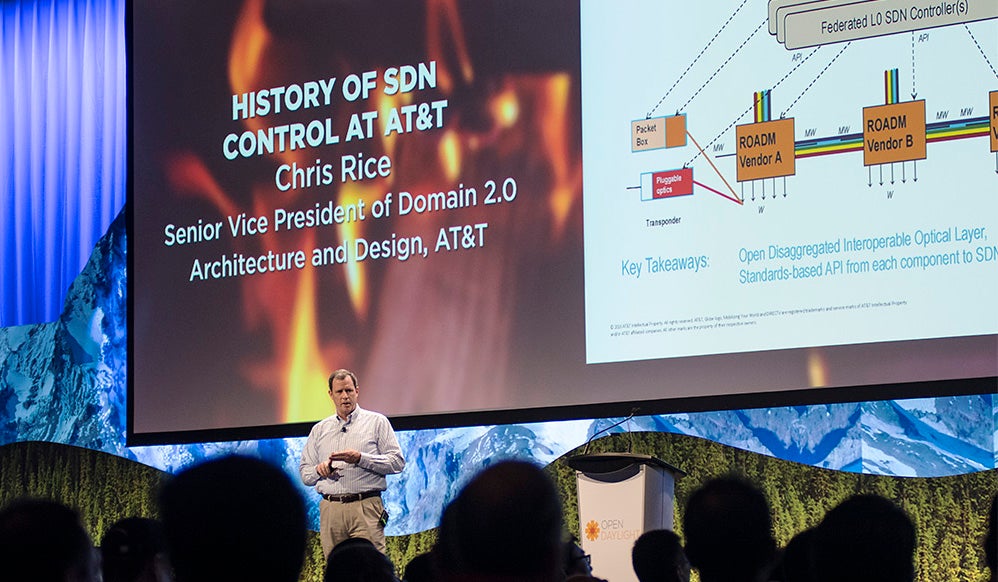

For many years AT&T has been at the forefront of virtualizing a Tier 1 carrier network. It has done so in a very open fashion, actively participating in, and driving, many open source initiatives. These include Domain 2.0, ECOMP, and CORD, all of which are driving innovation in the global service provider market. Chris Rice, AT&T's Senior Vice President of Domain 2.0 Architecture and Design, explained how AT&T got to where it is today during his keynote address at the OpenDaylight (ODL) Summit.

Recounting the history of this journey, Rice noted that today's implementations and visions started years ago. One of the first steps was the creation of what he called a router farm, prompted by a router reaching end of life with no new router that could simply take its place. The goal was to remove the static relationship between the edge router and the customer. Once this was done, AT&T could provide better resiliency to its customers, detect failures, perform planned maintenance, and schedule backups. It could also move configurations from one vendor's router to another's. The result was faster and cheaper; however, "it just wasn't as reusable as they wanted." The lesson was the importance of separating services from the network and from the devices.

About three and a half years ago, the broader community and ecosystem started to address many of the company's key concerns. For example, Rice noted that Intel had continued to improve the packet processing performance of its general purpose CPUs, achieving a 100-fold improvement over a 10-year period. AT&T concluded that "next-generation carrier networks must be: cloud-based, model-driven, and software defined." He acknowledged that this might not sound exciting today, but three and a half years ago it was insightful.

ECOMP and VNFs

During this time ECOMP (Enhanced Control, Orchestration, Management and Policy) was born. Rice stressed that ECOMP is "not a science project." It has been fully vetted and has run in production networks for the past two years. Today, ECOMP comprises more than 8.5 million lines of code and is growing every day. Rice also noted that 5.7 percent of AT&T's network is currently virtualized, with a goal of 30 percent by the end of 2016.

Within ECOMP, the Layer 2/3 SDN controller is based on ODL, as is the Layer 4-7 VNF management and application controller. According to Rice, a key takeaway is that ODL can be used at all layers of the OSI stack. The Layer 4-7 application controller is used to initialize and configure virtual network functions (VNFs), automate the VNF lifecycle, and monitor and correct faults and failures in application components. He pointed out that all of this is achieved through a vendor- and VNF-agnostic mechanism.

Legos Not Snowflakes

Perhaps the biggest takeaway from Rice's keynote was his comment that today's VNFs are "snowflakes and we want Lego blocks not snowflakes." Lego blocks come in different shapes and colors, yet they all fit together. If each VNF is unique, integrating it requires a costly and time-consuming "one-off" effort. With the Lego model, AT&T can build a framework or foundation once and then add or replace interoperable VNFs as required.

Some functions must be supported by every VNF, regardless of its specific purpose. Rice noted that AT&T must be able to configure, test, scale, start, stop, restart, and rebuild every VNF, no matter what it does. The industry as a whole, he continued, must work to normalize VNFs so that they support this common operational framework, the Lego model. To illustrate the model's success, Rice described AT&T's work on the optical transport portion of its network. Using this methodology, AT&T has achieved end-to-end multi-vendor interoperability and can configure and reconfigure ROADMs (reconfigurable optical add-drop multiplexers) from multiple vendors.
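Rice did not present code, but the Lego model can be pictured as a single lifecycle contract that every VNF implements, so that one generic workflow can drive any vendor's function. The following is a minimal, purely illustrative sketch of that idea: the names `LegoVNF`, `ToyVNF`, and `bring_up` are hypothetical and do not correspond to any actual ECOMP or ODL API, and `ToyVNF` only tracks state in memory rather than managing a real network function.

```python
from abc import ABC, abstractmethod


class LegoVNF(ABC):
    """Hypothetical common lifecycle contract that every VNF would implement."""

    @abstractmethod
    def configure(self, settings: dict) -> None: ...

    @abstractmethod
    def test(self) -> bool: ...

    @abstractmethod
    def scale(self, instances: int) -> None: ...

    @abstractmethod
    def start(self) -> None: ...

    @abstractmethod
    def stop(self) -> None: ...

    @abstractmethod
    def rebuild(self) -> None: ...

    def restart(self) -> None:
        # Default restart: stop, then start. A vendor could override this.
        self.stop()
        self.start()


class ToyVNF(LegoVNF):
    """Hypothetical stand-in for a vendor VNF; it only tracks state in memory."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.settings: dict = {}
        self.instances = 1
        self.running = False

    def configure(self, settings: dict) -> None:
        self.settings = dict(settings)

    def test(self) -> bool:
        # Toy health check: healthy only if configured and running.
        return self.running and bool(self.settings)

    def scale(self, instances: int) -> None:
        if instances < 1:
            raise ValueError("instances must be >= 1")
        self.instances = instances

    def start(self) -> None:
        self.running = True

    def stop(self) -> None:
        self.running = False

    def rebuild(self) -> None:
        # Discard state and return to a freshly instantiated condition.
        self.stop()
        self.settings = {}
        self.instances = 1


def bring_up(vnf: LegoVNF, settings: dict, instances: int) -> bool:
    """Generic workflow that works for any VNF honoring the contract."""
    vnf.configure(settings)
    vnf.scale(instances)
    vnf.start()
    return vnf.test()


if __name__ == "__main__":
    fleet = [ToyVNF("firewall"), ToyVNF("load-balancer")]
    for vnf in fleet:
        print(vnf.name, bring_up(vnf, {"mgmt_ip": "192.0.2.10"}, instances=2))
```

The design point is that `bring_up` depends only on the shared contract, so adding or replacing a VNF means writing one new implementation of `LegoVNF` rather than a new one-off integration.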

Rice finished his talk by explaining "why ODL." First, ODL controllers are platforms for innovation, both in the way networks are built and in the way services are designed. Second, programmable controllers, such as those built on ODL, must support a rich set of northbound and southbound interfaces as well as east/west capabilities. Here Rice stressed that support for what he called "brownfield" protocols, those used by existing deployed equipment, is a mandatory requirement. Third, Rice called on the ODL community to focus on reliability and scale, specifically calling out the need for geographic redundancy.