Part 3 had us running our Kr8sswordz Puzzle app, spinning up multiple instances for a load test, and watching Kubernetes gracefully balance numerous requests across the cluster.

Though we set up Jenkins for use with our Hello-Kenzan app in Part 2, we have yet to set up CI/CD hooks for the Kr8sswordz Puzzle app. Part 4 walks through that setup. We will use a Jenkins 2.0 Pipeline script for the Kr8sswordz Puzzle app, this time with an important difference: builds are triggered by updates to the forked Git repo. The walkthrough simulates a feature change to our application by pushing code to Git, which kicks off the Jenkins build process. In a real-world lifecycle, this automation lets developers simply push code to a specific branch and have their app built, pushed, and deployed to a specific environment.


This tutorial only runs locally in Minikube and will not work in the cloud. You’ll need a computer running an up-to-date version of Linux or macOS. Optimally, it should have 16 GB of RAM; at minimum, it should have 8 GB. For best performance, reboot your computer and keep the number of running apps to a minimum.


Creating a Kr8sswordz Pipeline in Jenkins

Before you begin, you’ll want to make sure you’ve run through the steps in Parts 1, 2, and 3 so that you have all the components we previously built in Kubernetes (to do so quickly, you can run the automated scripts detailed below). For this tutorial we are assuming that Minikube is still up and running with all the pods from Part 3.

We are ready to create a new pipeline specifically for the puzzle service. This will allow us to quickly re-deploy the service as a part of CI/CD.

1. Enter the following terminal command to open the Jenkins UI in a web browser. Log in to Jenkins using the username and password you previously set up.

minikube service jenkins

2. We’ll want to create a new pipeline for the puzzle service that we previously deployed. On the left in Jenkins, click New Item.


For simplicity, we’re only going to create a pipeline for the puzzle service, but we’ve provided Jenkinsfiles for the rest of the services so that the whole application can be made fully CI/CD capable.


3. Enter the item name as Puzzle-Service, click Pipeline, and click OK.

4. Under the Build Triggers section, select Poll SCM. For the Schedule, enter the string H/5 * * * *, which polls the Git repo every 5 minutes for changes.

5. In the Pipeline section, change the following settings:

a. Definition: Pipeline script from SCM

b. SCM: Git

c. Repository URL: Enter the URL for your forked Git repository

d. Script Path: applications/puzzle/Jenkinsfile


Remember how in Part 3 we had to manually replace the $BUILD_TAG env var with the Git commit ID? The Kubernetes Continuous Deploy plugin we’re using in Jenkins automatically finds variables in K8s manifest files ($VARIABLE or ${VARIABLE}) and replaces them with the environment variables pre-configured for the pipeline in the Jenkinsfile. Kubernetes v1.11.0 has no built-in variable substitution for manifests, but the Kubernetes CD plugin, a third-party tool, provides it for us.


6. When you are finished, click Save. On the left, click Build Now to run the new pipeline. This rebuilds the puzzle image, pushes it to the registry, and redeploys the puzzle pod. You should see it successfully run through the build, push, and deploy steps in a few minutes.
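During the deploy step of that build, the Kubernetes Continuous Deploy plugin fills in the $VARIABLE and ${VARIABLE} placeholders in the manifests, as described in the note above. As a rough illustration of the idea (not the plugin’s actual implementation), Python’s standard string.Template recognizes the same two placeholder forms. The manifest fragment, the 127.0.0.1:30400 registry address, and the commit ID below are hypothetical placeholders.

```python
from string import Template
from typing import Dict

# Hypothetical pod-template fragment; the real manifest lives in the repo.
MANIFEST = """\
metadata:
  labels:
    commit: "${GIT_COMMIT}"
spec:
  containers:
    - name: puzzle
      image: 127.0.0.1:30400/puzzle:$BUILD_TAG
"""


def render_manifest(text: str, variables: Dict[str, str]) -> str:
    """Replace $VAR and ${VAR} placeholders with values from `variables`.

    safe_substitute leaves unknown placeholders intact instead of raising
    KeyError, so a missing variable stays visible in the rendered output.
    """
    return Template(text).safe_substitute(variables)


if __name__ == "__main__":
    print(render_manifest(MANIFEST, {"BUILD_TAG": "a1b2c3d", "GIT_COMMIT": "a1b2c3d"}))
```

Using safe_substitute rather than substitute is a deliberate choice in this sketch: a misspelled variable name leaves its placeholder in the output, where it is easy to spot, instead of aborting the render.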

Our Puzzle-Service pipeline is now set up to poll the Git repo for changes every 5 minutes and kick off a build if changes are detected.
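The Poll SCM trigger configured in step 4 does two things: it wakes up at the minutes selected by H/5 * * * *, and on each wake-up it checks whether the branch has a commit that has not been built yet. The Python sketch below models both pieces under stated assumptions; it is not Jenkins code. The H tells Jenkins to pick a stable, job-specific offset so that many jobs do not all poll at minute 0; here zlib.crc32 stands in for Jenkins’ own hash of the job name, so the exact minutes will differ on your server. fetch_head is a hypothetical callable standing in for however the remote branch head is read.

```python
import zlib
from dataclasses import dataclass
from typing import Callable, List, Optional


def hashed_step_minutes(job_name: str, step: int = 5) -> List[int]:
    """Minutes of the hour selected by an `H/<step>` minute field.

    Assumption: zlib.crc32 stands in for Jenkins' job-name hash, so the
    offset is illustrative; the fixed spacing between polls is the point.
    A bare `H` (no step) picks a single minute and is not modeled here.
    """
    if not 2 <= step <= 60:
        raise ValueError("step must be between 2 and 60")
    offset = zlib.crc32(job_name.encode("utf-8")) % step  # stable, in [0, step)
    return list(range(offset, 60, step))


@dataclass
class PollState:
    """Remembers the commit that the most recent build was triggered for."""
    last_built: Optional[str] = None


def poll_once(state: PollState, fetch_head: Callable[[], str]) -> bool:
    """Decide whether one polling cycle should start a build.

    fetch_head is a hypothetical callable returning the current commit ID
    of the watched branch. The remote is queried on every cycle, even when
    nothing has changed.
    """
    head = fetch_head()
    if head == state.last_built:
        return False  # no new commit since the last build
    state.last_built = head
    return True  # new commit (or first poll): start a build


if __name__ == "__main__":
    print(hashed_step_minutes("Puzzle-Service"))  # 12 minutes, 5 apart
    heads = iter(["abc123", "abc123", "def456"])
    state = PollState()
    print([poll_once(state, lambda: next(heads)) for _ in range(3)])
    # [True, False, True]
```

Because a poll only fires on those minutes, a push can wait up to about 5 minutes before Jenkins notices it.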

Pushing a Feature Update Through the Pipeline

Now let’s make a single change that will trigger our pipeline and rebuild the puzzle-service.

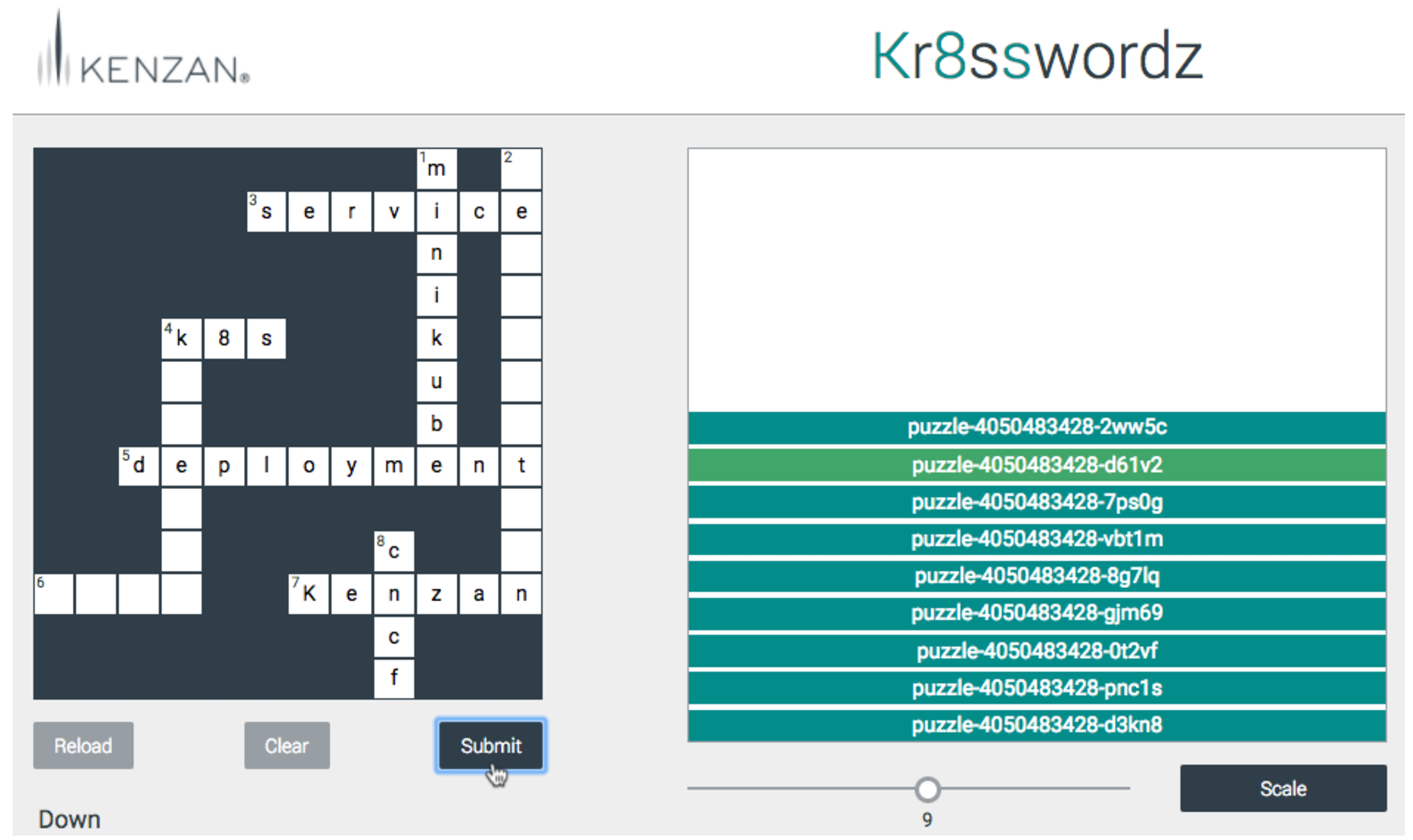

On our current Kr8sswordz Puzzle app, hits against the puzzle services show up as white in the UI when pressing Reload or performing a Load Test:

However, you may have noticed that no white hit lights up when you click the Submit button. We are going to remedy this with an update to the code.

7. In a terminal, run the following command to open the Kr8sswordz Puzzle app. (If you don’t see the puzzle, you might need to refresh your browser.)

minikube service kr8sswordz

8. Spin up several instances of the puzzle service by moving the slider to the right and clicking Scale. For reference, click Submit and notice that the white hit does not register on the puzzle services.


If you did not allocate 8 GB of memory to Minikube, we suggest not exceeding 6 scaled instances using the slider.


9. Edit applications/puzzle/common/models/crossword.js in your favorite text editor, or edit it in nano using the commands below.

cd ~/kubernetes-ci-cd

nano applications/puzzle/common/models/crossword.js

You’ll see the following commented section on lines 42-43:

// Part 4: Uncomment the next line to enable puzzle pod highlighting when clicking the Submit button
//fireHit();

Uncomment line 43 by deleting the forward slashes, then save the file. (In nano, press Ctrl+X to exit, type Y to save the modified file, and press Enter to confirm the filename.)

10. Commit and push the change to your forked Git repo (you may need to enter your GitHub credentials):

git commit -am "Enabled hit highlighting on Submit"

git push

11. In Jenkins, open the Puzzle-Service pipeline and wait for it to trigger a build. Because Jenkins polls the repo every 5 minutes, the build may not start right away; give it some time.

12. After it triggers, observe how the puzzle services disappear in the Kr8sswordz Puzzle app, and how new ones take their place.

13. Try clicking Submit to test that hits now register as white.

If you see one of the puzzle instances light up, it means you’ve successfully set up a CI/CD pipeline that automatically builds, pushes, and deploys code changes to a pod in Kubernetes. It’s okay—go ahead and bask in the glory for a minute.

You’ve completed Part 4 and finished Kenzan’s blog series on CI/CD with Kubernetes!

From a development perspective, it’s worth mentioning a few things that might be done differently in a real-world scenario with our pipeline:

- You would likely have separate repositories for each of the services that make up the Kr8sswordz Puzzle, to keep the development, build, and deployment of each microservice separate. Here we’ve combined all the services in one repo for ease of use with the tutorial.

- You would also set up individual pipelines for the monitor-scale and kr8sswordz services. Jenkinsfiles for these services are included in the repository, but for the purpose of the tutorial we’ve kept things simple with a single pipeline to demonstrate CI/CD.

- You would likely set up separate pipelines for each deployment environment, such as Dev, QA, Stage, and Prod. To trigger builds for these environments, you could use Git branches that represent the environments you push code to (for example, the dev branch deploys to Dev, the master branch deploys to QA, and so on).

- Though easy to set up, SCM polling is somewhat resource intensive, because Jenkins has to query the Git repo on every polling interval whether or not anything has changed. An alternative is to install the Jenkins GitHub plugin on your Jenkins server and have GitHub notify Jenkins of each push through a webhook.

Automated Scripts

If you need to walk through the steps we did again (or do so quickly), we’ve provided npm scripts that will automate running the same commands in a terminal.

1. To use the automated scripts, you’ll need to install NodeJS and npm.

On Linux, follow the NodeJS installation steps for your distribution. To quickly install NodeJS and npm on Ubuntu 16.04 or higher, use the following terminal commands.

a. curl -sL https://deb.nodesource.com/setup_7.x | sudo -E bash -

b. sudo apt-get install -y nodejs

On macOS, download the NodeJS installer, and then double-click the .pkg file to install NodeJS and npm.

2. Change directories to the cloned repository and install the interactive tutorial script:

a. cd ~/kubernetes-ci-cd

b. npm install

3. Start the script:

npm run part1 (or part2, part3, or part4, for the corresponding part of the blog series)

4. Press Enter to proceed running each command.


Building the Kr8sswordz Puzzle app has shown us some pretty cool continuous integration and container management patterns:

- How infrastructure such as Jenkins or image registries can run as pods in Kubernetes.

- How Kubernetes handles scaling, load balancing, and automatic healing of pods.

- How Jenkins 2.0 Pipeline scripts can run automatically on a Git commit to build the container image, push it to a registry, and deploy it as a pod in Kubernetes.

If you are interested in going deeper into the CI/CD Pipeline process with deployment tools like Spinnaker, see Kenzan’s paper Image is Everything: Continuous Delivery with Kubernetes and Spinnaker.

Kenzan is a software engineering and full service consulting firm that provides customized, end-to-end solutions that drive change through digital transformation. Combining leadership with technical expertise, Kenzan works with partners and clients to craft solutions that leverage cutting-edge technology, from ideation to development and delivery. Specializing in application and platform development, architecture consulting, and digital transformation, Kenzan empowers companies to put technology first.

This article was revised and updated by David Zuluaga, a front-end developer at Kenzan. He was born and raised in Colombia, where he earned his BE in Systems Engineering. After moving to the United States, he received his master’s degree in computer science at Maharishi University of Management. David has been working at Kenzan for four years, moving dynamically across a wide range of technology areas, from front-end and back-end development to platform and cloud computing. David has also helped design and deliver training sessions on microservices for multiple client teams.

Curious to learn more about Kubernetes? Enroll in Introduction to Kubernetes, a FREE training course from The Linux Foundation, hosted on edX.org.