The recent emergence of the Internet of Things (IoT) and associated applications into the mainstream means more developers will be required to develop these systems in the coming years. Developers without traditional embedded systems backgrounds are being asked to build applications to meet consumer demand and many of these developers are looking for quick ways to get started.

This post will detail some quick methods for prototyping IoT applications without needing to understand traditional embedded systems details such as bootloaders, device drivers and OS kernels. Using readily available OS images will allow developers to bring up new platforms and quickly focus on IoT features while deferring hardware specifics to later in the development cycle.

Note that this discussion focuses on applications suited to a 32- or 64-bit system running Linux; it does not cover smaller systems that are better suited to an RTOS.

Application Architecture

The application we will develop here is similar in scope to many existing IoT designs, albeit extremely simplified. The goal is to familiarize readers with the general workflow and to create something to be used as a jumping off point for future development.

We will be developing a weather station application, a type of project that is extremely common in the maker community. We will customize our application code to produce two classes of systems common in IoT designs: sensors and actuators. Our sensor systems will act as remote devices that return weather data to a central location. Our actuator systems will read the weather data and effect changes in their environment, such as darkening windows, based on the weather readings. The devices in our fleet will communicate with each other to implement the desired IoT application. Note that the actual weather sensing devices and actuators will be stub implementations so that readers can replicate this without needing custom hardware, but it should be reasonably straightforward to link in physical devices if they are available.

Initial Application Development

Many of the libraries, networking protocols, and languages needed for developing IoT applications are available from your distribution on your PC Linux installation. For features that are not dependent on the target hardware, it makes sense to do as much development as possible on your PC system. As a developer, you are likely very comfortable in that environment and have the tools you need installed and configured to your liking. Additionally, this system will be significantly more powerful than your target board, so your development cycles will be shorter.

Note that the examples shown here were run on an Ubuntu 18.04 system but similar steps should be available on most host OS distributions.

The first step is to download the code examples and install the necessary Python libraries on your PC host. Clone the following git repository to access the sample files:

https://github.com/drewmoseley/iot-mqtt-bbb.git

The two scripts, also shown in listings 1 and 2, represent code running separately on an actuator device and a sensor device.

    #!/usr/bin/python

    import paho.mqtt.client as mqtt

    # Subscribe to both weather topics once the broker accepts the connection
    def onConnect(client, obj, flags, rc):
        print("Connected. rc = %s " % rc)
        client.subscribe("iot-bbb-example/weather/temperature")
        client.subscribe("iot-bbb-example/weather/precipitation")

    # Dummy function to act on temperature data
    def temperatureActuator(temperature):
        print("Temperature = %s\n" % temperature)

    # Dummy function to act on precipitation data
    def precipitationActuator(precipitation):
        action = {
            "rain" : "Grab an umbrella",
            "sun" : "Don't forget the sunscreen",
            "hurricane" : "Buy bread, water and peanut butter."
        }
        print("Precipitation = %s" % precipitation)
        print("\t%s\n" % action[precipitation])

    # Route each incoming message to the actuator registered for its topic
    def onMessage(mqttc, obj, msg):
        callbacks = {
            "iot-bbb-example/weather/temperature" : temperatureActuator,
            "iot-bbb-example/weather/precipitation" : precipitationActuator
        }
        # msg.payload arrives as bytes on Python 3; decode it before use
        callbacks[msg.topic](msg.payload.decode("utf-8"))

    client = mqtt.Client()
    client.on_connect = onConnect
    client.on_message = onMessage
    client.connect("test.mosquitto.org", 1883, 60)
    client.loop_forever()

Listing 1: Actuator Sample
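The topic-to-callback dispatch used by the actuator can be exercised on its own, without a broker or the paho-mqtt library. The following is only a sketch under a few assumptions: the function names `dispatch`, `temperature_message` and `precipitation_message` are hypothetical and not part of the sample repository, the handlers return strings instead of printing, and unknown topics or weather conditions are reported rather than raising a `KeyError`.

```python
# Sketch only: names below are illustrative and not taken from the sample repository.

ACTIONS = {
    "rain": "Grab an umbrella",
    "sun": "Don't forget the sunscreen",
    "hurricane": "Buy bread, water and peanut butter.",
}


def temperature_message(temperature):
    """Format a temperature reading for display."""
    return "Temperature = %s" % temperature


def precipitation_message(precipitation):
    """Format a precipitation reading, tolerating conditions we have no advice for."""
    advice = ACTIONS.get(precipitation, "No advice for this condition")
    return "Precipitation = %s\n\t%s" % (precipitation, advice)


HANDLERS = {
    "iot-bbb-example/weather/temperature": temperature_message,
    "iot-bbb-example/weather/precipitation": precipitation_message,
}


def dispatch(topic, payload):
    """Route one message to its handler; payload may be bytes or str."""
    if isinstance(payload, bytes):
        payload = payload.decode("utf-8")
    handler = HANDLERS.get(topic)
    if handler is None:
        return "Ignoring unexpected topic %s" % topic
    return handler(payload)


if __name__ == "__main__":
    print(dispatch("iot-bbb-example/weather/temperature", b"86"))
    print(dispatch("iot-bbb-example/weather/precipitation", b"rain"))
```

Keeping the routing logic free of network calls like this makes it easy to test on the PC before it is wired into the `on_message` callback.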

    #!/usr/bin/python

    import paho.mqtt.publish as mqtt
    import time
    import random

    # Dummy function to read from a temperature sensor.
    def readTemp():
        return random.randint(80,100)

    # Dummy function to read from a rain sensor.
    def readPrecipitation():
        r = random.randint(0,10)
        if r < 4:
            return 'rain'
        elif r < 8:
            return 'sun'
        else:
            return 'hurricane'

    # Publish one reading of each kind every 10 seconds
    while True:
        mqtt.single("iot-bbb-example/weather/temperature", readTemp(), hostname="test.mosquitto.org")
        mqtt.single("iot-bbb-example/weather/precipitation", readPrecipitation(), hostname="test.mosquitto.org")
        time.sleep(10)

Listing 2: Sensor Sample
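The sensor sample opens a separate broker connection for each `single()` call. As a possible variation, and not something the sample repository does, both readings could be sent over one connection with `paho.mqtt.publish.multiple()`, which accepts a list of message dictionaries. In this sketch the helper names `build_messages` and `publish_once` are hypothetical, and the paho import is deferred so the message-building logic can be tried without the library installed.

```python
import random

BROKER = "test.mosquitto.org"          # same public test broker as Listing 2
TOPIC_PREFIX = "iot-bbb-example/weather"


def read_temp():
    """Dummy temperature reading, mirroring Listing 2."""
    return random.randint(80, 100)


def read_precipitation():
    """Dummy precipitation reading, mirroring Listing 2."""
    r = random.randint(0, 10)
    if r < 4:
        return "rain"
    elif r < 8:
        return "sun"
    return "hurricane"


def build_messages(temperature, precipitation):
    """Return both readings in the dict form accepted by publish.multiple()."""
    return [
        {"topic": TOPIC_PREFIX + "/temperature", "payload": str(temperature)},
        {"topic": TOPIC_PREFIX + "/precipitation", "payload": precipitation},
    ]


def publish_once():
    """Publish one pair of readings over a single broker connection."""
    # Imported here so the helpers above work even without paho-mqtt installed.
    import paho.mqtt.publish as publish
    publish.multiple(build_messages(read_temp(), read_precipitation()),
                     hostname=BROKER)
```

Calling `publish_once()` inside the existing `while True` loop, followed by `time.sleep(10)`, would keep the same 10-second cadence as the original script.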

Now, let’s install python and the required libraries. Note that your system may need additional libraries.

$ sudo apt install python python-paho-mqtt

Finally, we can invoke the scripts. In this case we are simply using two terminal windows on our PC system but these could just as well have been executed on any two internet connected machines. Every 10 seconds, the sensor script will generate dummy weather data which is then passed to the actuator script over MQTT. For now the only action taken is to print a pithy message to standard output. Press ctrl-c to exit from these scripts.

$ python ./iot-mqtt-bbb-sensor.py

Listing 3: Server/sensor invocation

$ python ./iot-mqtt-bbb-actuator.py

Connected. rc = 0

Temperature = 86

Precipitation = hurricane

Buy bread, water and peanut butter.

Temperature = 96

Precipitation = sun

Don't forget the sunscreen

Temperature = 84

Precipitation = rain

Grab an umbrella

Listing 4: Client/actuator invocation

On-Target Development

Now that we have some working code on our PC system, we can turn our attention to the desired target board. In this case, we are using the Beaglebone Black.

The first step is to configure an SD Card with the Debian image. Download the latest IoT Debian image from https://beagleboard.org/latest-images. This image can be written to your SD Card with the Etcher utility.

Once you have created the SD Card, insert it into your Beaglebone Black system and connect an ethernet cable. Now, press and hold the Boot Button (S2) near the SD card slot, and connect the power adapter. Holding switch S2, in this case, forces the system to boot off of the SD Card rather than the onboard eMMC. For more details, Adafruit has good instructions.

There are two main interfaces for development on the Beaglebone.

- HDMI + USB Keyboard: this method uses hardware you likely already have but can be a bit limiting due to the limited horsepower of the Beaglebone compared to your PC system. Additionally, for this exercise, we are using the IoT image provided by beagleboard.org, which does not have a graphical environment. Note that the Beaglebone Black has a micro-HDMI port and thus will likely require a suitable cable or adapter.

- Serial console: this method uses a venerable serial port connection to give you a text-mode connection to the Beaglebone. This requires an appropriate cable and a serial terminal emulator on your PC. Note that the serial debug header on the Beaglebone Black uses 3.3 V logic levels rather than RS-232 voltages, so a USB-to-TTL serial cable is the usual choice. For Windows systems, PuTTY is a good choice. For macOS systems, Serial is a good choice. Command line programs such as Picocom and Minicom are available for most Linux distributions as well as Windows and macOS.

Once you have set up your chosen interface to the Beaglebone, you will see a login prompt. The default username is “debian” with a password of “temppwd”. After logging in, set a custom password. This simple step will make your device more secure than many commercially available IoT devices. Devices with well-known default credentials are a root cause of issues such as the Mirai botnet.

debian@beaglebone:~$ passwd

Changing password for debian.

(current) UNIX password:

Enter new UNIX password:

Retype new UNIX password:

passwd: password updated successfully

Listing 5: Change default password

Now, let’s make sure the system is running the latest Debian updates. This is another IoT security best practice.

debian@beaglebone:~$ sudo apt-get update

debian@beaglebone:~$ sudo apt-get upgrade

debian@beaglebone:~$ sudo reboot

Listing 6: Upgrade all packages

The dependencies needed on the Debian/BBB system are a bit different. There is not a standard Debian package available containing the paho-mqtt library, so we use the standard Python pip package manager instead.

debian@beaglebone:~$ sudo apt-get install python python-pip

debian@beaglebone:~$ pip install paho-mqtt

Listing 7: Installing dependencies on target system

Now simply transfer the python scripts to the target and invoke them as done above. You can use scp, wget, curl or many other mechanisms to deploy the scripts onto your device. Similar to our testing on the PC above, we run two text mode logins to invoke the scripts in separate windows. The output from these is identical to listings 3 and 4 above.

Field Deployment Considerations

Now that we have a working application in the tightly controlled environment that is our local lab, we need to consider what will change as our devices are deployed. The issues discussed here are general in nature and your application use cases will certainly dictate other considerations.

Network Connectivity

The first consideration is to understand what the network connectivity will be in your deployed location. In the exercise above, we used a wired ethernet connection since the Beaglebone Black does not have a WiFi adapter. It is possible to use a USB-WiFi adapter, and certain versions of the Beaglebone hardware have WiFi built in. If you plan to use WiFi, you will need to develop a mechanism for the end user of the device to specify the WiFi credentials. Both WiFi and wired ethernet connections are well supported in Linux on most IoT hardware and provide a reasonable data rate. The speed will ultimately be dictated by the networking provider. However, this type of connection is typically not metered (at least in the United States), so concerns over runaway billing cycles are somewhat mitigated.

IoT applications have other choices for connectivity as well. Some applications use cellular phone data protocols and include a SIM card for connecting to a provider’s network. Depending on the protocol supported by your carrier, and the location of your deployment, you may need to consider throughput and bulk data costs in your system design. Cellular connections are popular in IoT applications due to their ubiquity and relative ease of remote deployment.
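To get a feel for bulk data costs on a metered link, a rough estimate of monthly traffic can be worked out from the publishing interval. The sketch below is only back-of-the-envelope arithmetic: the 10-byte payload and the 100-byte per-message overhead are placeholder assumptions, not measurements, and the real overhead depends on the protocol, on TLS, and on whether each message opens a new connection.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def messages_per_month(messages_per_cycle, cycle_seconds, days=30):
    """Number of messages sent in `days` days, one cycle every `cycle_seconds`."""
    cycles = (days * SECONDS_PER_DAY) // cycle_seconds
    return cycles * messages_per_cycle


def bytes_per_month(payload_bytes, overhead_bytes, messages_per_cycle,
                    cycle_seconds, days=30):
    """Estimated bytes on the wire, given assumed payload and overhead sizes."""
    count = messages_per_month(messages_per_cycle, cycle_seconds, days)
    return count * (payload_bytes + overhead_bytes)


if __name__ == "__main__":
    # Two messages every 10 seconds, as in the sensor sample.
    # payload_bytes and overhead_bytes are placeholder assumptions.
    total = bytes_per_month(payload_bytes=10, overhead_bytes=100,
                            messages_per_cycle=2, cycle_seconds=10)
    print("%.1f MB per 30 days" % (total / 1e6))
```

With these placeholder numbers the sensor sample would send 518,400 messages and roughly 57 MB in 30 days, most of it overhead rather than weather data. On a metered plan that is a reason to consider a longer reporting interval or batching readings.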

Other connectivity options can be considered based on your application requirements:

- Bluetooth/Bluetooth Low Energy: this is useful if your end device does not need a full internet connection and has a gateway device available through which the IoT data of interest can be proxied. This is also commonly used for mesh networks.

- LoRa®/LoRaWAN™: this is a city-scale wireless protocol that is being developed and governed by an industry alliance. Availability is limited but if your rollout plans are in a geography supported by a LoRa network then this is a good choice.

- Sigfox: this is another city-scale wireless protocol but is controlled by a single commercial entity. Again, availability is limited but this may work depending on your needs.

Environment Hostility

Typically, IoT devices are managed and configured over a web interface or a custom application on your desktop or mobile device. Extreme care must be taken and security professionals must be consulted on the design of this portion of your system. Decisions will need to be made on what devices are able to access the configuration interface and whether those devices need to be on the local network or if they will be able to access the device over the internet.

Additionally, IoT devices are regularly installed in uncontrolled environments, such as coffee shops and restaurants, and as such are vulnerable to a wide range of attacks including hostile actors on the local network as well as physical attacks.

Default Credentials

Recent attempts at legislation in the United States have tried to regulate IoT devices to help reduce the likelihood of attacks causing massive disruptions of the internet. The main concrete proposal is that devices must not share initial login and password values. Either a unique set of credentials should be generated for each device in manufacturing and provided to the purchaser, or some mechanism of forcing the end user to set up a username and/or password should be considered a mandatory requirement in your design.
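As an illustration of the first option, per-device credentials could be generated at manufacturing time with Python's standard `secrets` module. This is a minimal sketch, assuming devices are identified by a serial number; the `provision` helper and the serial number format are hypothetical, and a real flow would also need to store and deliver these secrets securely.

```python
import secrets
import string

# Letters and digits only, so the password is easy to print on a label.
ALPHABET = string.ascii_letters + string.digits


def generate_password(length=16):
    """Return a random password drawn from a cryptographically secure source."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def provision(serial_numbers):
    """Map each (hypothetical) device serial number to its own random password."""
    return {serial: generate_password() for serial in serial_numbers}


if __name__ == "__main__":
    for serial, password in provision(["SN0001", "SN0002"]).items():
        print(serial, password)
```

The important property is that no two devices leave the factory with the same login, so a credential leaked from one device cannot be reused across the fleet.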

Physical Access

As mentioned above, the fact that many of these devices are deployed in environments outside of the manufacturer’s control results in a wide range of potential attack vectors. Some attacks require physical access to vulnerable devices. These can involve offline access to device storage, rebooting devices into manufacturing modes and simple denial of service by disconnecting or unplugging devices. Storage media encryption should be used to protect data against offline access. Secure hardware mechanisms such as Trusted Platform Module and Arm TrustZone should be reviewed to determine if they are applicable for your application.

Device Updatability

Finally, the simple fact is that all software has bugs. And more software has more bugs. The amount of code that is running on today’s IoT devices is staggering. Not all bugs will be vulnerabilities leading to exploits, but it should be taken as a given that you will need to provide updates to your deployed devices.

In addition to providing fixes for bugs (and likely security vulnerabilities), providing a strong over-the-air (OTA) update mechanism allows you to deploy new features to your end users. Many minimum viable products are also released in order to get to market quickly with the intent of updating them later with more comprehensive features.

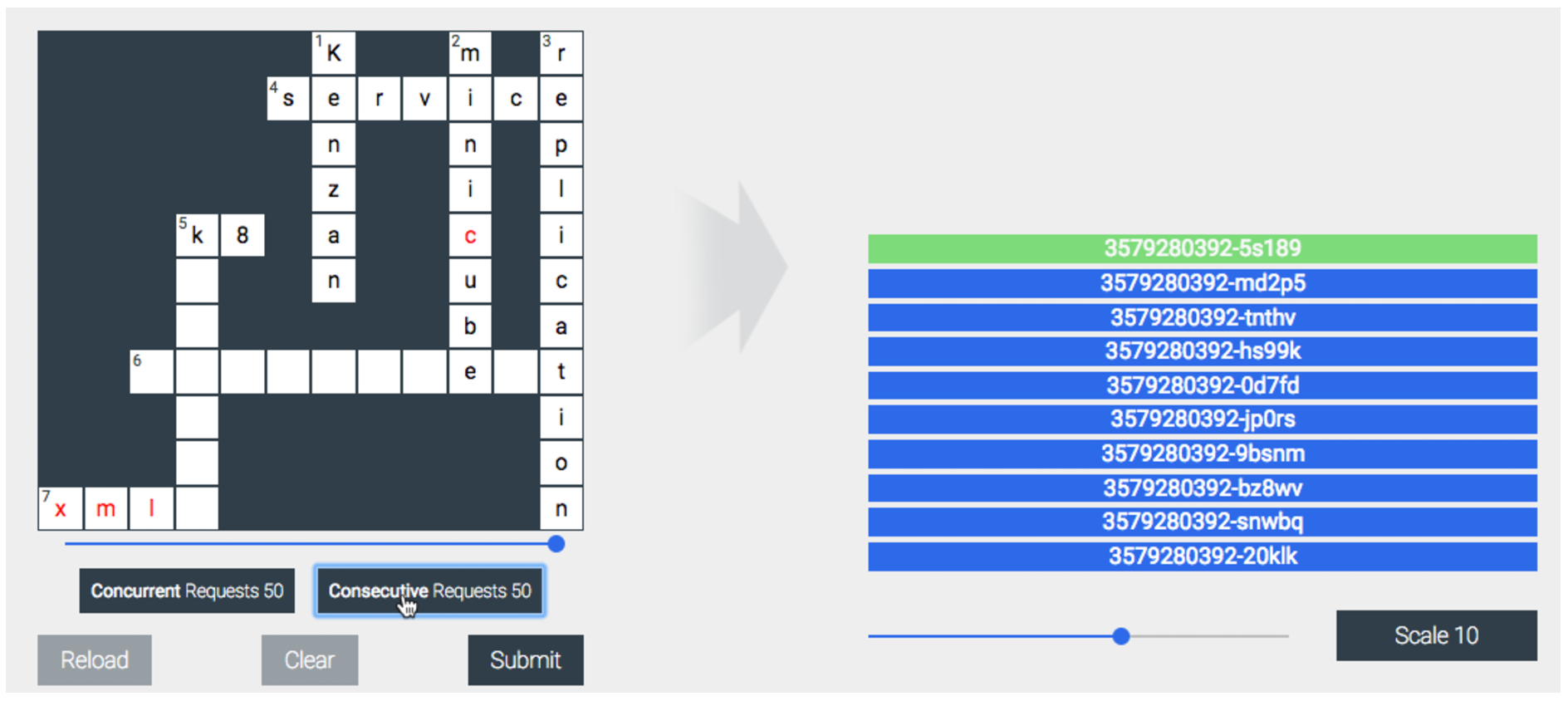

A few characteristics that should be considered when reviewing update solutions are as follows:

- Security: does the solution in question follow industry best practices for certificate and encryption management? Is there active development of the solution resolving security issues within the update technology?

- Robustness: what is the risk of bricking devices due to a failed or interrupted update?

- Fleet management: what is the interface for managing a large fleet of device updates and how well does it integrate with your other device management needs?

- Getting started: how easy is it to add update capability to your design? Does it require your team to become experts on the update technology or can you easily integrate it and remain focused on your system’s value-add?
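One small ingredient of a robust update flow is checking that a downloaded update file is intact before installing it. The sketch below is a generic illustration using SHA-256 from the Python standard library; it is not how Mender or any particular update tool works, and the function names are hypothetical. A checksum only detects corruption or truncation, so a production solution would also verify a cryptographic signature.

```python
import hashlib
import hmac


def sha256_of_chunks(chunks):
    """Return the hex SHA-256 digest of an iterable of byte chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in fixed-size chunks so large images need little memory."""
    with open(path, "rb") as f:
        return sha256_of_chunks(iter(lambda: f.read(chunk_size), b""))


def matches_expected(actual_hex, expected_hex):
    """Compare two hex digests, ignoring case, in constant time."""
    return hmac.compare_digest(actual_hex.lower(), expected_hex.lower())
```

An installer would call `matches_expected(sha256_of_file(path), expected)` and refuse to proceed on a mismatch, which guards against installing a partially downloaded image.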

There are many open source options available, including the project I’m involved with, Mender.io. Mender Hub, a community-supported repository to enable OTA on any board and operating system, has also been released recently.

Next Steps

Let’s wrap up by discussing a few bigger picture items to consider early in your development process. These items are certainly not unique to IoT devices but considering them early can save a lot of pain and rework later.

Manufacturing Considerations

Make sure to involve a member of the team who will be responsible for manufacturing your devices early on in the discussions. Decisions that seem reasonable to us as system developers may have unexpected impacts on manufacturing time. As an example, consider that typically all devices will need a unique ID of some kind; this can be used to identify the device to your device management infrastructure and as a seed for cryptographic validation of the devices to ensure that only authorized devices can connect. One simple data point that can be used as the device ID is the MAC address of any onboard ethernet device (if you have one). In the lab, it is simple enough to boot the device into the target operating system to determine the MAC address. However, in manufacturing, requiring the system software to boot to complete the device assembly can add undue complexity, cost, and time to the assembly line. Where possible, the device ID data should be defined and deterministic without requiring the OS to boot.
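One way to keep the ID deterministic is to derive it purely from the MAC address string, so that a station on the assembly line (reading the MAC from a label or barcode) and the software on the device both compute the same value. This is a minimal sketch; the `bbb-` prefix and the function names are hypothetical choices for illustration.

```python
import re


def normalize_mac(mac):
    """Reduce a MAC address in any common notation to 12 lowercase hex digits."""
    digits = re.sub(r"[^0-9A-Fa-f]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError("expected 12 hex digits in MAC address, got %r" % mac)
    return digits


def device_id(mac, prefix="bbb-"):
    """Build a device ID from a MAC address; the prefix is an arbitrary example."""
    return prefix + normalize_mac(mac)


if __name__ == "__main__":
    # The same ID results regardless of how the MAC was written down.
    print(device_id("00:1A:2B:3C:4D:5E"))
    print(device_id("001a.2b3c.4d5e"))
```

Because the function depends only on its input string, the ID can be assigned and recorded before the operating system ever boots on the device.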

Build Image Reproducibility

This exercise has focused on using the Debian OS as a prototyping platform for the IoT application. This is convenient because the system developers are likely already familiar with the environment and there is a large number of software tools to assist in the software development process. The workflow going from this to a production device is a bit awkward, as it requires managing a golden-master installation into which your application is installed along with required libraries, drivers, etc. This becomes troublesome as your development team grows and access to the golden master becomes a bottleneck.

Using the debootstrap tool from the Debian project is one step removed from the golden-master. Your build workflow changes such that you are installing a base system and your customizations into a subdirectory on your PC Debian install. With this tooling you can also install software for different CPU architectures.

One step beyond the debootstrap tool is to use a build system such as Yocto, Buildroot, or ISAR. These build systems consist of tooling to enable cross-building all the packages needed for your target. The workflow with these systems requires that you develop recipes describing how to build the system and all required packages. These recipes, coupled with configuration data, are used to cross-build the system from scratch. This removes the bottleneck on the golden master and allows independent developers to recreate the build from scratch when needed.

Security

The IoT market has a deserved reputation for producing products with glaring security flaws. Simple mistakes, such as reusing default credentials, leaving unnecessary services installed, and not providing an easy and automatic over-the-air update mechanism, have produced a large variety of IoT devices that are ripe for attack. Make sure to involve security engineers early in your design cycle to help guide your team. Also, keep in mind that the only truly secure software is that which is not installed. Make sure you minimize the software packages installed in your design to those critical to your defined use cases; avoid feature-creep and keep your scope well defined.

Conclusions

The Internet of Things is an exciting and growing industry, adding new functionality and use cases never before possible. The availability of low-cost hardware and software makes it extremely easy to get started building a design, and the Beaglebone Black running the Debian operating system is a convenient starting point. With a bit of care and planning, you should be well on your way to developing the next great thing.

Author Bio

Drew is currently part of the Mender.io open source project to deploy OTA software updates to embedded Linux devices. He has worked on embedded projects such as RAID storage controllers, Direct and Network attached storage devices and graphical pagers.

He has spent the last 7 years working in Operating System Professional Services helping customers develop production embedded Linux systems. He has spent his career in embedded software and developer tools and has focused on Embedded Linux and Yocto for about 10 years. He is currently a Technical Solutions Engineer at Northern.Tech (the company behind the OSS project Mender.io), helping customers develop safer, more secure connected devices.

Drew has presented at many conferences, including OSCON, Embedded Linux Conference, Southern California Linux Expo (SCALE), Embedded Systems Conference, All Systems Go, and other technology conferences.