In Part 1 of our series, we got our local Kubernetes cluster up and running with Docker, Minikube, and kubectl. We set up an image repository, and tried building, pushing, and deploying a container image with code changes we made to the Hello-Kenzan app. It’s now time to automate this process.

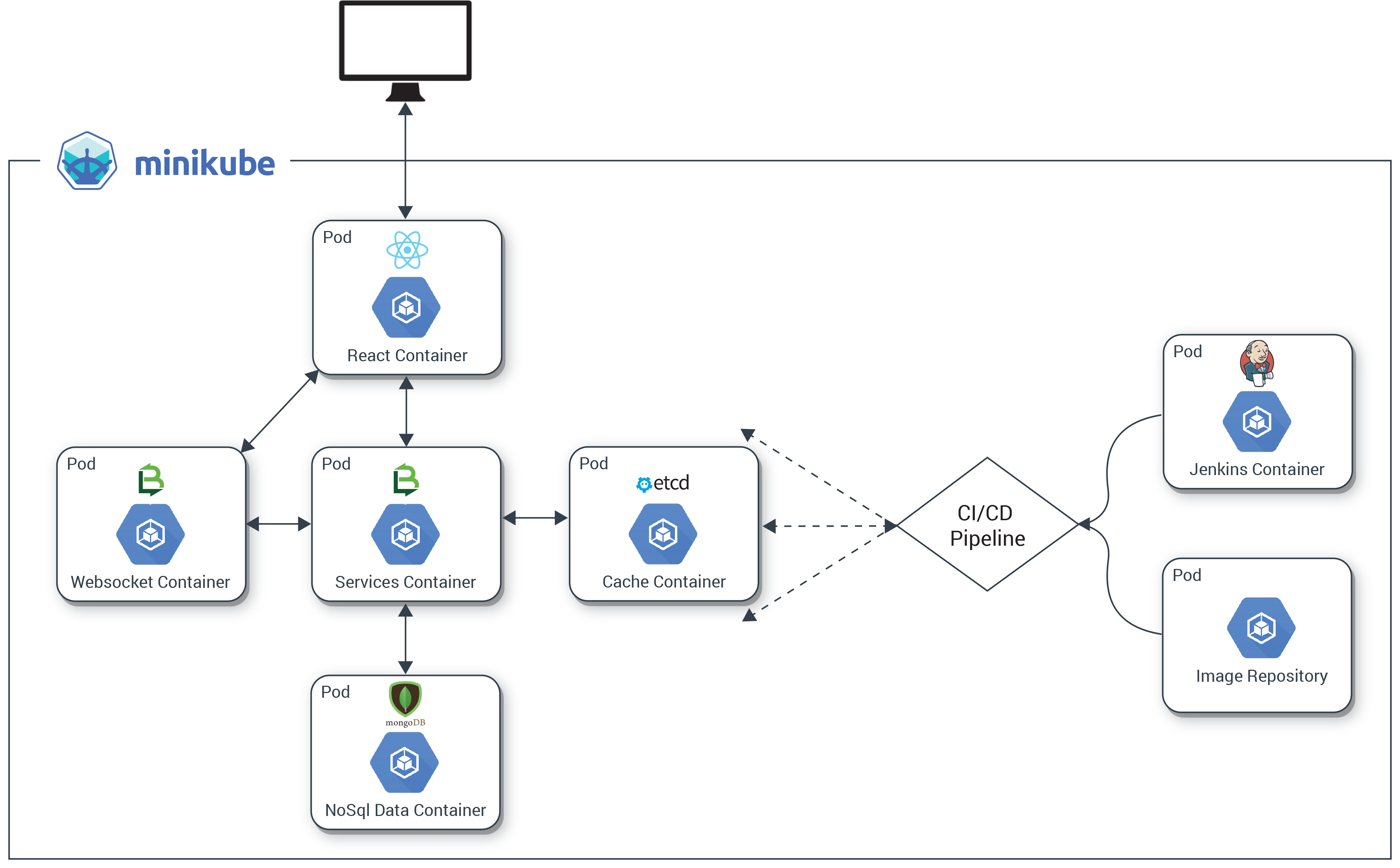

In Part 2, we’ll set up continuous delivery for our application by running Jenkins in a pod in Kubernetes. We’ll create a pipeline using a Jenkins 2.0 Pipeline script that automates building our Hello-Kenzan image, pushing it to the registry, and deploying it in Kubernetes. That’s right: we are going to deploy pods from a registry pod using a Jenkins pod. While this may sound like a bit of deployment alchemy, once the infrastructure and application components are all running on Kubernetes, it makes the management of these pieces easy since they’re all under one ecosystem.

With Part 2, we’re laying the last bit of infrastructure we need so that we can run our Kr8sswordz Puzzle in Part 3.


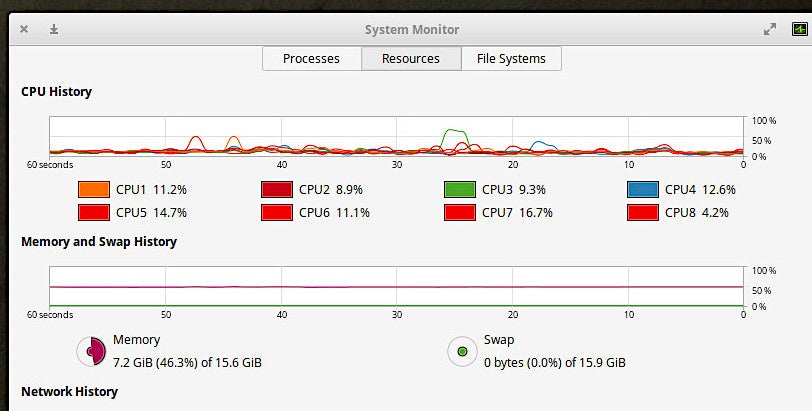

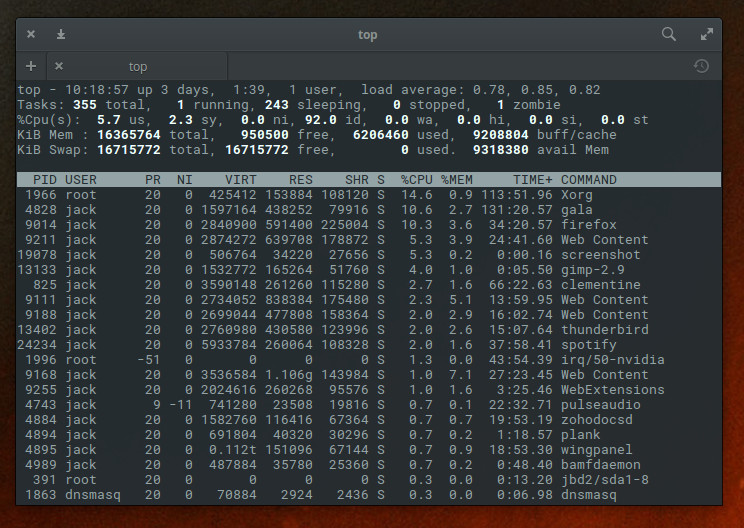

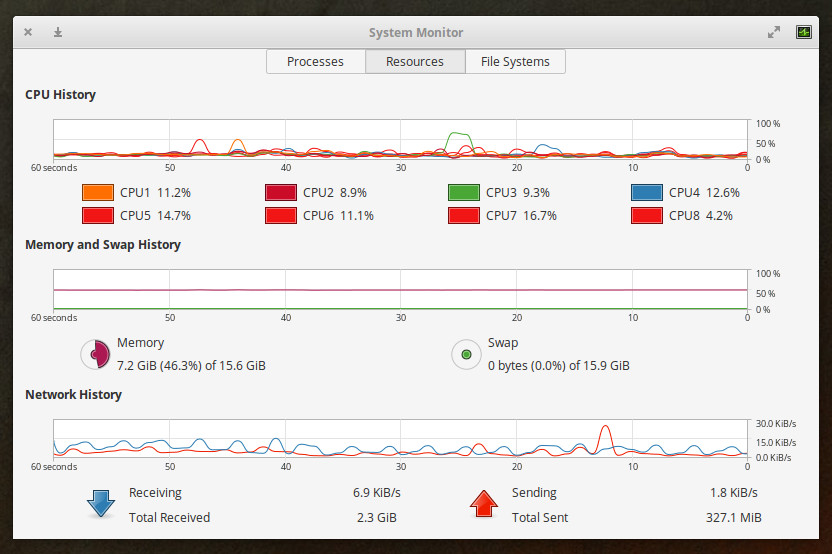

This tutorial only runs locally in Minikube and will not work on the cloud. You’ll need a computer running an up-to-date version of Linux or macOS, with at least 8 GB of RAM (16 GB is recommended). For best performance, reboot your computer and keep the number of running apps to a minimum.

Creating and Building a Pipeline in Jenkins

Before you begin, you’ll want to make sure you’ve run through the steps in Part 1, in which we set up our image repository running in a pod (to do so quickly, you can run the npm part1 automated script detailed below).

If you previously stopped Minikube, you’ll need to start it up again. Enter the following terminal command, and wait for the cluster to start:

minikube start

You can check the cluster status and view all the pods that are running.

kubectl cluster-info
kubectl get pods --all-namespaces

Make sure that the registry pod has a Status of Running.
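If you would rather check this from a script than by eye, one option is to filter the pod table for the registry pod. The sketch below is only an illustration and is not part of the tutorial scripts: the helper name `pod_status` is hypothetical, and it assumes the registry pod's name begins with `registry` and that the pod lives in the default namespace, so the plain `kubectl get pods` column layout (NAME, READY, STATUS, and so on) applies.

```shell
# Hypothetical helper: print the STATUS column for every pod whose name
# starts with the given prefix. Expects `kubectl get pods` output on stdin
# (columns: NAME READY STATUS RESTARTS AGE); the header row is skipped.
pod_status() {
  awk -v prefix="$1" 'NR > 1 && index($1, prefix) == 1 { print $3 }'
}

# Usage against a live cluster (assumed pod name prefix "registry"):
#   kubectl get pods | pod_status registry
```

Because the match is a simple prefix match, `registry` would also report a pod named `registry-ui-...` if one is running; pass a longer prefix to narrow it down.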

We are ready to build out our Jenkins infrastructure.

Remember, you don’t actually have to type the commands below—just press Enter at each step and the script will enter the command for you!

1. First, let’s build the Jenkins image we’ll use in our Kubernetes cluster.

docker build -t 127.0.0.1:30400/jenkins:latest -f applications/jenkins/Dockerfile applications/jenkins

2. Once again we’ll need to set up the Socat Registry proxy container to push images, so let’s build it. Feel free to skip this step in case the socat-registry image already exists from Part 1 (to check, run docker images).

docker build -t socat-registry -f applications/socat/Dockerfile applications/socat

3. Run the proxy container from the image.

docker stop socat-registry; docker rm socat-registry; docker run -d -e "REG_IP=`minikube ip`" -e "REG_PORT=30400" --name socat-registry -p 30400:5000 socat-registry


This step will fail if local port 30400 is currently in use by another process. You can check if there’s any process currently using this port by running the command

4. With our proxy container up and running, we can now push our Jenkins image to the local repository.

docker push 127.0.0.1:30400/jenkins:latest

You can see the newly pushed Jenkins image in the registry UI using the following command.

minikube service registry-ui

5. The proxy’s work is done, so you can go ahead and stop it.

docker stop socat-registry

6. Deploy Jenkins, which we’ll use to create our automated CI/CD pipeline. It will take the pod a minute or two to roll out.

kubectl apply -f manifests/jenkins.yaml; kubectl rollout status deployment/jenkins

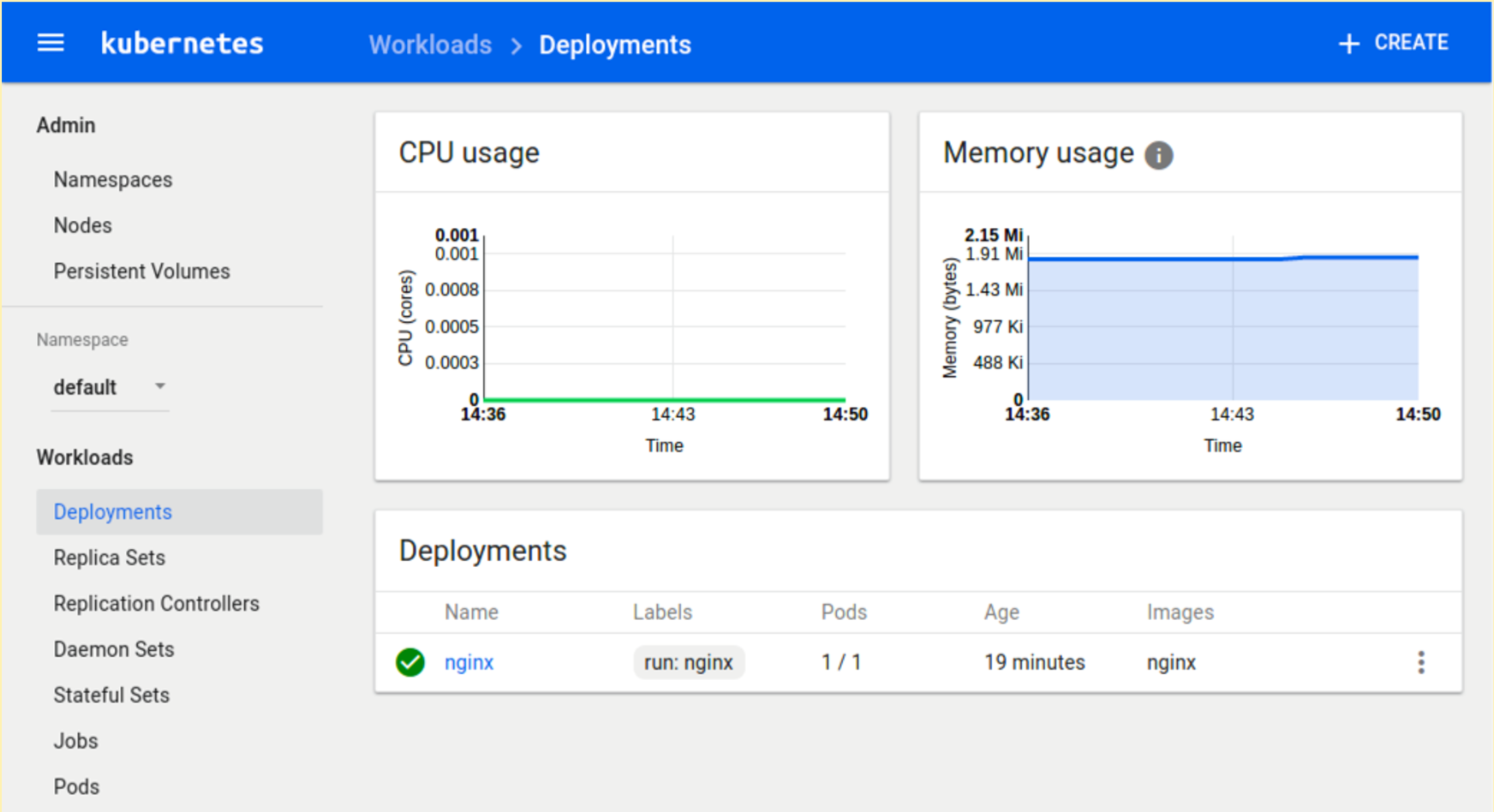

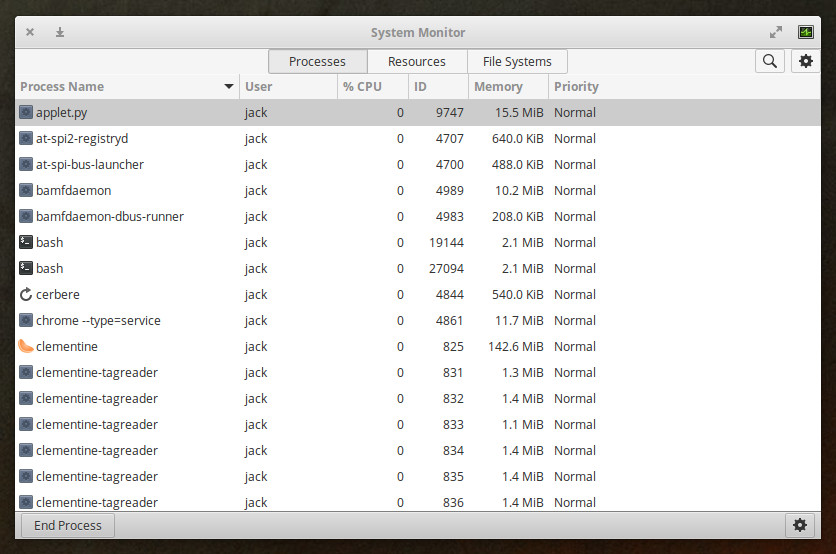

Inspect all the pods that are running. You’ll see a pod for Jenkins now.

kubectl get pods


Jenkins as a CD tool needs special rights in order to interact with the Kubernetes cluster, so we’ve set up RBAC (Role-Based Access Control) authorization for it inside the jenkins.yaml deployment manifest. RBAC consists of a Role, a ServiceAccount, and a Binding object that binds the two together. Here’s how we configured Jenkins with these resources:

- Role: For simplicity we leveraged the pre-existing ClusterRole “cluster-admin”, which by default has unlimited access to the cluster. (In a real-life scenario you might want to narrow down Jenkins’ access rights by creating a new role with the least privileged PolicyRule.)
- ServiceAccount: We created a new ServiceAccount named “Jenkins”. The property “automountServiceAccountToken” has been set to true; this will automatically mount the authentication resources needed for a kubeconfig context to be set up on the pod (i.e., cluster info, a user represented by a token, and a namespace).
- RoleBinding: We created a ClusterRoleBinding that binds the “Jenkins” ServiceAccount to the “cluster-admin” ClusterRole.

Lastly, we tell our Jenkins deployment to run as the Jenkins ServiceAccount.
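To make the RBAC pieces concrete, here is a rough sketch of what a ServiceAccount and ClusterRoleBinding of this kind can look like. It is not copied from the repository's jenkins.yaml, which may differ. The file name `jenkins-rbac-sketch.yaml`, the binding name `jenkins-rbac`, and the `default` namespace are assumptions made for illustration, and the account name is written in lowercase because Kubernetes object names must be lowercase.

```shell
# Write an illustrative RBAC manifest to a scratch file (hypothetical name).
cat > jenkins-rbac-sketch.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins                      # assumed lowercase form of "Jenkins"
automountServiceAccountToken: true   # mount the token into pods using this account
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jenkins-rbac                 # hypothetical binding name
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: default               # assumed namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin                # pre-existing role with unrestricted access
EOF

# Optional check against a running cluster, without creating anything
# (requires kubectl 1.18 or newer):
#   kubectl apply --dry-run=client -f jenkins-rbac-sketch.yaml
```

A deployment then opts in to the account by setting `serviceAccountName: jenkins` in its pod template spec.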


Notice our Jenkins deployment has an initContainer. This is a container that will run to completion before the main container is deployed on our pod. The job of this init container is to create a kubeconfig file based on the provided context and to share it with the main Jenkins container through an “emptyDir” volume.
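The init container pattern itself can be sketched in a few lines. The manifest below is a generic, hypothetical example and is not the tutorial's Jenkins deployment: the pod name, container names, `busybox` image, `/shared` mount path, and the placeholder file content are all assumptions. It only shows the mechanics, an init container writing a file into an `emptyDir` volume that the main container then reads.

```shell
# Write an illustrative pod manifest to a scratch file (hypothetical name).
cat > init-container-sketch.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: init-demo
spec:
  volumes:
    - name: shared-config
      emptyDir: {}                   # scratch volume that lives as long as the pod
  initContainers:
    - name: write-config             # runs to completion before "main" starts
      image: busybox
      command: ["sh", "-c", "echo placeholder-config > /shared/config"]
      volumeMounts:
        - name: shared-config
          mountPath: /shared
  containers:
    - name: main
      image: busybox
      command: ["sh", "-c", "cat /shared/config && sleep 3600"]
      volumeMounts:
        - name: shared-config
          mountPath: /shared
EOF
```

In the Jenkins case described above, the file being shared is a kubeconfig rather than a placeholder string.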

7. Open the Jenkins UI in a web browser.

minikube service jenkins

8. Display the Jenkins admin password with the following command, and right-click to copy it.

kubectl exec -it `kubectl get pods --selector=app=jenkins --output=jsonpath='{.items..metadata.name}'` -- cat /var/jenkins_home/secrets/initialAdminPassword

9. Switch back to the Jenkins UI. Paste the Jenkins admin password in the box and click Continue. Click Install suggested plugins. Plugins have actually been pre-downloaded during the Jenkins image build, so this step should finish fairly quickly.


One of the plugins being installed is Kubernetes Continuous Deploy, which allows Jenkins to directly interact with the Kubernetes cluster rather than through kubectl commands. This plugin was pre-downloaded with the Jenkins image build.

10. Create an admin user and credentials, and click Save and Continue. (Make sure to remember these credentials as you will need them for repeated logins.)

11. On the Instance Configuration page, click Save and Finish. On the next page, click Restart (if it appears to hang for some time on restarting, you may have to refresh the browser window). Log in to Jenkins.

12. Before we create a pipeline, we first need to provision the Kubernetes Continuous Deploy plugin with a kubeconfig file that will allow access to our Kubernetes cluster. In Jenkins on the left, click on Credentials, select the Jenkins store, then Global credentials (unrestricted), and Add Credentials on the left menu.

Enter the following values precisely as indicated:

- Kind: Kubernetes configuration (kubeconfig)
- ID: kenzan_kubeconfig
- Kubeconfig: From a file on the Jenkins master
- File: /var/jenkins_home/.kube/config

Finally, click OK.

13. We now want to create a new pipeline for use with our Hello-Kenzan app. Back on Jenkins Home, on the left, click New Item.

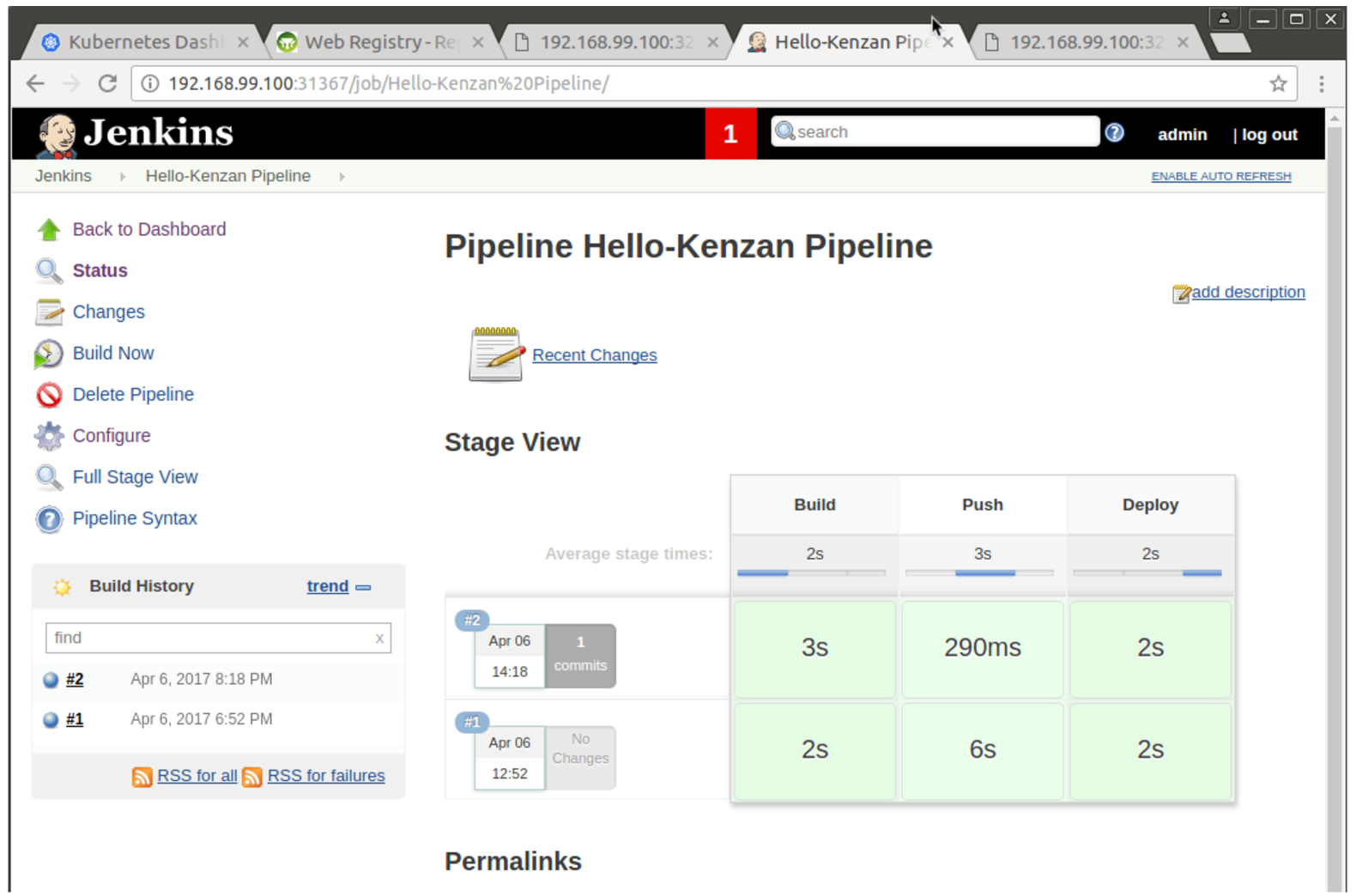

Enter the item name as Hello-Kenzan Pipeline, select Pipeline, and click OK.

14. Under the Pipeline section at the bottom, change the Definition to be Pipeline script from SCM.

15. Change the SCM to Git. Change the Repository URL to be the URL of your forked Git repository, such as https://github.com/[GIT USERNAME]/kubernetes-ci-cd.


Note that for the Script Path, we are using a Jenkinsfile located in the root of our project in our GitHub repo. This defines the build, push, and deploy steps for our hello-kenzan application.

Click Save. On the left, click Build Now to run the new pipeline. You should see it run through the build, push, and deploy steps in a few seconds.
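As a mental model, a build, push, and deploy run boils down to roughly three commands. The sketch below only prints them rather than running them, and it is not the repository's Jenkinsfile: the function name `print_pipeline_steps`, the image name, the tag, and the manifest path are all hypothetical. The real pipeline deploys through the Kubernetes Continuous Deploy plugin, so `kubectl apply` here is just a stand-in for that step.

```shell
# Hypothetical dry-run helper: print the commands for one pipeline run.
#   $1 = image name including registry, $2 = tag,
#   $3 = Docker build context directory, $4 = deployment manifest path
print_pipeline_steps() {
  echo "docker build -t $1:$2 $3"   # build stage
  echo "docker push $1:$2"          # push stage
  echo "kubectl apply -f $4"        # deploy stage (stand-in for the plugin)
}

# Example with assumed values; nothing is executed, only printed.
print_pipeline_steps 127.0.0.1:30400/hello-kenzan abc1234 \
  applications/hello-kenzan path/to/deployment.yaml
```

Tagging each image with something unique per run, such as a short Git commit hash, is a common way to make sure every deployment picks up the freshly built image.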

16. After all pipeline stages are colored green as complete, view the Hello-Kenzan application.

minikube service hello-kenzan

You might notice that you’re not seeing the uncommitted change you previously made to index.html in Part 1. That’s because Jenkins wasn’t using your local code. Instead, Jenkins pulled the code from your forked repo on GitHub, used that code to build the image, push it, and then deploy it.

Pushing Code Changes Through the Pipeline

17. Now let’s see some Continuous Integration in action! Try changing the index.html in our Hello-Kenzan app, then building again to verify that the Jenkins build process works.

a. Open applications/hello-kenzan/index.html in a text editor.

nano applications/hello-kenzan/index.html

b. Add the following HTML at the end of the file (or any other HTML you like). (Tip: You can right-click in nano and choose Paste.)

<p style="font-family:sans-serif">For more from Kenzan, check out our
<a href="http://kenzan.io">website</a>.</p>

c. Press Ctrl+X to close the file, type Y to confirm saving the changes, and press Enter to accept the filename and write the file.

d. Commit the changed file to your Git repo (you may need to enter your GitHub credentials):

git commit -am "Added message to index.html"
git push

In the Jenkins UI, click Build Now to run the build again.

18. View the updated Hello-Kenzan application. You should see the message you added to index.html. (If you don’t, hold down Shift and refresh your browser to force it to reload.)

minikube service hello-kenzan

And that’s it! You’ve successfully used your pipeline to automatically pull the latest code from your Git repository, build and push a container image to your cluster, and then deploy it in a pod. And you did it all with one click—that’s the power of a CI/CD pipeline.

If you’re done working in Minikube for now, you can go ahead and stop the cluster by entering the following command:

minikube stop

Automated Scripts

If you need to walk through the steps we did again (or do so quickly), we’ve provided npm scripts that will automate running the same commands in a terminal.

1. To use the automated scripts, you’ll need to install NodeJS and npm. On Linux, follow the NodeJS installation steps for your distribution. To quickly install NodeJS and npm on Ubuntu 16.04 or higher, use the following terminal commands.

a. curl -sL https://deb.nodesource.com/setup_7.x | sudo -E bash -

b. sudo apt-get install -y nodejs

2. Change directories to the cloned repository and install the interactive tutorial script:

a. cd ~/kubernetes-ci-cd

b. npm install

3. Start the script:

npm run part1 (or part2, part3, part4 of the blog series)

4. Press Enter to proceed running each command.

Up Next

In Parts 3 and 4, we will deploy our Kr8sswordz Puzzle app through a Jenkins CI/CD pipeline. We will demonstrate its use of caching with etcd, as well as scaling the app up with multiple puzzle service instances so that we can try running a load test. All of this will be shown in the UI of the app itself so that we can visualize these pieces in action.

Curious to learn more about Kubernetes? Enroll in Introduction to Kubernetes, a FREE training course from The Linux Foundation, hosted on edX.org.

This article was revised and updated by David Zuluaga, a front end developer at Kenzan. He was born and raised in Colombia, where he earned his BE in Systems Engineering. After moving to the United States, he received his master’s degree in computer science at Maharishi University of Management. David has been working at Kenzan for four years, dynamically moving throughout a wide range of areas of technology, from front-end and back-end development to platform and cloud computing. David’s also helped design and deliver training sessions on Microservices for multiple client teams.