Logging is complex by nature, and containerized environments add new challenges that need to be addressed. In this article we describe the current state of the Fluentd ecosystem and how Fluent Bit (a Fluentd sub-project) is filling the gaps in cloud native environments. Finally, we give an overview of the new Fluent Bit v0.13 release and its major improvements for Kubernetes users.

Fluentd Ecosystem

Fluentd is an open source log processor and aggregator hosted by the Cloud Native Computing Foundation. It was started in 2011 by Sadayuki Furuhashi (Treasure Data co-founder), who wanted to solve the common pains of logging in production environments, most of them related to unstructured messages, security, aggregation, and customizable inputs and outputs, among others. Fluentd has been open source from the beginning, and that decision was key in allowing many contributors to keep expanding the product. Today there are more than 700 plugins available.

The Fluentd community created not only plugins but also language-specific connectors that developers can use to ship logs from their own applications to Fluentd over the network; the best examples are the connectors for Python, Golang, NodeJS and Java. From that point on, Fluentd was no longer a single project but a complete logging ecosystem that kept expanding, including into the container space. The Fluentd Docker driver was the entry point, and then Red Hat contributed a Kubernetes Metadata Filter for Fluentd, which helped make it a first-class citizen and a reliable solution for Kubernetes clusters.

What does the Kubernetes Filter do in Fluentd? Applications running in Kubernetes are usually not aware of the context in which they run, so the logs they generate lack information about the origin of each entry, such as container ID, container name, Pod name, Pod ID, annotations and labels. The Kubernetes Filter enriches each application log in Kubernetes with the proper metadata.
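
The enrichment step described above can be pictured with a minimal Python sketch. It is not the filter's actual implementation: the function name `enrich`, the `kubernetes` key and the metadata field names are assumptions chosen for illustration only.

```python
import json


def enrich(record, pod_metadata):
    """Return a copy of `record` with the Pod metadata attached.

    The "kubernetes" key and the field names below are illustrative
    assumptions, not a documented output format.
    """
    enriched = dict(record)                      # leave the original record untouched
    enriched["kubernetes"] = dict(pod_metadata)  # attach the origin information
    return enriched


# Hypothetical application log as it might be read from a container log file.
raw = {"log": "GET /index.html 200", "stream": "stdout"}

# Hypothetical metadata a log processor could obtain for the Pod.
meta = {
    "pod_name": "apache-logs",
    "container_name": "apache",
    "labels": {"app": "apache-logs"},
    "annotations": {},
}

enriched = enrich(raw, meta)
print(json.dumps(enriched, indent=2))
```

The original record is copied rather than modified, so the same raw entry could still be routed elsewhere without the extra metadata.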

Fluent Bit

While the Fluentd ecosystem continues to grow, Kubernetes users needed specific improvements in performance, monitoring and flexibility. Fluentd is a strong and reliable solution for log processing and aggregation, but the team was always looking for ways to improve overall performance in the ecosystem. Fluent Bit was born as a lightweight log processor to fill the gaps in cloud native environments.

Fluent Bit is a CNCF sub-project under the umbrella of Fluentd. Written in C and based on the design and experience of Fluentd, it has a pluggable architecture with built-in networking and security support. There are around 45 plugins available across inputs, filters and outputs. The most commonly used plugins in Fluent Bit are:

- in_tail: read and parse log files from the file system
- in_systemd: read logs from the Systemd journal
- in_syslog: get syslog messages over the network (TCP/UDP modes)
- in_kmsg: read messages from the Linux Kernel log buffer
- filter_kubernetes: enrich logs with Kubernetes metadata (labels, annotations)
- filter_parser: convert unstructured messages to structured messages
- filter_record_modifier: alter record content, append or remove keys
- out_elasticsearch: send logs to an Elasticsearch database
- out_http: send logs to a custom HTTP end-point
- out_nats: send logs to a NATS streaming server
- out_forward: send logs to a remote Fluentd

Fluent Bit was started almost 3 years ago, and in the last year alone it was deployed more than 3 million times in Kubernetes clusters. The community around Fluentd and Kubernetes has been key to its evolution and its positive impact on the ecosystem.

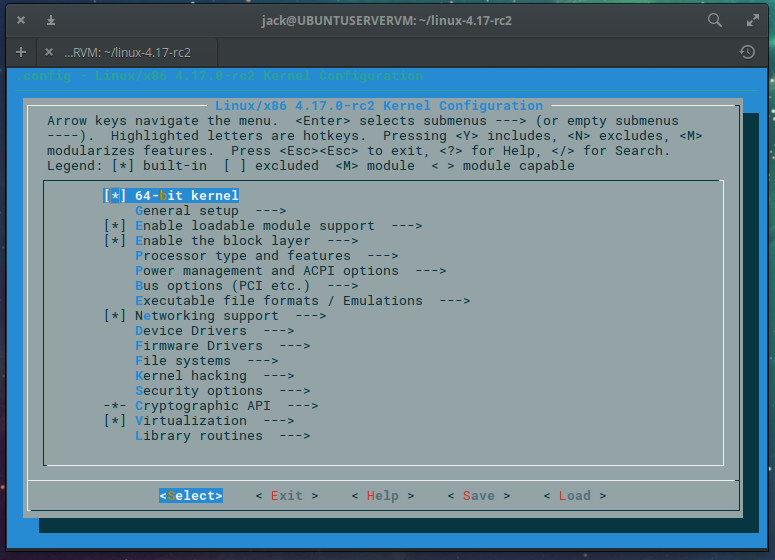

Fluent Bit in Kubernetes

Log processors such as Fluentd or Fluent Bit have to do some extra work in Kubernetes environments to enrich logs with the proper metadata. An important actor is the Kubernetes API Server, which provides that information.

The model works pretty well, but the community has asked for improvements in certain areas:

- Logs generated by Pods are encapsulated in JSON, but the original log message is likely unstructured. Logs from an Apache Pod also differ from those of a MySQL Pod. How should different formats be handled?
- There are cases where it would be ideal to exclude certain Pod logs, for example: skip logs from Pod ABC.
- Gathering insights from the log processor: how can it be monitored with Prometheus?

The new and exciting Fluent Bit 0.13 aims to address the needs described above. Let’s explore it a bit more.

Fluent Bit v0.13: What’s new?

With perfect timing, Fluent Bit 0.13 is being released at KubeCon+CloudNativeCon Europe 2018. Months of work by the developers and the community have produced one of the most exciting versions so far; here are the highlights.

Pods suggest a parser through a declarative annotation

From now on, Pods can suggest a predefined, known parser to the log processor. A Pod running an Apache web server, for example, may suggest a parser through a declarative annotation.

The new annotation fluentbit.io/parser lets a Pod suggest the predefined parser apache to the log processor (Fluent Bit), so the data is interpreted as a properly structured message.
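
As a rough illustration of the idea, the Python sketch below shows how a log processor could pick a parser from a Pod annotation. It is not Fluent Bit's code: the `PARSERS` registry, the simplified regular expression, the annotation key constant and the sample log line are all assumptions made for this example.

```python
import re

# Annotation key assumed for this sketch.
PARSER_ANNOTATION = "fluentbit.io/parser"

# Hypothetical registry of predefined parsers: name -> regex with named groups.
# The pattern is a simplified stand-in, not the parser shipped with Fluent Bit.
PARSERS = {
    "apache": re.compile(
        r'^(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
        r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<code>\d{3}) (?P<size>\S+)'
    ),
}


def parse_with_suggestion(message, annotations):
    """Parse `message` with the parser the Pod suggests, if any.

    Falls back to the unstructured message when no parser is suggested,
    the suggested parser is unknown, or the message does not match.
    """
    parser = PARSERS.get(annotations.get(PARSER_ANNOTATION))
    if parser is None:
        return {"log": message}
    match = parser.match(message)
    if match is None:
        return {"log": message}
    return match.groupdict()


# Made-up access log line used only to exercise the sketch.
line = '10.0.0.1 - - [02/May/2018:11:55:00 +0000] "GET /index.html HTTP/1.1" 200 512'
structured = parse_with_suggestion(line, {PARSER_ANNOTATION: "apache"})
unstructured = parse_with_suggestion(line, {})
print(structured)
```

The annotation is treated as a suggestion only: when it is missing or names an unknown parser, the record passes through unchanged instead of being dropped.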

Pods suggest to exclude the logs

If for some reason the logs from a specific Pod should not be processed by the log processor, this can be suggested through the declarative annotation fluentbit.io/exclude: "true":

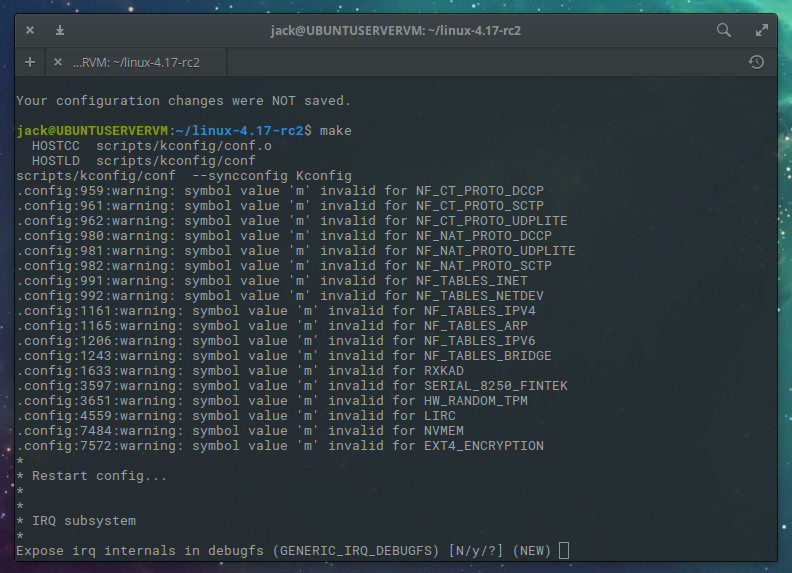
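
On the processing side, honoring that annotation could look roughly like the Python sketch below. The function name `should_process` and the Pod names are hypothetical, and treating any value other than "true" as "do not exclude" is an assumption of this example.

```python
EXCLUDE_ANNOTATION = "fluentbit.io/exclude"


def should_process(annotations):
    """Return False when the Pod asks for its logs to be excluded."""
    value = annotations.get(EXCLUDE_ANNOTATION, "false")
    return value.strip().lower() != "true"


# Hypothetical Pods with their annotations.
pods = [
    {"name": "apache-logs", "annotations": {}},
    {"name": "noisy-debug", "annotations": {EXCLUDE_ANNOTATION: "true"}},
    {"name": "mysql", "annotations": {EXCLUDE_ANNOTATION: "false"}},
]

kept = [pod["name"] for pod in pods if should_process(pod["annotations"])]
print(kept)
```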

Monitor the Fluent Bit logging Pipeline

Fluent Bit 0.13 comes with built-in support for metrics, which are exported through an HTTP end-point. By default the metrics and overall information are returned in JSON, but they can also be retrieved in Prometheus format. Examples:

1. General information: $ curl -s http://127.0.0.1:2020 | jq

2. Overall Metrics in JSON: $ curl -s http://127.0.0.1:2020/api/v1/metrics | jq

3. Metrics in Prometheus Format: $ curl -s http://127.0.0.1:2020/api/v1/metrics/prometheus

4. Connecting Prometheus to Fluent Bit Metrics end-point
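
For a quick check without a full Prometheus setup, the text returned by the Prometheus end-point can also be read from a short script. The Python sketch below assumes the address used in the curl examples above; the helper names and the sample metric names are made up for illustration and are not real Fluent Bit metrics.

```python
import urllib.request

# Address taken from the curl examples; adjust it to your deployment.
METRICS_URL = "http://127.0.0.1:2020/api/v1/metrics/prometheus"


def fetch_metrics(url=METRICS_URL, timeout=5):
    """Download the metrics text from the HTTP end-point."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def parse_prometheus(text):
    """Parse Prometheus exposition text into {series: value}.

    Comment lines are skipped and an optional trailing timestamp is ignored.
    """
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "}" in line:
            # Label values may contain spaces, so split after the closing brace.
            end = line.rindex("}") + 1
            series, rest = line[:end], line[end:].split()
        else:
            parts = line.split()
            series, rest = parts[0], parts[1:]
        if rest:
            samples[series] = float(rest[0])
    return samples


# Hypothetical sample in exposition format; the metric names are invented.
sample = 'example_records_total{name="demo.0"} 57 1509150350542\nexample_up 1\n'
parsed = parse_prometheus(sample)
print(parsed)
```

Against a running instance, `parse_prometheus(fetch_metrics())` would return the live values, provided the HTTP server is enabled and reachable at that address.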

New Enterprise output connectors

Output connectors are an important piece of the logging pipeline; as part of the 0.13 release we are adding the following plugins:

- Apache Kafka
- Microsoft Azure
- Splunk

Meet Fluentd and Fluent Bit maintainers at KubeCon+CloudNativeCon Europe 2018!

Masahiro Nakagawa and Eduardo Silva, maintainers of Fluentd and Fluent Bit, will be at KubeCon presenting the projects, engaging with the community and discussing roadmaps. Join the conversation! Check out the schedule:

- Fluentd Project Intro: May 2nd, 11:55
- Getting Started with Logging in Kubernetes: May 3rd, 15:30
- Fluentd / Fluent Bit deep dive: May 4th, 16:25

Also don’t forget to stop by the CNCF booth in the exhibitors hall!

Eduardo Silva is a Software Engineer at Treasure Data, where he is part of the open source Fluentd engineering team. He is passionate about scalability, performance and logging. One of his primary roles at Treasure Data is to maintain and develop Fluent Bit, a lightweight log processor for cloud native environments.

Learn more at KubeCon + CloudNativeCon EU, May 2-4, 2018 in Copenhagen, Denmark.