If you’ve ever used an embedded Linux development device with wireless networking, you’ve likely benefited from the work of Marcel Holtmann, the maintainer of the BlueZ Bluetooth daemon since 2004, who spoke at an Embedded Linux Conference Europe panel in October.

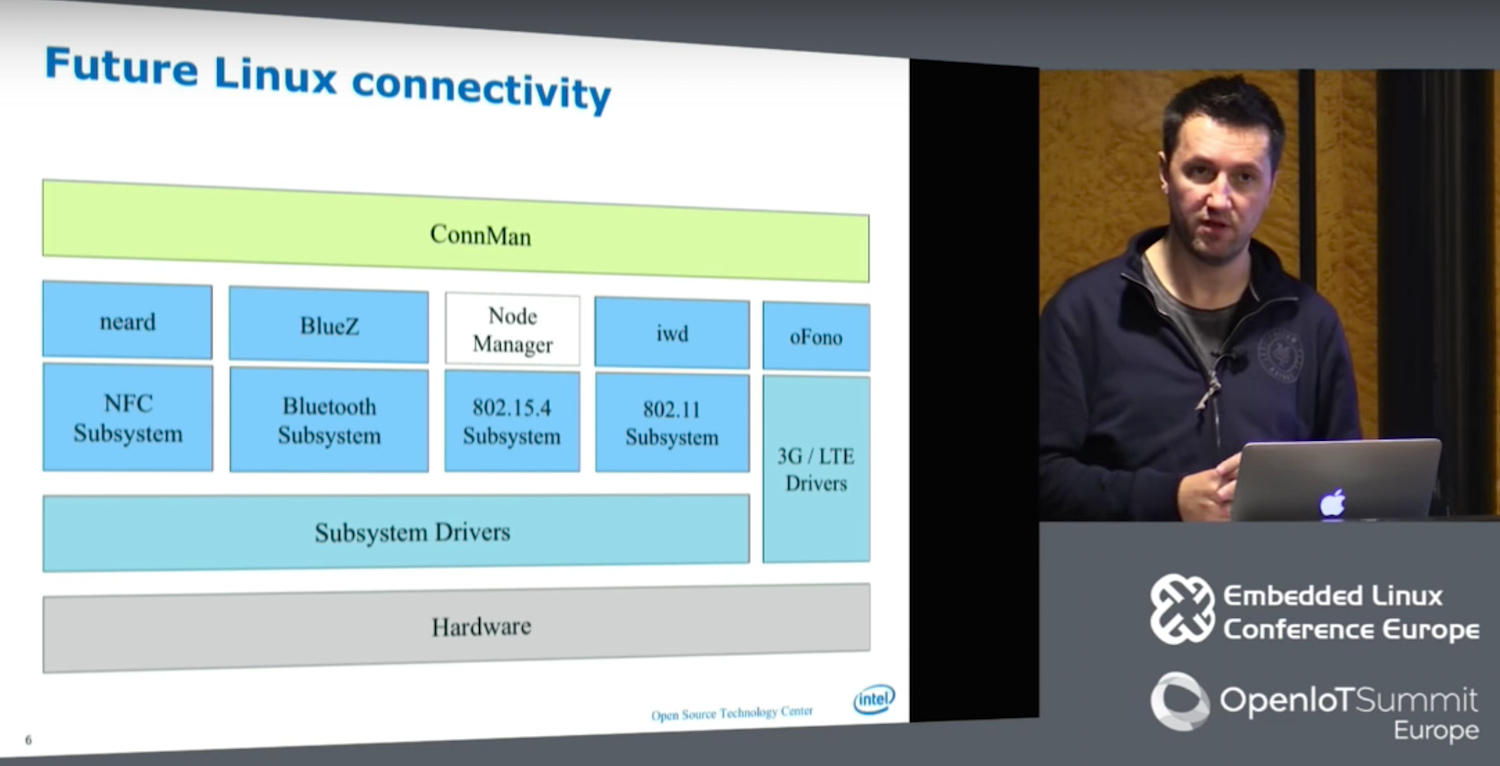

In 2007 Holtmann joined Intel’s Open Source Technology Center (OTC), where he created ConnMan (Internet connectivity), oFono (cellular telephony), and PACrunner (proxy handling). Over the last year, Holtmann and other OTC developers have been developing a replacement for the wpa_supplicant WiFi daemon called IWD (Internet Wireless Daemon). In the process, they have streamlined the entire Linux communications stack.

“We decided to create a wireless daemon that actually works on IoT devices,” said Holtmann in the presentation called “New Wireless Daemon for Linux.”

The IWD is now mostly complete, featuring a smaller footprint and more streamlined workflow than wpa_supplicant while adding support for the latest wireless technologies. The daemon was also developed with the help of the OTC’s Denis Kenzior, Andrew Zaborowski, Tim Kourt, Rahul Rahul, and Mat Martineau.

IWD aims to solve problems in wpa_supplicant including lack of persistence and limited feedback. “Wpa_supplicant doesn’t remember anything,” Holtmann told the ELCE audience in Berlin. “By comparison, like BlueZ, oFono, and neard [NFC], IWD is stateful, so whenever you repair the device, it remembers and restarts when you reboot. Wpa_supplicant does have a function that lets you redo the configuration network, but it’s so hackish and problematic that nobody uses it. Everyone stores this information at a higher layer, which complicates things and creates an imbalance.”

Wpa_supplicant manages to be overly comprehensive while also failing to reveal key information. The daemon is difficult to use because it adds support for “just about every OS or wireless extension,” including many things that are never actually used, says Holtmann. “The abstraction system actually gets in your way.”

Despite its capacity to “abstract everything,” wpa_supplicant does not expose much information. “You have to know a lot about WiFi and how things like parsing are done,” said Holtmann. “I just want to connect, not read a 2,000-page document to find out I have to use a pushbutton method to gain my credentials.”

Other limitations of wpa_supplicant include its dependence on blocking operations, in which the system must wait for each peripheral to confirm an operation before it can move on to the next request. This leads to “a system just waiting for something to happen,” says Holtmann.
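The difference between a blocking design and an event-driven one can be illustrated with a small sketch. This is not IWD code (IWD is written in C); it uses Python's `asyncio` purely for illustration, and the operation names `scan` and `link_status` and their delays are hypothetical.

```python
import asyncio


async def operation(name, delay, finished):
    """Simulate a request whose confirmation arrives after `delay` seconds."""
    await asyncio.sleep(delay)
    finished.append(name)


async def run_blocking_style():
    # Each request must be confirmed before the next one is even issued,
    # so the quick status query is stuck behind the slow scan.
    finished = []
    await operation("scan", 0.1, finished)
    await operation("link_status", 0.01, finished)
    return finished


async def run_event_driven():
    # Both requests are issued up front; each is handled when its
    # confirmation arrives, so the quick one completes first.
    finished = []
    await asyncio.gather(
        operation("scan", 0.1, finished),
        operation("link_status", 0.01, finished),
    )
    return finished


blocking_order = asyncio.run(run_blocking_style())
event_driven_order = asyncio.run(run_event_driven())
print(blocking_order, event_driven_order)
```

In the blocking style the caller sits idle for the full duration of the slow request, which is the "waiting for something to happen" behavior described above.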

Wpa_supplicant has other complications like “exposing itself to user space in at least four different ways,” said Holtmann. These include the antiquated D-Bus v1 and still problematic D-Bus v2, which “swallows states,” as well as a binder interface and CTL, “which is great for users, but for a daemon is horrible.”

To make up for the limitations of D-Bus v2, the overall wireless stack long ago spawned an abstraction layer above D-Bus and below ConnMan called gSupplicant. While this helped offload work from ConnMan, the latter was still overloaded.

Reducing Complexity

With the addition of IWD, Holtmann and his team removed gSupplicant entirely. They also replaced the other user space interfaces with a single updated D-Bus layer. In addition, the new stack removed ioctl and the Netlink library (libnl), which Holtmann called “a blocking design that can’t track family changes.” Libnl was replaced with a Generic Netlink implementation that does offer family discovery.
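To make "family discovery" concrete: Generic Netlink families such as nl80211 are assigned numeric IDs at runtime, and a client asks the kernel's controller family to resolve a name to an ID. The following is a minimal sketch of that exchange in Python, not IWD's or ELL's implementation. The numeric constants follow the Linux `genetlink.h` and `netlink.h` headers; the function names are hypothetical, and `resolve_family` only works on a Linux system where the requested family is registered.

```python
import socket
import struct

NETLINK_GENERIC = 16        # netlink protocol number for Generic Netlink
GENL_ID_CTRL = 0x10         # fixed ID of the controller family
CTRL_CMD_GETFAMILY = 3
CTRL_ATTR_FAMILY_ID = 1
CTRL_ATTR_FAMILY_NAME = 2
NLM_F_REQUEST = 0x1
NLMSG_HDRLEN = 16           # struct nlmsghdr
GENL_HDRLEN = 4             # struct genlmsghdr


def build_getfamily_request(name, seq=1):
    """Build a CTRL_CMD_GETFAMILY request for the given family name."""
    value = name.encode() + b"\0"
    attr = struct.pack("=HH", 4 + len(value), CTRL_ATTR_FAMILY_NAME) + value
    attr += b"\0" * (-len(attr) % 4)          # attributes are 4-byte aligned
    genl = struct.pack("=BBH", CTRL_CMD_GETFAMILY, 1, 0)
    total = NLMSG_HDRLEN + len(genl) + len(attr)
    header = struct.pack("=IHHII", total, GENL_ID_CTRL, NLM_F_REQUEST, seq, 0)
    return header + genl + attr


def parse_family_id(message):
    """Return the family ID from a controller reply, or None if absent."""
    length, msg_type = struct.unpack_from("=IH", message, 0)
    if msg_type != GENL_ID_CTRL:              # e.g. an NLMSG_ERROR reply
        return None
    offset = NLMSG_HDRLEN + GENL_HDRLEN
    end = min(length, len(message))
    while offset + 4 <= end:
        attr_len, attr_type = struct.unpack_from("=HH", message, offset)
        if attr_len < 4:
            break
        if attr_type == CTRL_ATTR_FAMILY_ID:
            return struct.unpack_from("=H", message, offset + 4)[0]
        offset += (attr_len + 3) & ~3         # skip to the next aligned attribute
    return None


def resolve_family(name):
    """Ask the running kernel for a family ID (Linux only)."""
    with socket.socket(socket.AF_NETLINK, socket.SOCK_RAW, NETLINK_GENERIC) as s:
        s.bind((0, 0))
        s.send(build_getfamily_request(name))
        return parse_family_id(s.recv(65536))
```

On a machine with a wireless driver loaded, `resolve_family("nl80211")` would be expected to return a small integer ID; where the family is not registered, the kernel replies with an error message and the sketch returns `None`.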

Holtmann also eliminated wireless extensions (wext) because “they’re broken and hopefully they will someday be removed from the kernel,” he said. The new wireless stack retains cfg80211 and nl80211 (Netlink), although the latter has been upgraded and pushed upstream.

The OTC team developed a new Embedded Linux Library (ELL) that features tables, queues, and ring buffers to reduce the complexity of IWD while still providing basic building blocks for netlink and D-Bus. “We extended ELL with cryptographic support libraries instead of using OpenSSL, which is huge, and is not an easy interface,” said Holtmann. “In a lot of cases you need only 10 percent of OpenSSL, so we went a different route and used random numbers using the getrandom() system call, with no problems with boot up time.”
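For readers unfamiliar with `getrandom()`, the system call returns random bytes directly from the kernel without opening `/dev/urandom` or linking a crypto library. The sketch below calls it through Python's `os.getrandom` wrapper (available on Linux); ELL itself calls it from C. The helper name `random_nonce` and the fallback to `os.urandom` on other platforms are assumptions made for illustration.

```python
import os


def random_nonce(size=32):
    """Return `size` random bytes, preferring the getrandom() system call."""
    if hasattr(os, "getrandom"):
        # Thin wrapper around getrandom(2); small requests like this one
        # are returned in full once the kernel pool is initialized.
        return os.getrandom(size)
    # Assumption: fall back to os.urandom where getrandom() is unavailable.
    return os.urandom(size)


nonce = random_nonce(32)
print(nonce.hex())
```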

Finally, for ciphers and hashes Holtmann used AF_ALG, which he defined as “an encrypt interface for symmetric ciphers into the kernel.” With ELL and AF_ALG in place, the developers could eliminate OpenSSL, as well as GnuTLS and InternalTLS. The team also added tracing support for nl80211 with the help of a tool called iwmon.
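AF_ALG exposes the kernel's crypto implementations through an ordinary socket: a program binds to an algorithm by name, accepts an operation socket, writes data, and reads the result. The following sketch computes a SHA-256 digest this way using Python's `socket` module. It is an illustration rather than IWD's code, the function name `sha256_digest` is hypothetical, and the fallback to `hashlib` when AF_ALG is unavailable (non-Linux systems, restricted containers) is an assumption added so the example runs anywhere.

```python
import hashlib
import socket


def sha256_digest(data):
    """Return (digest, backend), using the kernel via AF_ALG when possible."""
    af_alg = getattr(socket, "AF_ALG", None)
    if af_alg is not None:
        try:
            with socket.socket(af_alg, socket.SOCK_SEQPACKET, 0) as alg:
                # Select the algorithm by type and name.
                alg.bind(("hash", "sha256"))
                # accept() yields the socket used for the actual operation.
                op, _ = alg.accept()
                with op:
                    op.sendall(data)
                    return op.recv(32), "kernel"
        except OSError:
            pass  # AF_ALG not permitted or algorithm not available
    # Assumption: user-space fallback for platforms without AF_ALG.
    return hashlib.sha256(data).digest(), "userspace"


digest, backend = sha256_digest(b"abc")
print(backend, digest.hex())
```

The same bind/accept pattern applies to symmetric ciphers, with additional control messages for the key and IV that this sketch does not cover.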

“Now we can start scanning and selecting networks,” said Holtmann. “We can do active and passive scanning and SSID grouping, and support open networks. We can connect to open access points and WPA2 and WPA/RSN protected access points. We have simple roaming, experimental D-Bus APIs, and EAPoL, and ELL logging for D-Bus and Generic Netlink.”

Holtmann went on to discuss new support for enterprise WiFi technologies like X.509 certificates and TLS. Recent kernels have improved X.509 support, so the OTC team is exploiting the kernel’s keyrings to better manage certificates.

Future tasks include finishing up enterprise WiFi and developing a debug API. The developers are also looking at possible integrations with Passpoint 2.0, P2P, Miracast, and Neighbor Awareness Networking (NAN). Once IWD is complete, Holtmann and the OTC will address 802.15.4, bringing improvements to 802.15.4-compliant wireless protocols like ZigBee, 6LoWPAN, and Thread.

