Docker is the excellent new container application that is generating much buzz and many silly stock photos of shipping containers. Containers are not new; so, what’s so great about Docker? Docker builds on the container features of the Linux kernel (early releases relied on Linux Containers, LXC, for this). It runs on Linux, is easy to use, and is resource-efficient.

Docker containers are commonly compared with virtual machines. Virtual machines carry all the overhead of virtualized hardware running multiple operating systems. Docker containers, however, dump all that and share the host operating system's kernel. Docker can replace virtual machines in some use cases; for example, I now use Docker instead of VirtualBox in my test lab to spin up various Linux distributions. It’s a lot faster, and it’s considerably lighter on system resources.

Docker is great for datacenters, which can run many times more containers than virtual machines on the same hardware. It also makes packaging and distributing software a lot easier:

“Docker containers wrap up a piece of software in a complete filesystem that contains everything it needs to run: code, runtime, system tools, system libraries — anything you can install on a server. This guarantees that it will always run the same, regardless of the environment it is running in.”

Docker runs natively on Linux, and in virtualized environments on Mac OS X and MS Windows. The good Docker people have made installation very easy on all three platforms.

Installing Docker

That’s enough gasbagging; let’s open a terminal and have some fun. The best way to install Docker is with the Docker installer, which is amazingly thorough. Note how it detects my Linux distro version and pulls in dependencies. The output is abbreviated to show the commands that the installer runs:

$ wget -qO- https://get.docker.com/ | sh

You're using 'linuxmint' version 'rebecca'.

Upstream release is 'ubuntu' version 'trusty'.

apparmor is enabled in the kernel, but apparmor_parser missing

+ sudo -E sh -c sleep 3; apt-get update

+ sudo -E sh -c sleep 3; apt-get install -y -q apparmor

+ sudo -E sh -c apt-key adv --keyserver hkp://p80.pool.sks-keyservers.net:80 --recv-keys 58118E89F3A912897C070ADBF76221572C52609D

+ sudo -E sh -c mkdir -p /etc/apt/sources.list.d

+ sudo -E sh -c echo deb https://apt.dockerproject.org/repo ubuntu-trusty main > /etc/apt/sources.list.d/docker.list

+ sudo -E sh -c sleep 3; apt-get update; apt-get install -y -q docker-e

The following NEW packages will be installed:

docker-engine

As you can see, it uses standard Linux commands. When it’s finished, you should add yourself to the docker group so that you can run it without root permissions. (Remember to log out and then back in to activate your new group membership.)
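
The group step can be sketched in a couple of shell functions. This is a minimal sketch, assuming a distribution that provides sudo and usermod; the function names add_to_docker_group and group_listed are made up for this example and are not part of Docker.

```shell
# Add a user to the docker group. Needs root, so it is only defined here,
# not run automatically.
add_to_docker_group() {
    sudo usermod -aG docker "$1"
}

# Succeeds if the space-separated group list in $1 contains the group in $2.
group_listed() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example use:
#   add_to_docker_group "$USER"
#   (log out and back in)
#   group_listed "$(id -nG)" docker && echo "docker group is active"
```

If the check prints nothing after you log back in, your session has not picked up the new group yet.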

Hello World!

We can run a Hello World example to test that Docker is installed correctly:

$ docker run hello-world

Unable to find image 'hello-world:latest' locally

latest: Pulling from library/hello-world

[snip]

Hello from Docker.

This message shows that your installation appears to be working correctly.

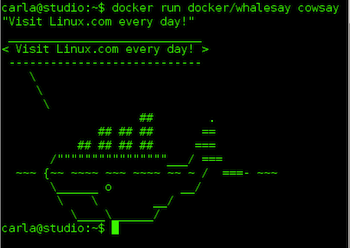

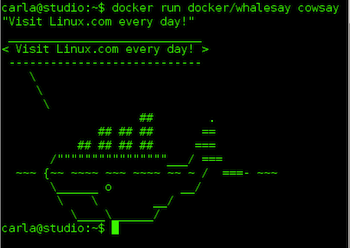

This downloads and runs the hello-world image from Docker Hub, a library of Docker images that you can access with a simple registration. You can also upload and share your own images. Docker provides a fun test image to play with, Whalesay, an adaptation of Cowsay that draws the Docker whale instead of a cow (see Figure 1 above).

$ docker run docker/whalesay cowsay "Visit Linux.com every day!"

The first time you run a new image from Docker Hub, it gets downloaded to your computer. After that, Docker uses your local copy. You can see which images are installed on your system:

$ docker images

REPOSITORY        TAG       IMAGE ID        CREATED         VIRTUAL SIZE

hello-world       latest    0a6ba66e537a    7 weeks ago     960 B

docker/whalesay   latest    ded5e192a685    6 months ago    247 MB

So, where, exactly, are these images stored? Look in /var/lib/docker.

Build a Docker Image

Now let’s build our own Docker image. Docker Hub has a lot of prefab images to play with (Figure 2), and that’s the best way to start because building one from scratch is a fair bit of work. (There is even an empty scratch image for building your image from the ground up.) There are many distro images, such as Ubuntu, CentOS, Arch Linux, and Debian.

We’ll start with a plain Ubuntu image. Create a directory for your Docker project, change to it, and create a new Dockerfile with your favorite text editor.

$ mkdir dockerstuff

$ cd dockerstuff

$ nano Dockerfile

Enter a single line in your Dockerfile:

FROM ubuntu

Now build your new image and give it a name. In this example the name is testproj. Make sure to include the trailing dot:

$ docker build -t testproj .

Sending build context to Docker daemon 2.048 kB

Step 1 : FROM ubuntu

---> 89d5d8e8bafb

Successfully built 89d5d8e8bafb

Now you can run your new Ubuntu image interactively:

$ docker run -it testproj

root@fc21879c961d:/#

And there you are at the root prompt of your image, which in this example is a minimal Ubuntu installation that you can run just like any Ubuntu system. You can see all of your local images:

$ docker images

REPOSITORY        TAG       IMAGE ID        CREATED         VIRTUAL SIZE

testproj          latest    89d5d8e8bafb    6 hours ago     187.9 MB

ubuntu            latest    89d5d8e8bafb    6 hours ago     187.9 MB

hello-world       latest    0a6ba66e537a    8 weeks ago     960 B

docker/whalesay   latest    ded5e192a685    6 months ago    247 MB

The real power of Docker lies in creating Dockerfiles that allow you to create customized images and quickly replicate them whenever you want. This simple example shows how to create a bare-bones Apache server. First, create a new directory, change to it, and start a new Dockerfile that includes the following lines.

FROM ubuntu

MAINTAINER DockerFan version 1.0

ENV DEBIAN_FRONTEND noninteractive

ENV APACHE_RUN_USER www-data

ENV APACHE_RUN_GROUP www-data

ENV APACHE_LOG_DIR /var/log/apache2

ENV APACHE_LOCK_DIR /var/lock/apache2

ENV APACHE_PID_FILE /var/run/apache2.pid

RUN apt-get update && apt-get install -y apache2

EXPOSE 80

CMD ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]

Now build your new project:

$ docker build -t apacheserver .

This will take a little while as it downloads and installs the Apache packages. You’ll see a lot of output on your screen, and when you see “Successfully built 538fea9dda79” (but with a different number, of course) then your image built successfully. Now you can run it. This runs it in the background:

$ docker run -d apacheserver

8defbf68cc7926053a848bfe7b55ef507a05d471fb5f3f68da5c9aede8d75137
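
Started this way, none of the container's ports are mapped to the host. To reach the web server from a browser on the host, you can publish a port with the -p option of docker run. The following is a sketch rather than the one true way: run_published is a hypothetical helper name, host port 8080 is an arbitrary choice, and it assumes Apache is listening on its Ubuntu default, port 80, inside the container.

```shell
# Start an image in the background and map a host port (default 8080)
# to port 80 inside the container. Prints the new container ID,
# just as `docker run -d` does.
run_published() {
    docker run -d -p "${2:-8080}:80" "$1"
}

# Example use:
#   run_published apacheserver 8080
#   then browse to http://localhost:8080
```

The -p argument reads host-port:container-port, so changing the first number moves the server to a different port on the host without touching the image.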

List your running containers:

$ docker ps

CONTAINER ID    IMAGE           COMMAND                   CREATED

8defbf68cc79    apacheserver    "/usr/sbin/apache2ctl"    34 seconds ago

And kill your running container:

$ docker kill 8defbf68cc79
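
Note that docker kill ends the container abruptly, and the stopped container stays on your system (docker ps -a still lists it). A gentler alternative, sketched here with the stop and rm subcommands, shuts the container down cleanly and then removes it. The helper name stop_and_remove is made up for this example.

```shell
# Ask the container to shut down, then delete the stopped container.
# The removal only runs if the stop succeeded.
stop_and_remove() {
    docker stop "$1" && docker rm "$1"
}

# Example use, with the container ID from `docker ps`:
#   stop_and_remove 8defbf68cc79
```

Removing a container does not touch the image it was started from, so you can launch a fresh one at any time.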

You might want to run it interactively for testing and debugging:

$ docker run -it apacheserver /bin/bash

root@495b998c031c:/# ps ax

PID TTY STAT TIME COMMAND

1 ? Ss 0:00 /bin/bash

14 ? R+ 0:00 ps ax

root@495b998c031c:/# apachectl start

AH00558: apache2: Could not reliably determine the server's fully qualified domain name, using 172.17.0.3. Set the 'ServerName' directive globally to suppress this message

root@495b998c031c:/#

A more comprehensive Dockerfile could install a complete LAMP stack, enable Apache modules, copy in configuration files, and set up everything you need to launch a complete Web server with a single command.
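
As a starting point, here is a rough sketch of such a project, written as a shell script that creates the build directory and its files. It covers only the Apache and PHP part of the stack and leaves the database out. The directory name lampsketch, the file site.conf, and its contents are placeholders invented for this example, and the package names php and libapache2-mod-php are assumptions that vary between Ubuntu releases.

```shell
# Scaffold a build directory for an Apache + PHP image.
proj=lampsketch            # hypothetical project directory name
mkdir -p "$proj"

# Placeholder virtual host configuration that gets copied into the image.
cat > "$proj/site.conf" <<'EOF'
<VirtualHost *:80>
    DocumentRoot /var/www/html
</VirtualHost>
EOF

# The Dockerfile itself. Nothing is built yet; this only writes the file.
cat > "$proj/Dockerfile" <<'EOF'
FROM ubuntu
ENV DEBIAN_FRONTEND=noninteractive
# Package names are assumptions; check them against your Ubuntu release.
RUN apt-get update && apt-get install -y apache2 php libapache2-mod-php
# Enable an extra Apache module and install the site configuration.
RUN a2enmod rewrite
COPY site.conf /etc/apache2/sites-available/000-default.conf
EXPOSE 80
CMD ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]
EOF

echo "Wrote $proj/Dockerfile"
```

From there, docker build -t lampsketch lampsketch builds the image in the same way as the earlier examples.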

We have come to the end of this introduction to Docker, but don’t stop now. Visit docs.docker.com to study the excellent documentation and try a little Web searching for Dockerfile examples. There are thousands of them, all free and easy to try.
