“If you’re a bit tired, this is a presentation on cache maintenance, so there will be plenty of opportunity to sleep.” Despite this warning from ARM Ltd. kernel developer Mark Rutland at his recent Embedded Linux Conference presentation, Stale Data, or How We (Mis-)manage Modern Caches, the talk was something of an eye-opener, at least as far as cache management presentations go.

For one thing, much of what you think you know about the subject is probably wrong. It turns out that software — and computer education curricula — have not always kept up with new developments in hardware. “Cache behavior is surprisingly complex, and caches behave in subtly different ways across SoCs,” Rutland told the ELC audience. “It’s very easy to misunderstand the rules of how caches work and be lulled into a false sense of security.”

SoC Tricks

Even within a single chip architecture, every system-on-chip (SoC) is integrated slightly differently. Modern SoCs perform a number of tricks, such as speculation, to offer better power/performance, and it is easy for the unwary developer to be surprised by their side effects on caches.

“By hitting the cache, you can avoid putting traffic on the memory interconnect, which can be clocked at a lower speed,” said Rutland. “The CPU can do more work more quickly and go to sleep more quickly. Modern CPUs do fewer write-backs to memory and try to keep data in caches for as long as possible. They allow multiple copies of memory locations to exist.”

Almost every CPU does some automatic prefetching and buffering of stores, and many also do out-of-order execution and some level of speculation. As a result, “your code might not match the reality of what the CPU happens to be doing,” said Rutland. “The CPU might speculate something completely erroneously, and start preloading data into caches, and it might turn out that those accesses never existed in your code. It’s incredibly nondeterministic.”

Not only is this behavior “really difficult” to predict, but “over time the CPU gets more aggressive, so it will be even more difficult,” added Rutland. Other trends that add to the complexity are the growth in multi-core SMP systems and complex configurations such as big.LITTLE, in which different CPU implementations can exist within a single system. Newer technologies like coherent DMA masters can solve some cache problems but also add more factors to juggle. “We’re also beginning to see things like GPUs accessing memory a lot in weird and varied patterns,” said Rutland.

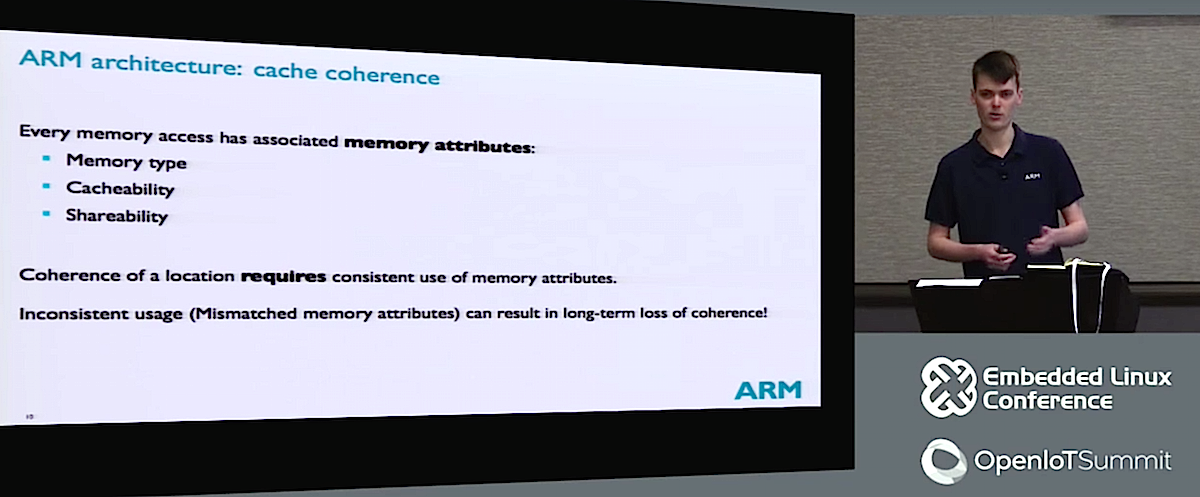

In this chaotic environment, it can be difficult to reason about cache coherence, which Rutland defines as “two accesses appearing to use the same copy, rather than the caches themselves having the same property of data.” However, as Rutland noted, all this hardware follows a common set of rules for cache behavior, and those rules are simpler to think about than the hardware itself.

When following these rules, “you have to be a lot more stringent in your cache management to make sure you get what you expect,” said Rutland. “With all these complex cache coherence protocols, misuse or inconsistent use can lead to a long-term loss of coherence. It’s incredibly important to reason about the behavior of the caches in the background and understand what cache maintenance primitives are available.”

Explaining the Mystery of Caches

Rutland then set out to explain the mystery of caches, at least on modern multi-core ARMv8 SoCs. Most of the guidelines, which combine ARM Reference Manual rules and street wisdom about CPU caching behavior, are also applicable to ARMv7.

Rutland started by discussing the cacheability options for Normal memory locations: non-cacheable, write-through, and write-back. Write-through, which is typically used for frame buffers, “means that when you write to a location, both memory and caches are updated at the same time, but reads might only look in caches,” said Rutland. Operating systems typically use write-back, where “you don’t really care if the memory is up to date.” These attributes are controlled separately for the inner caches close to a CPU cluster and for the outer caches.
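To make the write-through versus write-back distinction concrete, here is a toy C model of a single cached location. It is not how hardware is built: `toy_line`, `toy_store`, and `toy_evict` are hypothetical names invented for illustration, and the model ignores reads, line granularity, and coherence. It only counts how many stores reach memory under each policy, which is the interconnect traffic that write-back caching is meant to save.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Toy model: one cached copy of one memory location, plus a counter of
 * writes that go out to memory over the interconnect. Not a real cache. */
enum write_policy { WRITE_THROUGH, WRITE_BACK };

struct toy_line {
    enum write_policy policy;
    uint32_t cached;      /* value held in the cache */
    bool dirty;           /* cache newer than memory (write-back only) */
    uint32_t memory;      /* value held in main memory */
    unsigned mem_writes;  /* writes that reached memory */
};

static void toy_init(struct toy_line *l, enum write_policy p, uint32_t v)
{
    l->policy = p;
    l->cached = v;
    l->memory = v;
    l->dirty = false;
    l->mem_writes = 0;
}

static void toy_store(struct toy_line *l, uint32_t v)
{
    l->cached = v;
    if (l->policy == WRITE_THROUGH) {
        l->memory = v;          /* memory and cache updated together */
        l->mem_writes++;
    } else {
        l->dirty = true;        /* memory left out of date for now */
    }
}

/* Eviction: a dirty write-back line is written out exactly once. */
static void toy_evict(struct toy_line *l)
{
    if (l->dirty) {
        l->memory = l->cached;
        l->mem_writes++;
        l->dirty = false;
    }
}
```

Storing 100 values through a write-through line costs 100 memory writes; the write-back line costs none until it is evicted, at which point a single write carries the final value.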

Rutland went on to cover shareability domains: non-shareable, inner-shareable, outer-shareable, and full-system. A single OS or hypervisor typically uses the inner-shareable domain, whereas outer-shareable domains allow multiple independent OSes to run on a large, complex, multi-core system.
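On AArch64, the shareability of a page is one of the attributes the MMU takes from the translation tables. As a sketch, assuming the VMSAv8-64 stage 1 block/page descriptor format (where the SH[1:0] field occupies bits [9:8]) and ignoring later architecture extensions that reuse those bits, the field could be decoded as follows. The helper names are hypothetical, and the full-system domain has no SH encoding of its own here.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Encodings of SH[1:0] in a VMSAv8-64 stage 1 block/page descriptor. */
enum shareability {
    SH_NON      = 0x0,
    SH_RESERVED = 0x1,
    SH_OUTER    = 0x2,
    SH_INNER    = 0x3,
};

#define DESC_SH_SHIFT 8
#define DESC_SH_MASK  (UINT64_C(0x3) << DESC_SH_SHIFT)

/* Extract the shareability field from a raw descriptor value. */
static enum shareability desc_shareability(uint64_t desc)
{
    return (enum shareability)((desc & DESC_SH_MASK) >> DESC_SH_SHIFT);
}

static const char *shareability_name(enum shareability sh)
{
    switch (sh) {
    case SH_NON:   return "non-shareable";
    case SH_OUTER: return "outer shareable";
    case SH_INNER: return "inner shareable";
    default:       return "reserved";
    }
}
```

A kernel running as a single OS would typically set `SH_INNER` on its Normal memory mappings, matching the inner-shareable usage described above.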

Then there are cache states to keep in mind, as well as cache coherence protocols such as MSI (Modified, Shared, Invalid). “It’s very difficult to reason about the precise state of caches because they may have coherence protocol specific data associated with cache entries,” said Rutland.
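For readers who have not met MSI before, the textbook version tracks each line in each cache as Modified, Shared, or Invalid and reacts both to the local CPU's accesses and to requests it snoops from other caches. The sketch below is that simplified protocol, not the one any particular ARM interconnect implements (real systems use richer protocols with more states); the event and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

enum msi_state { MSI_INVALID, MSI_SHARED, MSI_MODIFIED };

enum msi_event {
    PR_READ,    /* this CPU reads the line */
    PR_WRITE,   /* this CPU writes the line */
    BUS_READ,   /* another cache reads the line */
    BUS_READX,  /* another cache reads with intent to modify */
};

struct msi_step {
    enum msi_state next;
    bool flush;   /* this cache must write back its dirty copy */
};

/* One transition of the textbook MSI protocol for a single line. */
static struct msi_step msi_transition(enum msi_state s, enum msi_event e)
{
    struct msi_step r = { s, false };
    switch (e) {
    case PR_READ:
        if (s == MSI_INVALID)
            r.next = MSI_SHARED;      /* miss: fetch a shared copy */
        break;
    case PR_WRITE:
        r.next = MSI_MODIFIED;        /* obtain exclusive ownership */
        break;
    case BUS_READ:
        if (s == MSI_MODIFIED) {      /* supply data, keep a shared copy */
            r.next = MSI_SHARED;
            r.flush = true;
        }
        break;
    case BUS_READX:
        if (s == MSI_MODIFIED)
            r.flush = true;           /* hand over the dirty data */
        r.next = MSI_INVALID;         /* another cache now owns the line */
        break;
    }
    return r;
}
```

A snooped request against a Modified line forces a write-back that the local CPU never asked for, which is one reason the state of a line is hard to predict from the code alone.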

Cache states can be grouped into invalid, clean, and dirty. As you might expect, the most challenging is the dirty state. “Caches can write back dirty lines at any time for any reason, such as making space for things that they erroneously speculated,” said Rutland.
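The danger of a dirty line being written back at an arbitrary moment can be shown with a small simulation. Assume, hypothetically, a non-coherent DMA master that writes to memory without looking in the CPU's cache, while the CPU still holds a dirty copy of the same line. The names below are illustrative only.

```c
#include <assert.h>
#include <stdint.h>

/* Per-line states grouped as in the talk. */
enum line_state { LINE_INVALID, LINE_CLEAN, LINE_DIRTY };

struct sim {
    enum line_state state;
    uint32_t cached;   /* CPU cache copy */
    uint32_t memory;   /* main memory */
};

/* Write-back cache: the store only updates the cached copy. */
static void cpu_write(struct sim *s, uint32_t v)
{
    s->cached = v;
    s->state = LINE_DIRTY;
}

/* A non-coherent device writes memory directly, bypassing the cache. */
static void device_write(struct sim *s, uint32_t v)
{
    s->memory = v;
}

/* The hardware may evict at any time; a dirty line is written back. */
static void spontaneous_evict(struct sim *s)
{
    if (s->state == LINE_DIRTY)
        s->memory = s->cached;
    s->state = LINE_INVALID;
}
```

After `cpu_write(&s, 1)`, `device_write(&s, 42)`, and `spontaneous_evict(&s)`, memory holds 1: the device's data has been silently overwritten by the stale dirty line.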

Surprisingly to many, caches are never fully turned off in ARM systems. “Even when the MMU is off or the CPU isn’t making cacheable accesses, data can still sit in the cache or dirty data can be written back at any arbitrary point in time,” said Rutland.

Cache Maintenance

Rutland went on to discuss the different types of cache maintenance operations: clean, which writes any dirty data in a line back out to memory so that the cached copy is no longer dirty; invalidate, which discards the cached copy; and clean+invalidate, which combines the two. There is, however, no such thing as “flushing” in the ARM architecture, he added, noting that the term is ambiguous. “People should stop talking about flushing the caches. It means absolutely nothing.”
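The three operations can be expressed on a toy model of one cache line. This is a sketch for intuition, not real maintenance code: on AArch64 the per-line instructions would be `DC CVAC`, `DC IVAC`, and `DC CIVAC`, but the functions below only manipulate a C struct, and all of their names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

enum line_state { LINE_INVALID, LINE_CLEAN, LINE_DIRTY };

/* Toy model of one cache line and the memory behind it. */
struct line {
    enum line_state state;
    uint32_t cached;
    uint32_t memory;
};

static void cpu_write(struct line *l, uint32_t v)
{
    l->cached = v;            /* write-back: only the cache is updated */
    l->state = LINE_DIRTY;
}

static uint32_t cpu_read(struct line *l)
{
    if (l->state == LINE_INVALID) {   /* miss: refill from memory */
        l->cached = l->memory;
        l->state = LINE_CLEAN;
    }
    return l->cached;                 /* hit: memory is not consulted */
}

/* Non-coherent device access: touches memory, never the cache. */
static void device_write(struct line *l, uint32_t v) { l->memory = v; }

/* Clean: write dirty data back; the copy stays in the cache. */
static void line_clean(struct line *l)
{
    if (l->state == LINE_DIRTY) {
        l->memory = l->cached;
        l->state = LINE_CLEAN;
    }
}

/* Invalidate: drop the cached copy. Dirty data is discarded. */
static void line_invalidate(struct line *l) { l->state = LINE_INVALID; }

/* Clean+invalidate: write back if dirty, then drop the copy. */
static void line_clean_invalidate(struct line *l)
{
    line_clean(l);
    line_invalidate(l);
}
```

Used in sequence, the model mirrors the usual non-coherent DMA pattern: clean before a device reads a buffer so it sees the CPU's writes, and invalidate before the CPU reads data a device has written, or the CPU keeps hitting its stale copy. It also shows why invalidate alone is risky on a dirty line: the CPU's last write is simply thrown away.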

Rutland also warned against using the popular Set/Way instructions for anything other than the power-up and power-down cache management they are defined for, unless one is intimately aware of how that particular CPU behaves with Set/Way. Long story short: “Misuse of Set/Way can result in a complete loss of coherence…and cause horrible problems,” said Rutland. “Instead you should use VA [virtual address] cache maintenance, which gets a set of memory attributes from the MMU, including the shareability domain of that VA.”
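VA-based maintenance is usually written as a loop over every cache line that overlaps a buffer. The sketch below assumes the smallest data cache line size is taken from `CTR_EL0.DminLine` (bits [19:16], log2 of the line size in 4-byte words). Because it is meant to run anywhere, the per-line operation is a hypothetical callback rather than a real `DC CVAC` or `DC CIVAC` instruction, and the barrier a real implementation needs after the loop is only noted in a comment.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Smallest D-cache line size in bytes, from a CTR_EL0 value.
 * DminLine (bits [19:16]) is log2 of the line size in 4-byte words. */
static size_t dcache_line_size(uint64_t ctr_el0)
{
    unsigned dminline = (unsigned)((ctr_el0 >> 16) & 0xF);
    return (size_t)4 << dminline;
}

/* Hypothetical per-line operation. On real hardware this would issue
 * e.g. DC CVAC (clean) or DC CIVAC (clean+invalidate) for va. */
typedef void (*line_op_fn)(uintptr_t va, void *ctx);

/* Apply op to every line overlapping [start, start + len), beginning at
 * start rounded down to a line boundary. Returns the number of lines.
 * Assumes start + len does not wrap around the address space. */
static size_t for_each_dcache_line(uintptr_t start, size_t len, size_t line,
                                   line_op_fn op, void *ctx)
{
    assert(line != 0 && (line & (line - 1)) == 0); /* power of two */
    if (len == 0)
        return 0;

    uintptr_t end = start + len;
    size_t count = 0;
    for (uintptr_t va = start & ~((uintptr_t)line - 1); va < end; va += line) {
        op(va, ctx);
        count++;
    }
    /* A real implementation would follow the loop with a DSB so the
     * maintenance completes before any dependent access. */
    return count;
}

/* Stand-in operation that just records which lines were visited. */
struct line_log {
    uintptr_t first;
    uintptr_t last;
    size_t n;
};

static void log_line(uintptr_t va, void *ctx)
{
    struct line_log *lg = ctx;
    if (lg->n == 0)
        lg->first = va;
    lg->last = va;
    lg->n++;
}
```

With 64-byte lines, a 0x80-byte buffer at 0x1010 touches the lines at 0x1000, 0x1040, and 0x1080. Every operation targets a specific address, so the MMU's attributes for that VA (including its shareability) decide how far the maintenance is broadcast, which is exactly what Set/Way operations lack.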

After answering questions from the audience, Rutland concluded with: “Thank you for staying awake.” You’re welcome, but it’s tough sleeping when hearing about the horrors of modern cache maintenance.
